Consider the Llama

Are closed-source AI models doomed?

The same issue exists in AI today. The frontier is moving too fast, and the frontier of the entire AI academic and industry research community almost certainly has more firepower than your single company… As such, you don’t get any lasting value unless you’re able to make that year or two advantage actually count in building a lasting moat—which, if you think about it, is actually extremely hard.

I wrote this publicly in September 2023 in (still) one of my most popular pieces, “Most AI Startups are Doomed”, but have been saying this for much longer.

A few days ago, Meta released their 405B parameter model for Llama 3.1, and improved their already released smaller models (8 and 70 billion) with larger context windows and better performance across the board.

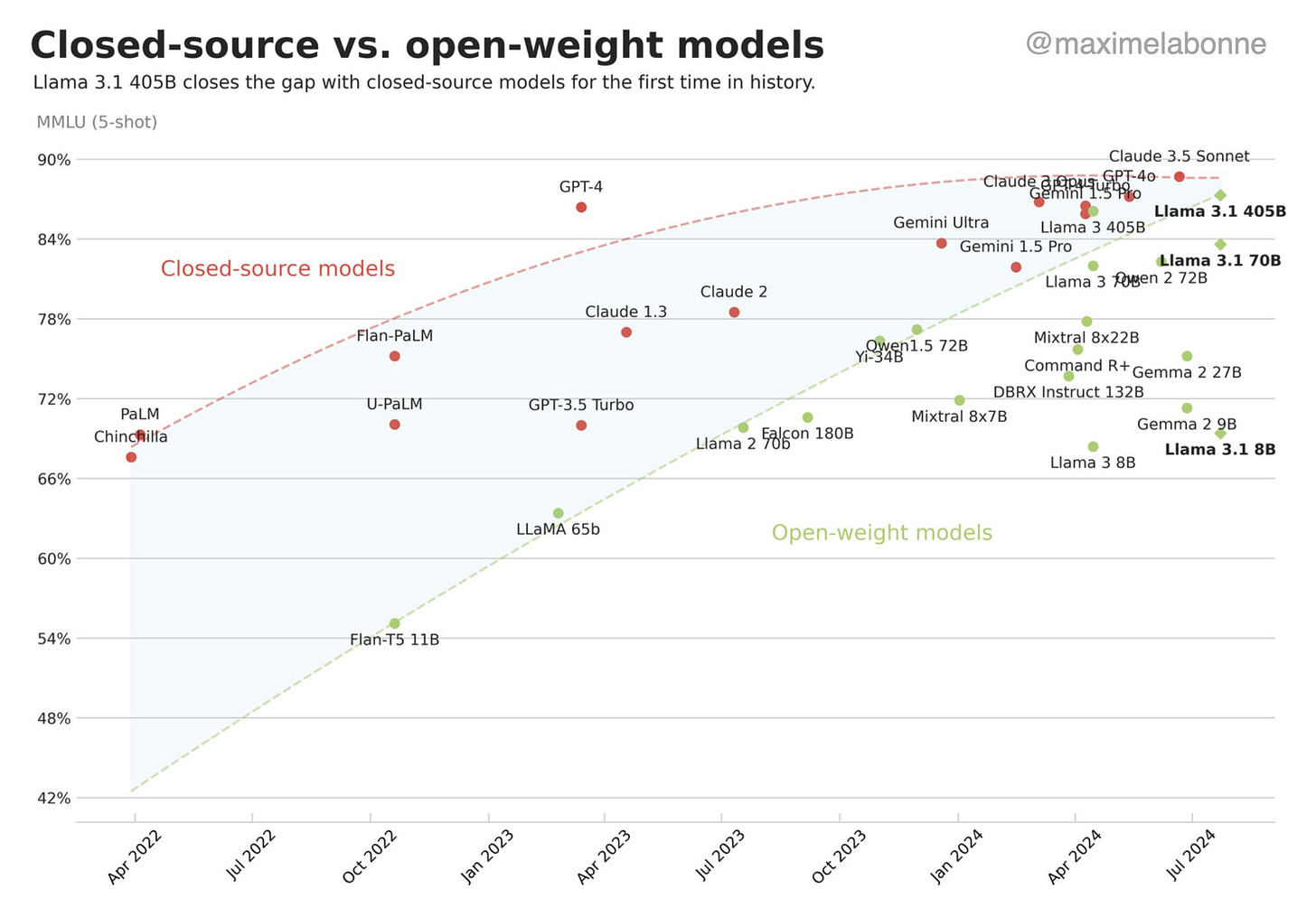

With this, we’ve now seen open-source models reach closed-source model capabilities on various benchmarks. Where OpenAI’s ChatGPT seemed untouchable, they’re now quite touched. For a company that’s on track to lose $5B this year, this is, in my professional opinion, a problem. As such, staying on-theme with what people seem to like from this Substack, let’s talk about doom again—specifically, are the closed-source AI companies doomed?

This will not be an in-depth look at how amazing Llama 3.1 is. Excellent researchers and writers have already written great pieces that I suggest you read if you want that. Here, I’m going to reemphasize the point I made years ago, talk about how much of an issue this is for companies like OpenAI, and also talk about the interesting directions that specialist models have taken (vs. “omni-models” like ChatGPT and Gemini).

Pure, closed-source LLM companies have a problem

A key difference between Meta and closed model providers is that selling access to AI models isn’t our business model. That means openly releasing Llama doesn’t undercut our revenue, sustainability, or ability to invest in research like it does for closed providers. (This is one reason several closed providers consistently lobby governments against open source.)

Mark Zuckerberg wrote this a few days ago in a piece titled “Open Source AI Is the Path Forward.” It was both a manifesto and a victory lap accompanying the release of Llama 3.1 405B.

It’s definitely worth a read. He makes valid points about why Linux ultimately took off and argues that AI will follow a similar path. He discusses the need for transparency and argues that progress is faster with a broader community of minds than within any single company, which I concur with and have also said before.

None of this is particularly surprising.

However, it’s still worth emphasizing his point that “selling access to AI models isn’t our business model.”

Indeed, it isn’t. The better these models are, the better (or more insidious, as some might say) the experience is on Facebook, Instagram, and similar products. In fact, the same is true of Google. The better these models are, the better their “search” (or whatever it becomes) is… And, much, much more importantly to both Meta and Alphabet, the better their ad targeting is.

Do you know what companies this doesn’t apply to? OpenAI, Anthropic and the like—companies that sell access to AI models. These companies inherently require their products to be much better than open source in order to up-charge. They also don’t have some other product they sell that gets improved with better AI overall.

(X.ai does technically exist as well, though we’re still waiting around for the quite overdue Grok 1.5 non-preview.)

Now, their lofty valuations (which I’ve been on the record multiple times saying I don’t think they’ll ultimately be able to justify) require much more than just “better.” Most of them are basically priced to dominate the world with their mono-models monopolizing AI. Alternatively, they are priced for an oligopoly overseeing a massive new economy of LLM-driven AI. Either way, we’re talking about mega/mono-model dominance.

Let’s put aside this vision, which seems increasingly unlikely. They can still be great businesses, similar to how Adobe products command a massive premium even though plenty of strong, free open-source competitors exist. However, part of that is because the UI, tools, and ecosystems of the open-source competitors are clunky. Sure, GIMP and Krita are great. But most professionals will still not be happy to dump Photoshop or Illustrator and be forced to use alternatives (Canva, on the other hand…). You can have a perfectly reasonable business with just a better product that requires forking over a bit of money. Unfortunately for these closed-source, pure-play AI companies, the open-source AI models are not materially worse anymore.

Burning $5 billion to keep OpenAI’s models as good as Meta’s is definitely a losing proposition. The worst part is that the burn can’t stop, because if it does, these open-source models will pass OpenAI, Anthropic, and the rest, and stay ahead of them.

Pure model-peddlers aren’t the only companies that exist though

Note that I didn’t mention Mistral. Or Microsoft.

Mistral is a weird one. They are the standalone open-source model company. They are also French, which is a problem if the EU gets cut off from NVIDIA GPUs and the rest of the world’s models. Putting aside the EU shooting itself in the face continuously, Mistral’s bet is that their approach engenders more trust and adoption, similar to Linux, Docker, and other “infrastructure” technologies. No company wants to be locked in without recourse, even if they’re happy to pay massive sums of money for support and continued development of the technology in areas they care about.

I’ve long said that AI is quite different from traditional software, but this is more a business operation question—avoiding a “single point of failure” and counterparty monopoly pricing power is worth a lot. This gets customers on board fast enough with open-source options to make it a surprisingly profitable business model.

Does this all mean Mistral will succeed? Maybe, though they face plenty of headwinds. Aside from the EU business, they need to build up a massive amount of computing infrastructure, continue to maintain it, and continue to pour money into R&D without an easy way to amortize these investments (unlike Meta and Alphabet, which can spread these massive investments across their operations). However, their refined, forked, and specialist models make an interesting argument for why this could work. Codestral, Mistral’s specialist code model, is a compelling application of this concept.

On the other hand, Microsoft is selling access to models, most notably OpenAI’s. However, this is still different. It’s definitely not Microsoft’s only business. Just like Meta and Alphabet, they can apply improvements in AI (from anyone) to their overall business: in Microsoft’s case, to their enterprise software offerings. Finally, they are also happy to just “stock” any product in their cloud computing “store.” Whether or not OpenAI succeeds, Azure can make money just fine by offering easy access to anyone’s models. The same applies, almost entirely analogously, to Amazon with AWS and Anthropic.

Monopoly Model or Specialists?

Finally, one of the big questions—which also matters a lot to OpenAI, Anthropic, X.ai and similar—is whether a mega-model/omni-model is the way the world will go, or if it’ll splinter into much smaller, more specialized models for specific tasks.

The jury is still out, though for a while, the mono-model argument was winning. ChatGPT, most notably, is not only a big mono-model, but also multi-modal (yes, I know there are different versions, including with 4o and 4—that still doesn’t really change the argument). One model to dominate them all. Gemini also follows this logic.

It was winning for a while because these giant models exhibited interesting emergent properties where they got very good at tasks that they weren’t explicitly trained on. This was in part because they could implicitly apply knowledge transferred from one application to another. This is actually a super-important concept (in general, but also from the perspective of an investor in AI) called transfer learning.

The aforementioned Codestral (coding specialist), Mistral Instruct (kind of a “mono-model,” but also a specialist with wide “world knowledge”), and DeepSeek Coder (as one can guess, also a coding specialist) are all examples of specialist models that excel at specific tasks.

OpenAI itself also has these, including Whisper, which is both open-source and a specialist in voice-to-text translation/transcription. This one is a little different, since it’s a neural network but not an LLM, and, of course, it isn’t their focus.

Nevertheless, the advantages of these models are generally:

- They require far fewer resources in computation and memory
- They work very well for their specific tasks
- A lot of them can be run on-device, cutting down on latency and potentially cost

The downside is most of them still aren’t better than the mega-models, even in their specialist areas. But the tradeoffs are often worth it. In fact, Apple Intelligence is mostly taking this route and only offloading to a mega-model—in this case, ChatGPT—when the internal, specific models can’t handle it.

Where does this leave us?

Well, the future is definitely still in flux within the AI sector. Nothing is set yet, and I still think that most AI startups are actually doomed. Money within the sector is still, in my opinion, massively misallocated.

However, things change really fast.

We’ll see how things play out, but as it stands, the world is going towards what I think is a reasonable prior: AI will be broadly available (and the closed-source model companies will get commoditized). Interestingly, against at least my prior, the possibility of many more specialist models being the dominant modality seems to be rising.