Fear and Loathing in 7nm

How much does Huawei and SMIC's new chip matter? And what should the US do?

In case you haven’t heard the news, US sanctions have failed, China has achieved semiconductor independence, and the US must wage total economic war to have any hope of changing this story. Semianalysis’s piece is excellent, but I think it overstates the case.

Many other sources, including Jordan at ChinaTalk, have a somewhat more nuanced take on what Huawei’s achievement means.

If you want a deep analysis of the technical guts of the development, go read the pieces from Semianalysis and ChinaTalk. Here, I’ll give the executive summary plus some thoughts on the implications.

Wait, what happened?

Huawei released a new smartphone, the Mate 60 Pro. On its face, it’s a bit mind-boggling that a phone launch is making such a splash in semiconductor and China-analyst circles. It’s happening because:

The chip is built using a “7nm” process (which is behind TSMC’s leading edge, but equivalent-ish to Intel’s leading edge)

The chip has a 5G modem that is as good as Qualcomm’s

Huawei did this with SMIC, and both companies are heavily restricted by US sanctions on equipment, IP, and component sales

In summary, this might mean that China has “broken through” the US’s blockade on advanced semiconductor technologies reaching China. In particular, it would mean that the attempt to keep China away from leading-edge (best, smallest, most power-efficient) chips by cutting off its access to equipment and IP from US, Dutch, German, and Japanese firms has failed.

But have sanctions failed?

Note that I said it might mean that. There are some big questions that remain unanswered:

What is the yield? If all you care about is building a leading-edge chip, you can have some poor postdoc or PhD student practically build one atom-by-atom, or use some other utterly unscalable process (e.g. build a million chips and hope that one comes out usable by sheer luck). That isn’t impressive. It’s just sad. Modern semiconductors aren’t a game of “doing it once”; they’re about scale. No one cares that you can make a single chip, or a few hundred, or even a few thousand. What matters is near-zero marginal cost at a scale of millions. Right now, everyone is guessing at the yield, and we don’t have definitive answers.

How exactly did they do this? Was it done with domestic equipment? That’s unlikely, for many reasons. But was it done with a lot of foreign equipment that SMIC and Huawei have free(r) access to? Maybe. There’s a more in-depth discussion of EUV, DUV, and whatnot that most readers here probably don’t care about, but suffice it to say, it matters whether SMIC barely made this work or is just getting started. DUV (the older-generation lithography technology, as opposed to the EUV machines China cannot buy) has a hard technical limit on how far it can be pushed, which means advancement will eventually hit a wall.

Was this done with or without help from international companies? Everyone knew the sanctions were fairly “leaky.” I can’t remember a single serious analyst who thought the restrictions would severely cripple China’s ability to reach near-leading-edge chips today. However, there’s a question of how much this relied on explicit “wink and nod” collaboration, with companies like Lam Research or ASML providing support (“we have this technical problem… that definitely isn’t related to leading-edge chips”), versus how much was Huawei and SMIC figuring it out themselves. The latter is both possible and better for China, because outside help can always be cut off: restrictions can be tightened, or things can get “too hot” for these companies to keep providing even support that has a veneer of legitimacy.
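
On the yield question, a rough way to see why it matters so much: the cost of each working chip is roughly the cost of processing a wafer divided by the number of dies on it that actually work. The sketch below assumes that deliberately simplified model (it ignores binning, testing, packaging, and equipment depreciation), and the function name `cost_per_good_die`, the wafer cost, and the die count are all hypothetical placeholders, not SMIC, Huawei, or TSMC figures.

```python
# Minimal sketch of why yield drives the cost of each usable chip.
# Simplified model (an assumption): ignores binning, testing, packaging,
# and equipment depreciation. All numbers are hypothetical placeholders,
# not SMIC, Huawei, or TSMC figures.


def cost_per_good_die(wafer_cost: float, dies_per_wafer: int, yield_fraction: float) -> float:
    """Cost of one working die = wafer cost / number of working dies on the wafer."""
    if not 0 < yield_fraction <= 1:
        raise ValueError("yield_fraction must be in (0, 1]")
    if dies_per_wafer <= 0:
        raise ValueError("dies_per_wafer must be positive")
    good_dies = dies_per_wafer * yield_fraction
    return wafer_cost / good_dies


if __name__ == "__main__":
    WAFER_COST = 10_000.0  # hypothetical cost to process one wafer, in dollars
    DIES_PER_WAFER = 500   # hypothetical number of candidate dies per wafer
    for y in (0.9, 0.5, 0.1):
        cost = cost_per_good_die(WAFER_COST, DIES_PER_WAFER, y)
        print(f"yield {y:.0%}: ${cost:,.2f} per working chip")
```

With these made-up inputs, dropping from 90% to 10% yield makes each working chip nine times as expensive, which is roughly the gap between a real product and a money-losing showcase.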

Overall, if (in the worst case for China) it’s a wildly money-losing operation that pushes DUV to its absolute limit and relies on a lot of explicit technical support from US companies, this isn’t much of a threat. On the other hand, if it’s a high-yield process born of China figuring out how to make do and advance its domestic capabilities, we’re likely to see more cutting-edge chips come out. The reality is likely somewhere in between.

So, should we wage all-out economic warfare?

At the end of Semianalysis’s piece, the authors basically advocate something akin to carpet bombing the Chinese semiconductor industry.

Biden has spent a lot of time declaring that he “doesn’t want to contain China.” That matters for turning down the temperature and slowing the march toward potential kinetic conflict, especially as China makes small but important gestures (like disappearing the infamous wolf-warrior diplomat, Qin Gang). An all-out restriction campaign would wipe out all of those US gestures and then some.

But let’s put politics aside, which is hard to do these days when, like me, you work in technologies with extreme geopolitical salience.

Is it a good idea to try to restrict China in this way?

Too much in one direction or another

I think extreme positions are generally silly. It’s an extraordinarily dumb argument that the US’s best interest lies in letting technology, IP, money, and expertise flow completely unfettered into developing China’s cutting-edge tech capabilities.

However, the other extreme is also silly to me. Having government, moving at the speed of government, keep up with and precisely restrict specific technologies is impossible. Even private-sector tech players couldn’t do it, because technology famously moves in disruptive directions that are impossible to predict.

To some degree, narrowly targeted restriction isn’t what Semianalysis (and now some other analysts) are suggesting. Although the list names “specific targets,” my read is that it amounts to a complete chokehold. That’s a smart move, if you assume everything will stay the same as it is now.

As a VC, I’ve seen too many times that technology does not stay the same, and that’s especially true right now.

There’s a risk that if we heavily restrict and protect our domestic (and friend-shored) technology industries, we might be fighting the last war.

A historical analogue

In the 1980s, Intel, which brought the first commercial DRAM to market, exited the DRAM business. Japan was ascendant. Japanese companies had basically taken over the DRAM industry and much of the broader semiconductor industry. A decade earlier, Western companies had scoffed at Japan as a maker of low-end goods. The Japanese, the thinking went, had the cheap labor for assembly and other low-value-added work, but they would never have the creativity to actually seize a technological advantage.

By the late 1980s and early 1990s, the narrative had completely shifted. Sony co-founder Akio Morita and the famous provocateur politician Shintaro Ishihara (you might say “alt-right” in a modern US politics context) co-wrote a book called “The Japan That Can Say No,” chiding the US for its laziness, fundamental flaws, and inevitable decline as Japan took its natural place as the world’s technological and economic leader.

Of course, we know how the story ends. Instead of Japan taking over the world, its inflated real estate market collapsed, the US put technological sanctions and restrictions on it (helping promote Taiwan and Korea), and US companies moved on to CPUs and other higher-value areas while DRAM became commoditized.

I mention the first two points because they look remarkably like what we’re seeing in China today.

I include the last point because it could play out in parallel this time too… or we could change the outcome, potentially to our detriment.

The Innovator’s Dilemma

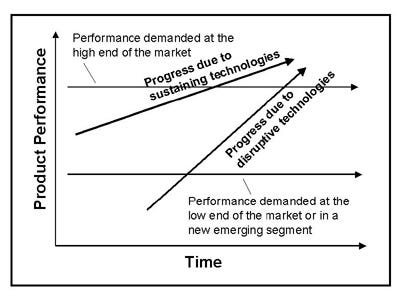

If we overprotect our industries within the realm of leading-edge semiconductors, we may miss the next disruptive innovation. According to Clay Christensen’s The Innovator’s Dilemma, disruptive new technologies tend not to be better than incumbent technologies at first, which makes it hard to justify a deliberate investment in them. In the long run, however, they tend to overtake and dominate the market as they mature.

An example most will remember well is Netflix vs. Blockbuster. At first, streaming was slow, expensive, and unfamiliar to the market. It was hard to see why Netflix would ever succeed against Blockbuster. At the same time, Blockbuster and eagle-eyed analysts could see the potential danger of streaming, but it was hard to justify a massive investment that would make Blockbuster’s existing contracts and massive inventory of home videos completely obsolete. The result? Blockbuster rode its original advantage all the way into extinction.

I don’t know what the next disruptive innovation will be. Quantum or photonics chips (I, personally, don’t think so, but who knows)? Something we can’t imagine right now? It’s hard to say, but I’d be careful about overprotecting and coddling our incumbents and insulating them even further from competition.

To some degree, I understand why analysts are calling for the restrictions they are.

But I’d argue that their implicit premise is that the US’s technological advantage comes purely from a historical head start.

I vehemently disagree: we’ve seen this catch-up picture before, most recently with Japan. The US’s true advantage isn’t its current technological base, but its ability to keep innovating and reinventing itself. It’s essentially the ability to keep disrupting ourselves. There are some real worries about the US “stagnating,” especially in physical construction, politics, and similar areas, but so far, as the explosion in AI adoption shows, that hasn’t happened in broader US tech.

I’d be careful about locking us into the current semiconductor paradigm, because it’s hard to know what the future holds. I’m also pretty sure (thanks to history… and my vantage point as a VC watching these technological shifts in deep tech) that what comes next won’t look much like what’s here now.