Ten Years of AI in Review

From 2015 to 2025

When did the current AI cycle begin? There are many ways of slicing it. You could go all the way back to the mid-to-late 1980s, when backpropagation, our current method of training deep neural networks, was rediscovered and made more practical. Maybe it was the mid-2000s, when tech companies were using machine learning more and more. Maybe it was November 2022, when ChatGPT was released.

I personally say around 2015.

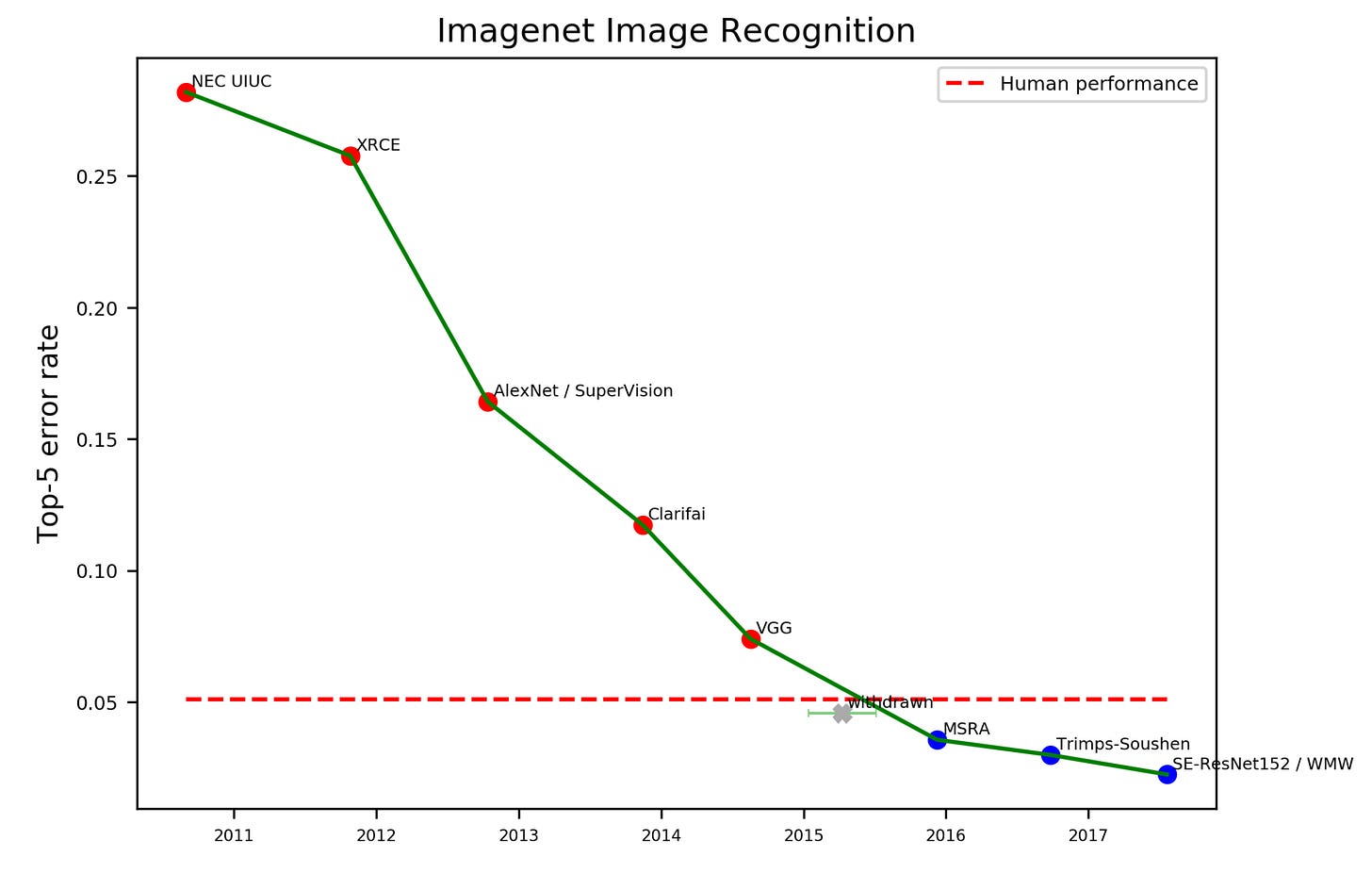

AlexNet, which was created by Alex Krizhevsky, Ilya Sutskever (among other things, a co-founder of OpenAI), and Geoffrey Hinton, came earlier, in 2011-2012. But we hit human-level performance on ImageNet around 2015, in particular with models from Microsoft Research Asia (MSRA) that were very large for their time.

Visual recognition, built on one of our most fundamental senses, was no longer the exclusive domain of humans.

It was also the year that Yann LeCun, Yoshua Bengio, and Geoffrey Hinton published their excellent Nature review paper, “Deep Learning,” helping announce that the field had arrived.

On a sentimental level, it’s also when I started pivoting toward AI in my career: starting in 2013, I explicitly explored machine learning, took graduate seminars at Berkeley, and eventually began investing in the field by 2016. But that was obviously correlated with seeing where the field was going.

I’ve always said 2015 (or thereabouts) was “when AI really kicked off,” but it’s also convenient that it now makes for a nice, round decade.

A Decade of AI Milestones

This is just a repeat of my chart above, but I know it’s hard to read on some platforms.

2015: Human-level performance in image recognition—quickly followed by progress in game-playing and machine translation.

2016: AlphaGo defeats Lee Sedol using a combination of deep learning, search, and reinforcement learning.

2017: Google publishes “Attention Is All You Need,” introducing the Transformer architecture.

2018: BERT demonstrates that natural language processing need not be task-specific.

2020: GPT-3 shows that scale alone can produce general-purpose language models.

2022: Release of ChatGPT. Less a technical breakthrough than an interface breakthrough—AI enters public consciousness. Diffusion models (Stable Diffusion, DALL·E 2, and Midjourney) show that creative work is also within AI’s domain.

2023: The AI boom begins in earnest. Tool use, agents, and multimodal models make systems practically useful.

2024: OpenAI’s o1 is released. Reasoning becomes explicit, and inference-time scaling becomes a first-class capability.

2025: DeepSeek’s R1 brings that style of reasoning to an open-weight model, causing the world (including China) to suddenly pay attention to Chinese AI. Open-weight models (including DeepSeek and other Chinese models) and agentic systems proliferate. AI becomes less magical, and reliably useful.

Things to Point Out

Google’s Early Dominance

I like my crude visual timeline because one thing it demonstrates (in my selected events) is just how dominant Google in particular was at the beginning of the decade. Multiple people at the company have laughed rather wryly with me about the fact that Google published the Transformer paper that later made OpenAI’s accomplishments possible.

OpenAI Seizing the Crown

Some Google executives I’ve spoken to wonder whether they should have published the paper openly at all. Either way, the company did comparatively little with the technology in consumer-facing products, allowing OpenAI to create GPT-3 in 2020 and then capture the public imagination in 2022 with ChatGPT.

Diffusion in the Last Few Years

Although I specifically called out DeepSeek in 2025, the reality is that AI has diffused across far more players than any timeline can show.

I could also have included Claude Code (and thus Anthropic) in 2025. But the point is that the number of players worth paying attention to has exploded, especially in the Chinese market.

Zhipu and MiniMax are both launching IPOs on the Hong Kong stock market. Cursor’s proprietary model seems to be using Alibaba’s Qwen. In hardware, China has been pushing its domestic semiconductor capabilities, with the government mandating 50% domestic equipment use for chipmakers (perhaps allowing us, over time, to talk about more than just Nvidia).

In the US market, Gemini 3 recently seized headlines (bringing Google “back,” not that it was ever gone). Nvidia acquired Groq’s assets in a $20B deal, a sign that the hardware race continues here too.

From Relatively Unknown… to the Center of the Economy… to Just Another Technology

AI has become a larger and larger portion of the total economy. This is obvious from the US stock market, but it’s more than that.

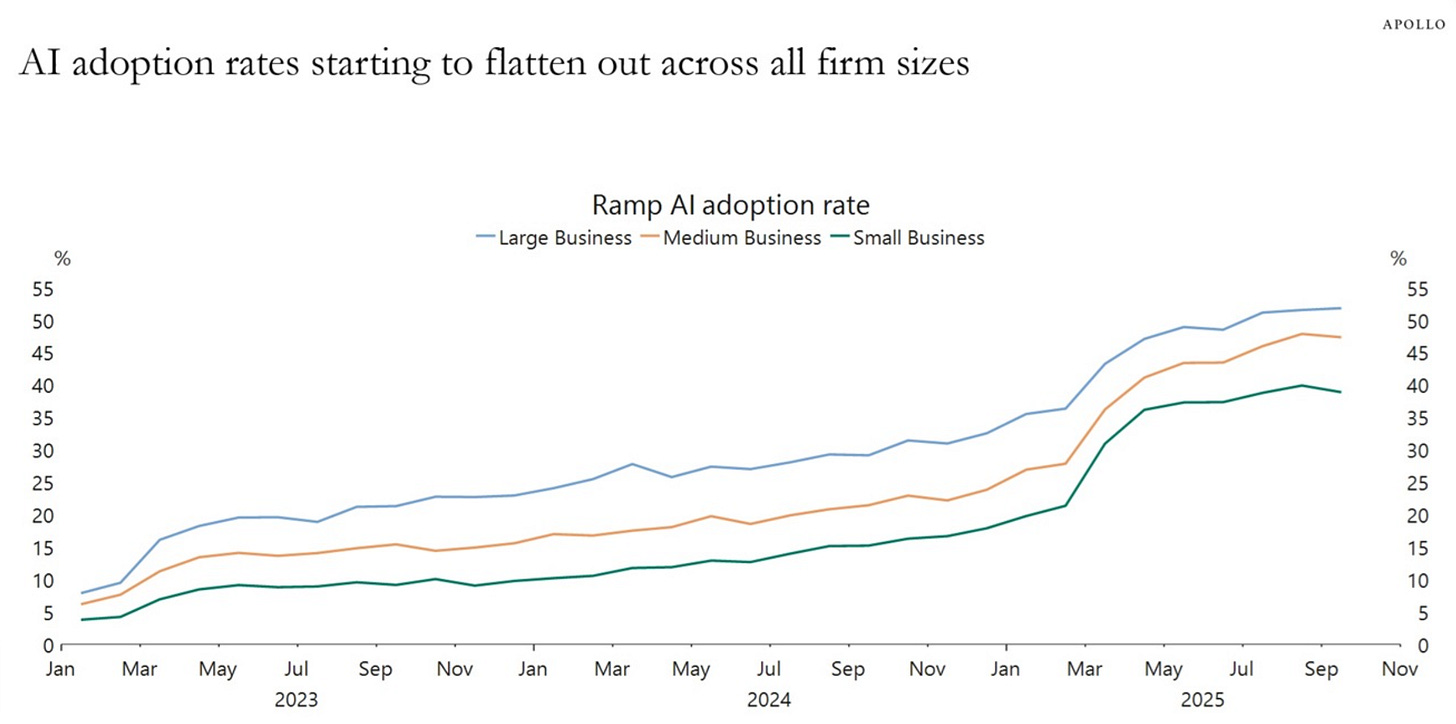

Even though AI adoption rates across the economy are starting to level out, we are seeing more and more “boring innovation.” AI has been integrated into companies at a shocking pace, much of it within 2025 alone.

I made this point in my last post, but the idea is essentially that AI will diffuse further into the economy in ways that don’t warrant headlines. It will just be as ubiquitous as internet use or electricity.

It’s just a technology being used, not something that will suddenly exterminate humanity, bring about post-scarcity, or trigger any other sci-fi AI takeoff scenario.

What’s Ahead?

I’ve been predicting for a long time that “Most AI Startups are Doomed.” Part of that prediction, of course, is boring. Most startups die and are doomed.

However, the real question is whether or not we will see some kind of enduring advantage for, say, AI model companies. Google suddenly causing a “Code Red” at OpenAI is just another day in AI headlines. The next time OpenAI or one of the Chinese companies releases its next frontier model, it’ll suddenly be “Code Red” at every other company.

Ultimately, without a true barrier to entry, most AI companies will not enjoy some amazing future in which they dominate. ChatGPT may make OpenAI a strong consumer brand. Anthropic has a pretty good API business with a focus on coding. But as the IPOs of Chinese AI companies have shown, there is actually less revenue in these businesses than you’d think, and certainly far less earning potential. They all have roughly the same models, data, and compute.

Nevertheless, I think the boring is exciting. The internet’s first generation was “exciting,” but much of its potential was realized later, quietly changing every aspect of our lives. After all, a ’90s kid would recognize the early 2000s: it was largely the internet of the 1990s, with less hype and more evolution.

A ’90s kid would be lost in a smartphone-driven internet with remote work and AI (which spread on the back of a ubiquitous internet). Not to mention ride-hailing, food and grocery delivery, e-tickets for everything, Apple Pay/Google Pay/Alipay… Yet somehow, in the popular conception, much of the 2000-2020 period just “wasn’t that significant” and “didn’t add that much productivity,” compared with the heyday of press attention on the internet as a technology.

Other News

If you haven’t had a chance, take a listen to my podcast with Grace Shao on AI Proem. It was a great conversation on Chinese AI, society and AI, chips, healthcare, and all sorts of fascinating areas!

Separately, my book is available in bookshops all over the US, but especially in the Bay Area. Check to see if it might be in your area!

This is a solid retrospective. What works especially well is that you track capability shifts without collapsing them into hype cycles. The throughline from narrow models to systems that can plan and act is clear.

One frame I’d add looking forward: the biggest change over the last ten years isn’t just better models; it’s where authority moved.

Tool invocation is the threshold.

When AI moved from analysis to action, invoking tools and changing state, the question stopped being “how capable is the model?” and became “how governable is the system?”

When artifacts become cheap and execution becomes delegable, output stops being evidence of understanding. Organizations can look productive while becoming increasingly illegible. That’s not an intelligence problem; it’s a control problem.

Output got cheap, so we added standards. Information got cheap, so we added traceability. Execution is getting cheap, so we need enforceable delegation.

That’s the Illegibility Crisis: leaders remain accountable for outcomes they can no longer clearly see, explain, or reconstruct once decision chains vanish.

More on that mechanism:

https://leanpub.com/illegibility_crisis

Collaboration with agents only holds if authority is scoped, revocable, and auditable. Otherwise it turns into speed without control.

I’ve been working on an open spec (DAS-1) as a thin control plane between agentic systems and actionable governance:

https://github.com/forgedculture/das-1

Artifacts are cheap. Judgment is scarce.

The frontier models feel like a commodity layer, while the real leverage is moving into data, distribution, and deeply‑embedded workflows.