In the past year, regulators have gotten quite excited about regulating AI. The EU AI Act. The US Executive Order on AI. And, of course, there have been numerous other proposals that have been bandied about by politicians around the world with about as much understanding of AI as they have of the Internet.

There have been a few broad rationales for why AI needs to be regulated:

1) AI has the potential for mass destruction, similar to a chemical/biological/nuclear weapon (or maybe just slightly stepped down from it)

2) AI can cause societal chaos, from mass job loss, mass disinformation, or mass discrimination/inequality

3) AI is an existential risk to humanity and could follow the plot of Terminator or turn us all into paperclips

1) has usually been the impetus for some provisions, like those in the AI Executive Order, for tracking large training runs. 2) has spurred many lawmakers to want to impose hard limits and often ill-conceived restrictions on how one uses AI. 3) is typically the province of either bad-faith actors who have their own reasons to monopolize AI (by encouraging regulators to close the door to new players) or people who have little understanding of what modern AI actually is.

As one can probably tell from how I’m writing about them, I think all these rationales are wrongheaded. More precisely, I think all of them have a seed of truth, but they miss the overall point of what is actually unique about AI versus any other technology.

Actual Risks: Malicious vs. Accidental

One of the big distinctions one has to make is between the risks from malicious use of AI vs. accidental or unintentional misuse of AI. There is a pretty big difference between the impact of one vs. the other.

Marginal malice

Overall, I’ll assert that the malicious use of AI is merely incremental. Why? Most malicious uses of AI on its own are essentially just slightly more advanced versions of what’s already out there.

Mass cyberattacks. AI can make these attacks more adaptive and even easier to automate. However, as many corporate entities (and anyone who has left MongoDB’s default port open and immediately been hit with ransomware) can attest, AI wasn’t exactly needed to make this a widespread problem.

Propaganda, disinformation, state-sponsored destabilization and unrest. Ah, the non-stop discourse about Russian troll farms leading up to and immediately after the 2016 US election. Of course, they never disappeared or even materially scaled back their activity. And now there’s also TikTok. These things occurred before this generation of AI, and long before that, too: Radio Free Europe wasn’t meant to be fair and balanced, after all. AI may help intensify these issues with deepfakes and easier content generation, but it’s merely a tool that further amplifies what’s already there.

Advertising/marketing to vulnerable populations, scams, and elder abuse. Certain ad industry friends may bristle at my lumping them in with “I’m calling from Microsoft and need you to buy 500 Google Play gift cards” scams that often target the elderly (or, more broadly, putting them in a section with Russian troll farms), but we’re painting with broad strokes. This is just the more nakedly commercial version of the above. After all, putting sugary, unhealthy cereals specifically at children’s eye level is not exactly an unambiguously fair or moral way to target consumers. However, the fact that most people already know exactly what I’m talking about shows that this is going on, even if AI can make it incrementally more personalized.

The reason AI isn’t that special here is that we were already automating many of these things.

Spycraft, HUMINT, and psychological manipulation of the masses were the “handcrafted” version. More recently, we’ve used “data science” (remember when that was a buzzword for a few years?) and computerized automation to handle these. If we rewound all the way back to the pre-computer era and suddenly introduced AI, yes, it’d be a big deal.

At this point, however, AI usually adds relatively little at the margin to the existing revolution in automated attacks, ad campaigns, and the free-for-all of the internet information ecosystem. These greater impacts are real, just overblown.

Accidental atrocities

For that same reason, accidental misuse tends to be much more severe. In many areas where AI has suddenly made automation viable, the benefits are entirely new. So are the damage and the potential for misuse.

Recruiting had some tools, but it was mostly manual because keywords and other easy filters are famously poor at screening candidates. Admittedly, humans aren’t good at it either, but they’re (somewhat) better than nothing. Ask them how they screen, though, and it becomes fairly clear that they have no idea, which doesn’t make automating it very promising. AI, however, theoretically changes that by being able to take in a gestalt and, more importantly, handle variation (and, in reality, joint probabilities) that typical “dumb” algorithms cannot.

That’s why, all of a sudden, AI enabled things that static algorithms and simpler statistical models could not:

Self-driving cars: new roads and situations are novel, after all

Construction: no building is ever exactly as drawn, no room is ever truly square

Diagnostics: the dizzying complexity of health information makes traditional decision trees completely fail, and one needs a little “heuristic” fuzziness to make good decisions

These are all areas we actually invest in at Creative Ventures, and ones I believe in. However, while these are good things, they are all enabled by adaptive, self-learning models that can deal with variation… or at least appear to.

In most of the cases where the risk is greatest, it isn’t physically manifested (as it is with self-driving cars), even though self-driving cars are a genuinely hairy and dangerous problem. The thing is, most people know they’re dangerous. The greater risks come from hidden applications, where individuals with sudden, easy access to AI and little oversight throw it at problems without understanding its limitations and failure modes.

A lawyer used AI to help with a court filing, and it cited fake cases. ChatGPT misdiagnosed 8 out of 10 pediatric case studies (and you know some parents have used it for exactly that). Despite Amazon’s experience (it scrapped an AI recruiting tool that turned out to be biased against women), companies are (reportedly, and probably) still using AI for hiring and firing decisions.

I can’t even list all the misuses. They are limited only by the imagination (and misadventures) of distributed, individual actors, and are as numerous as the thoughts in the collective minds of humanity.

This stuff is boring next to the Terminator movies or Mission: Impossible – Dead Reckoning Part One, which helped inspire the AI Executive Order (I’m kidding, mostly). However, the real danger is that it’s impossible to track, and we suddenly have the equivalent of children running with scissors, using AI without understanding its limitations.

But what about killer robots?

If you notice, whether malicious or accidental, my outline of real risks involves humans. So, what about our GPU-hungry overlords, who are obsessed with paperclips in our darkest imaginations?

The UK’s entire AI Safety Summit was about it, though, as was transparent to most, mainly because it needed some kind of reason for people to pay attention. Elon Musk seems to talk about it constantly. Tech leaders talk about it and write scary letters about it, all while they themselves (Elon Musk included) plow ahead with development, hoping the letters slow down their competitors.

I admit, it’s actually quite hard for me to take existential risk at face value, given how unserious most of its promoters are. By unserious, I sometimes mean the people themselves, but more often that the people (who can be serious) don’t seem to take their own arguments seriously.

But let’s engage in good faith with doomerism for a moment. Why do I think it’s flawed?

This isn’t a formal taxonomy of doomerism (again, I’ll restrain myself from a long rant about there not really being one, given the incoherence and internal contradictions of most arguments), but I’ll break it down into two categories:

Hyper-intelligent, “singularity” AI that surpasses our control suddenly

Dumb AI that accidentally wipes us all out because it misinterpreted some command

The first is the plot of Terminator and its Skynet AI villain (and of most Hollywood and science-fiction AI horror, including Dune). The second is instrumental convergence (i.e., the Paperclip Problem).

Hyper-intelligent but all-too-human?

One of my biggest issues with the hyper-intelligent but hyper-aggressive AI is its anthropomorphism.

AI isn’t human. It doesn’t have human urges. It doesn’t have our anger, jealousy, fear… or even the instinct for self-preservation.

Almost every science-fiction story or movie with an out-of-control AI needs some human trait built into its artificial intelligence. Our actual AIs, at present, have none. They are basically high-dimensional, fancy curve-fitting algorithms. Generative AI then samples from that curve (a learned statistical distribution).

It’s an oversimplification, but not enough of one to undercut the point: a curve-fitting algorithm does not have a motive.
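To make the curve-fitting point concrete, here is a deliberately tiny Python sketch. It assumes a one-dimensional normal distribution as a stand-in for the vastly higher-dimensional distributions real models learn, and the function names and sample data are hypothetical. “Training” estimates two numbers from data; “generation” draws new values from the fitted distribution. Nowhere in it is there room for a motive.

```python
import random
import statistics


def fit_gaussian(data: list[float]) -> tuple[float, float]:
    """'Training' (illustrative): estimate the mean and sample standard
    deviation of a normal distribution from the data."""
    mu = statistics.fmean(data)
    sigma = statistics.stdev(data)
    return mu, sigma


def generate(mu: float, sigma: float, n: int, seed: int = 0) -> list[float]:
    """'Generation' (illustrative): draw n new samples from the fitted
    distribution. A fixed seed makes the output reproducible."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) for _ in range(n)]


if __name__ == "__main__":
    # Hypothetical training data (e.g., heights in cm).
    training_data = [162.0, 170.5, 158.2, 175.1, 168.4, 171.9, 165.3]
    mu, sigma = fit_gaussian(training_data)
    print(f"learned mu={mu:.1f}, sigma={sigma:.1f}")
    # New "content" is just values pulled out of the learned distribution.
    print(generate(mu, sigma, 3))
```

Real generative models fit far richer distributions with far more parameters, but the basic shape of the operation is the same: fit, then sample.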

Could we have human-like AI with emotions and urges? Yes. One science-fiction series (I’ll avoid naming it to avoid spoilers) has AIs that are actually digitized brains of human infants, in theory replicating all current and future functions (though I think developmental biology would object to how much of that information is “brain-scannable” versus developed through DNA, development, and puberty). Another example of this “bottom-up AI,” from an entirely separate series, is Halo, where Cortana and other AIs are actually scans of humans. In either case, we can at least understand why an AI could be vengeful, resentful, or scared for its life. It is human.

Another possibility: genetic algorithms, shaped first by random and then by deliberate selection pressures, could end up with some residual “self-preservation” somewhere within their internal, implicit policy. Such systems seem much less likely to develop complex human motivations, though. All genetic algorithms today have much more constrained utility functions, but at least the idea isn’t entirely ridiculous or impossible.

Regardless, none of our most advanced AIs are generated either way: not at all in the first case, and, in the second, not in a way that could give rise to this kind of emergent behavior, at least for now.

Dumb, but smart enough to be a threat

Humans are scary. Like, actually, we have been and still are the cause of mass extinctions. Maybe all this stuff about extinction is just projection—kidding… I think.

If you want science fiction about that, as opposed to the typical assumption that humanity is the average of all species (as in most fantasy and science-fiction RPGs, which have a hard time imagining our normal as remarkable), go find HFY-type stories.

So, if you want to propose that an AI is dangerous and out of control, yet somehow too dumb to understand a specific command while smart enough to outclass humans, I agree with Marc Andreessen: that entire construct seems untenable.

That isn’t to say something dumb can’t be dangerous. An out-of-control excavator does not have to be smart to be dangerous. One can also picture a horribly designed vegetable-chopping humanoid robot that suddenly decides the family members are carrots. That’s also a very dumb, yet potentially very dangerous, robot/AI.

However, that’s dangerous to individual people. To be societally dangerous, or, more to the point, existentially dangerous to humanity, we need something that can be dangerous to us all, despite all the weapons humanity wields, all the military strategy it has developed, and the ruthlessness humans exhibit when necessary.

It’s really difficult to even come up with a scenario where that’s possible without some internal contradictions.

Killer robots, redux

So, as a whole, killer robots seem rather far-fetched. But what if we turn back to humans again? What about killer robots that are designed to be killer robots? I mean, of course, military applications of AI, drones, and robotics.

That’s a different story.

My objection that AI has no motivation to kill? No longer an issue. I would assume there would be some failsafes to keep killer AI/robots from turning on their creators, but at least such a system starts with a concrete utility function and a motive for killing people.

I still don’t think it’s an existential threat.

We haven’t all bombed each other into nuclear armageddon, so we appear to have some empirical evidence that state actors have a level of strategic planning and forethought that would preclude most careless, self-destructive applications of killer AI.

This is still the class of “dumb AI” we talked about, just one with a better reason to kill. It doesn’t make humans any less scary or any less able to fight it.

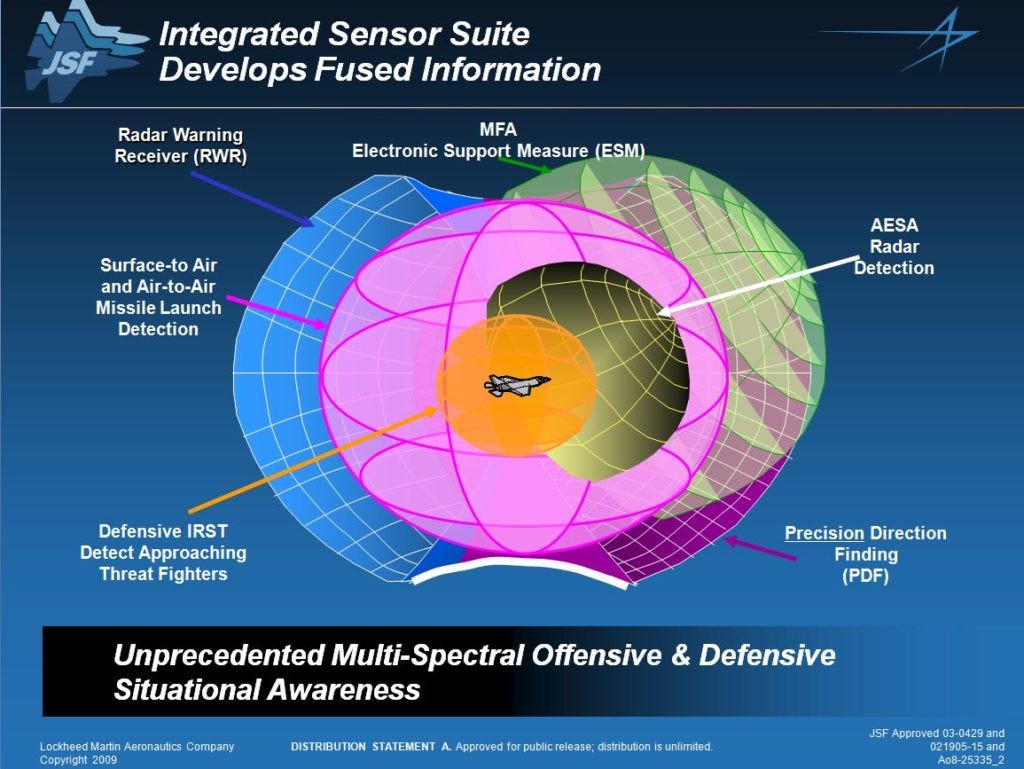

However, this does bring us to the last class of deliberate, malicious risk, which I didn’t cover earlier because it’s a fairly different category: AI smart weapons, drones, and other systems. Thematically, it is still a “marginal malice” kind of thing; we already have smart weapons and all of those automation tools. What makes the F-35 so dangerous is that it’s a big flying computer, after all.

Warfare is a different kind of marginal malice, given it’s meant to exert hard power and, ultimately, kill. Even marginal improvements are worth noting.

That all being said, it still falls into our framework. It’s human-driven, which is what makes it one of the more dangerous (and real, rather than imaginary) risks. And we already have plenty of tools that get us most of the way to where AI would take us. Hyper-coordinated AI drone swarms that can decimate conventional military forces have long been a future scenario for military thinkers, but Ukraine and Russia are doing just fine with deadly drones that have limited AI.

AI is exciting. AI is dangerous. But it’s just another tool. The thing to be scared of is the upright ape that has the motive to wield those tools.