Why ChatGPT Strawberry o1 (and Other LLMs) Will Never Be Good at Diagnosis

“Connectionist” vs. Knowledge-Based AI

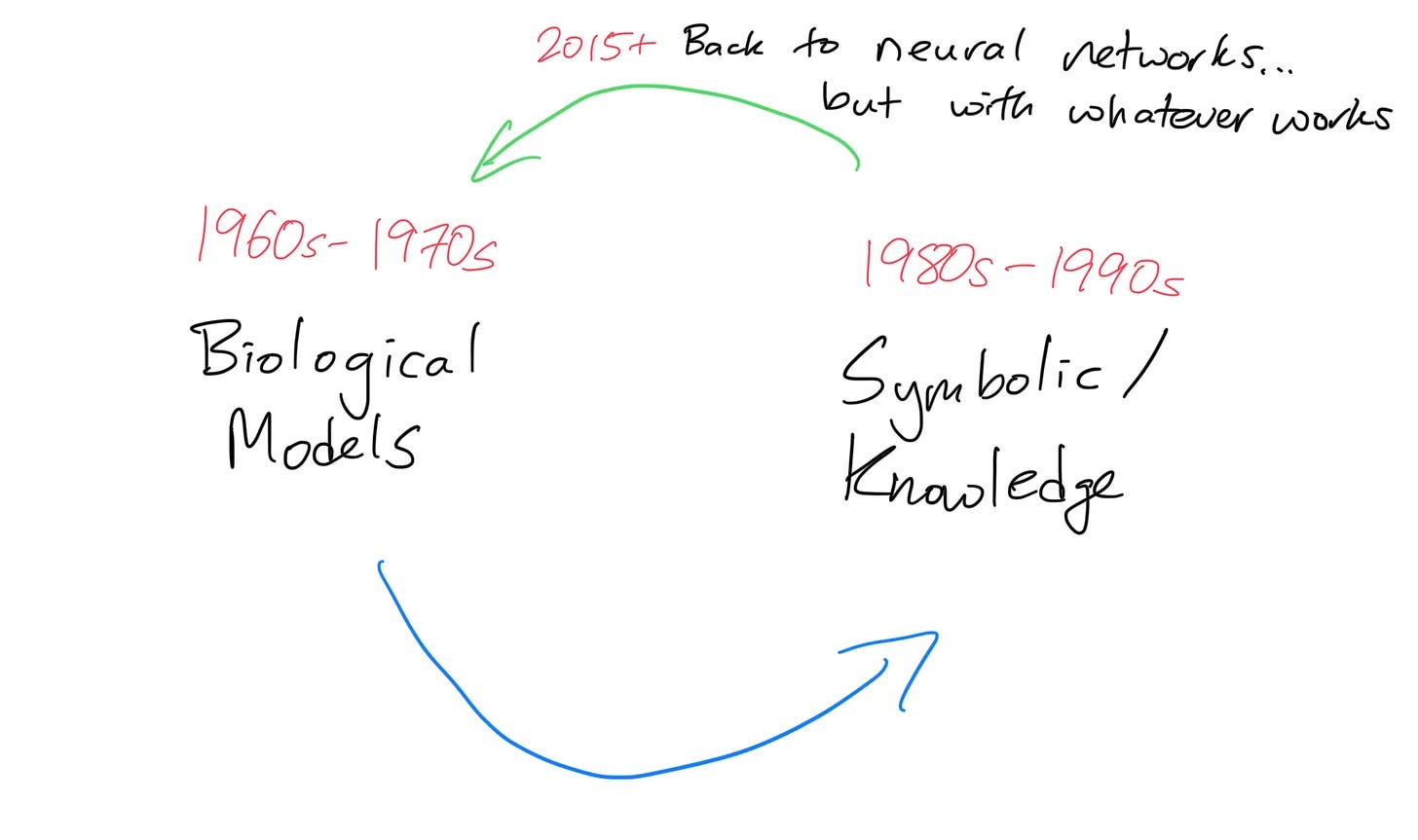

We just discussed the difference between “connectionist” (neural network) models and knowledge-based (symbolic) AI, and there’s already a good case study of it.

Sergei, who writes a great Substack called AI Health Uncut, is an AI researcher, entrepreneur, and all-around provocative guy (in a good way). True to brand, he wrote a post titled “Selling The New o1 'Strawberry' to Healthcare Is a Crime, No Matter What OpenAI Says.” The subhead is more descriptive: “4o is confidently misdiagnosing, while o1 is not only confidently misdiagnosing but also cleverly rationalizing the error.”

In it, he shows that OpenAI’s new Strawberry o1 release of ChatGPT suffers from quite a bit of instability (inconsistent diagnoses) and hallucination (wrong diagnoses backed by plenty of convincing-sounding reasons). He also informally alleges that some of the data was cherry-picked.

He guest-posted it on Devansh’s (also great) Artificial Intelligence Made Simple, where Devansh wrote a follow-up that included responses from someone at OpenAI. Devansh’s conclusion: he doesn’t think the medical diagnosis results were “cherry-picked” with malice, but they were certainly misleading and careless.

You should definitely read the posts themselves: they’re fascinating, and I’m not going to go into a blow-by-blow here.

Instead, let’s talk about why LLMs themselves will never be great at this sort of thing.

A quick refresher

Knowledge-based AI is what helped push the field into an “AI winter,” through the failure of expert systems, LISP machines, and other distinctly “1980s” aspects of AI.

However, as I mentioned in my history post, perceptrons (and what would eventually become neural networks) actually came first.

The loss of interest is widely attributed to Minsky, who co-wrote a textbook on the subject with Seymour Papert (yes, seriously). That textbook, Perceptrons, was published in 1969 and revised with an updated foreword in 1987, in which Minsky not quite takes credit for killing interest in neural networks:

It has sometimes been suggested that the “pessimism” of our book was responsible for the fact that connectionism was in a relative eclipse until recent research broke through the limitations that we had purported to establish. Indeed, this book has been described as having been intended to demonstrate that perceptrons (and all other network machines) are too limited to deserve further attention. Certainly, many of the best researchers turned away from network machines for quite some time, but present-day connectionists who regard that as regrettable have failed to understand the place at which they stand in history. As we said earlier, it seems to us that the effect of Perceptrons was not simply to interrupt a healthy line of research. That redirection of concern was no arbitrary diversion; it was a necessary interlude. To make further progress, connectionists would have to take time off and develop adequate ideas about the representation of knowledge.

To oversimplify: he doesn’t quite come out and say he did it, but interest certainly waned significantly. His core criticism, however, is one he would hold for the rest of his life: “connectionists” don’t seem to care about knowledge, which, in his view, is core to intelligence.

Let’s go even further. In a fascinating 1998 interview about Stanley Kubrick’s 2001: A Space Odyssey, Minsky was asked about the state of AI:

Stork: Could you give a very broad overview of the techniques in AI?

Minsky: There are three basic approaches to AI: Case-based, rule-based, and connectionist reasoning.

The basic idea in case-based, or CBR, is that the program has stored problems and solutions. Then, when a new problem comes up, the program tries to find a similar problem in its database by finding analogous aspects between the problems. The problem is that it is very difficult to know which aspects from one problem should match which ones in any candidate problem in the other, especially if some of the features are absent.

In rule-based, or expert systems, the programmer enters a large number of rules. The problem here is that you cannot anticipate every possible input. It is extremely tricky to be sure you have rules that will cover everything. Thus these systems often break down when some problems are presented; they are very "brittle."

Connectionists use learning rules in big networks of simple components — loosely inspired by nerves in a brain. Connectionists take pride in not understanding how a network solves a problem.

Stork: Is that really so bad? After all, the understanding is at a higher, more abstract level of the learning rule and general notions of network architectures.

Minsky: Yes, it is so bad. If you just have a single problem to solve, then fine, go ahead and use a neural network. But if you want to do science and understand how to choose architectures, or how to go to a new problem, you have to understand what different architectures can and cannot do. Connectionist research on this problem leaves much to be desired.

I used a large excerpt here because I think it’s instructive in light of modern LLMs, which are a form of artificial neural network (the transformers they are built on are neural networks).

Minsky is a big proponent of methods that have knowledge at their core. Case-based reasoning is essentially a big lookup table of past cases. Rule-based systems use symbolic relationships, formal logic, and programmed knowledge to derive answers. Both are transparent and deterministic, and in principle they are right whenever the encoded knowledge is right.
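To make the two knowledge-based approaches concrete, here is a minimal Python sketch built on an entirely hypothetical toy knowledge base: `symptom_a`, `condition_x`, and the other names are placeholders, not medical knowledge. The rule-based function fires the first rule whose conditions are all present and returns that rule as its explanation; the case-based function returns the diagnosis of the most similar stored case, using Jaccard similarity as one assumed similarity measure (an illustrative choice, not the only one). Both are deterministic, and both return `None` rather than guess when their knowledge doesn’t cover the input.

```python
from typing import Optional

# Hypothetical, made-up knowledge base for illustration only (not medical knowledge).
# Each rule: if every finding in the set is present, conclude the diagnosis.
RULES: list[tuple[frozenset[str], str]] = [
    (frozenset({"symptom_a", "symptom_b"}), "condition_x"),
    (frozenset({"symptom_c"}), "condition_y"),
]

# Hypothetical stored cases for case-based reasoning: past findings -> past diagnosis.
CASES: list[tuple[frozenset[str], str]] = [
    (frozenset({"symptom_a", "symptom_d"}), "condition_z"),
]


def rule_based(findings: set[str]) -> Optional[tuple[str, frozenset[str]]]:
    """Return the first conclusion whose conditions are all met, plus the rule that fired."""
    for conditions, conclusion in RULES:
        if conditions <= findings:  # every required finding is present
            return conclusion, conditions  # the fired rule doubles as the explanation
    return None  # no rule covers this input: abstain instead of guessing


def case_based(findings: set[str], min_similarity: float = 0.5) -> Optional[str]:
    """Return the diagnosis of the most similar stored case, if it is similar enough."""
    best_score, best_label = 0.0, None
    for past_findings, label in CASES:
        # Jaccard similarity: shared findings / all findings mentioned in either case
        score = len(findings & past_findings) / len(findings | past_findings)
        if score > best_score:
            best_score, best_label = score, label
    return best_label if best_score >= min_similarity else None


print(rule_based({"symptom_a", "symptom_b", "symptom_e"}))  # condition_x, plus the rule that fired
print(rule_based({"symptom_e"}))  # no rule covers this input: None
print(case_based({"symptom_a", "symptom_d"}))  # exact match with the stored case: condition_z
print(case_based({"symptom_e"}))  # nothing similar enough: None
```

Note that the sketch sidesteps the hard parts Minsky describes. Every “aspect” here is already a named finding, so deciding which features correspond between cases is trivial, and the rule base only covers what someone thought to write down, which is exactly the brittleness he mentions.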

This is precisely what Strawberry o1, an LLM, is accused of not being.

As Minsky says, “Connectionists take pride in not understanding how a network solves a problem.” His disdain is palpable, and he overstates his case a bit, but it is true that it is extraordinarily difficult to understand why a neural network does what it does, and nearly impossible to put hard guarantees on its behavior.

The hallucinations are the point

LLMs are flexible precisely because they don’t embed knowledge directly. Knowledge gets turned into weights, and the LLM is a big, fancy autocomplete: it doesn’t actually reason or think about the problem.

OpenAI uses evocative language about Strawberry o1 “thinking” and spending longer on inference (which has business implications: inference costs go up, and more compute yields even better results), but it’s not true reasoning or symbolic logic.

Expert systems failed precisely because of their inflexibility. They could not generalize or tackle novel problems well, because they often lacked the knowledge (every field keeps growing and learning) to give a concrete answer. But in fields where “approximately right” is not good enough, like law or medicine, this is not necessarily a bad thing: a system that admits it has no answer is safer than one that confidently guesses.

LLMs, on the other hand, will always give an answer, because the answer only needs to sound convincing. That is literally true: an LLM is a big predictor of the next set of tokens (roughly, words). It isn’t actually trying to reason its way to an answer, which is part of why having LLMs call tools or plugins to get concrete answers has been an interesting area. Unfortunately, plugins and the GPT Store have not exactly been rip-roaring successes.
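To illustrate the “always gives an answer” point, here is a minimal Python sketch of the last step of text generation, under heavy simplifying assumptions: a made-up table of scores (logits) for three hypothetical candidate tokens stands in for a real model’s scores over its whole vocabulary, and the next token is sampled from the softmax of those scores at a chosen temperature. Real LLMs compute the scores with a transformer and often use more elaborate decoding strategies; this only shows the general shape of the sampling step.

```python
import math
import random

# Hypothetical next-token scores (logits) a model might assign after a prompt such as
# "The diagnosis is". The tokens and numbers are made up for illustration.
LOGITS = {"condition_x": 2.0, "condition_y": 1.6, "condition_z": 0.5}


def softmax(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Convert raw scores into a probability distribution over tokens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def next_token(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sample one token; every token has nonzero probability, so one is always returned."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


rng = random.Random(0)
answers = [next_token(LOGITS, temperature=1.0, rng=rng) for _ in range(10)]
print(answers)  # the same "prompt" sampled 10 times; answers can differ between draws
```

Two things fall out of even this toy. First, softmax gives every candidate a nonzero probability, so sampling always returns some token: nothing in this step checks whether the result is true, and a real model only declines to answer if a phrase like “I don’t know” is itself a likely continuation. Second, at a nonzero temperature the same prompt can produce different answers on different runs, one plausible source of the kind of instability Sergei describes.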

Will we go back to knowledge-based AI?

Going back to my circle diagram, some fields require harder guarantees and more transparency than LLMs are naturally designed to give. While we can contort LLMs into something they aren’t meant to be, it is likely better to find other techniques and tools that meet those fields’ requirements more naturally.

So maybe, at some point, we will go back to symbolic/knowledge-based AI (or even LISP machines), and they won’t just be relics of the 1980s, just as we came back to artificial neural networks and made those work.

If nothing else, this tells us that we aren’t done developing AI, or even picking its lowest-hanging fruit.