AI Reasoning: What Is It?

The Significant Implications of Test-Time Compute

I was tempted to put quotes around “reasoning” from the start of this article and keep them throughout. I find the term “reasoning” frustrating because it easily misleads a lay public that already has an understanding of what reasoning means.

Reasoning models do not reason the way humans do, and human reasoning is, so far, the only kind of reasoning we have a conception of. Nathan Lambert has perhaps convinced me not to say that it isn’t reasoning at all. I also think it’s fair to point out that we still have a lot to learn about how humans reason, but insofar as we can tell, this process (called Chain of Thought, or CoT) isn’t it.

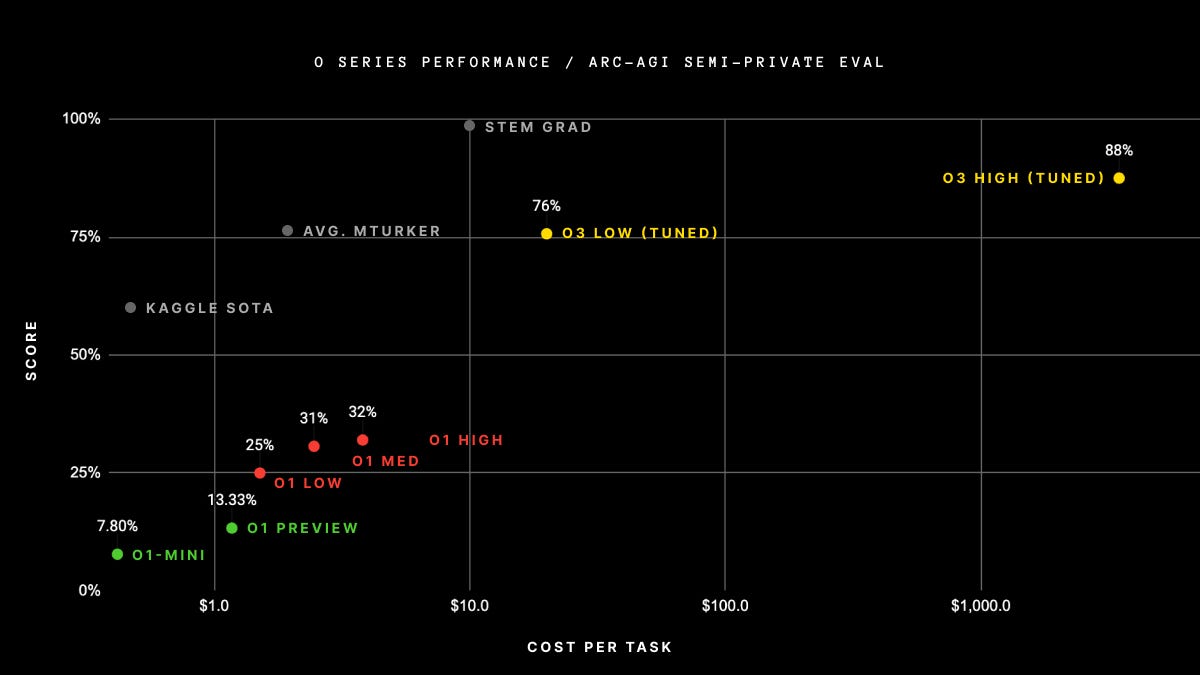

Even OpenAI’s o3, its latest reasoning model and the successor to o1 (released in 2024), still consistently fails at fairly simple problems that a human wouldn’t, even in ARC-AGI itself. That is despite o3 capturing headlines with its breakthrough ARC-AGI scores (though, as Gary Marcus points out well, ARC-AGI is not a test for AGI).

So what is it, and does it matter at all?

Despite my distaste for the “reasoning” terminology, a distaste that extends to the weakening definition of AGI (in OpenAI’s case, likely because its agreement with Microsoft used to state that once AGI was achieved, Microsoft would no longer be able to use OpenAI’s technology) and to the casual tossing about of ASI (artificial superintelligence), this is still a big deal, for three reasons:

Scales Capabilities: It continues AI’s breakneck pace of improvement and puts to bed the 2024 narrative that “AI scaling is over.”

Supercharges Resource Requirements: It vastly increases compute, cost, and power requirements (in a way, these are all the same thing on a variable cost level).

Creates a New Model Modality: It opens up even more differentiation among types of models. Beyond “base” models and small/specialized models, we now have “reasoning” models.

What is CoT exactly?

Chain of Thought is aptly named. In the 2022 Google paper that introduced the concept, the researchers describe how LLMs can substantially improve their performance by writing out intermediate reasoning steps.

CoT is essentially an LLM talking itself through a problem. As the paper describes, this is helpful because it:

Substantially increases performance

Doesn’t require significant changes in model architectures (or, often, any)

Is auditable, since the intermediate output is human-readable

Of course, this also means that, if you wanted to, you could replicate the results of these models yourself by prompting models this way. The magic of reasoning models is that they don’t require repeated human intervention to make it work.
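To make the “prompt it this way yourself” point concrete, here is a minimal Python sketch that builds a few-shot chain-of-thought prompt in the style the 2022 paper describes: each worked example shows its intermediate steps before the answer, nudging the model to do the same. The exemplar problem, the `build_cot_prompt` and `build_direct_prompt` helpers, and the `Q:`/`A:` layout are hypothetical choices for illustration; no real model API is called.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """A worked example: a question, its intermediate steps, and the final answer."""
    question: str
    steps: list[str]
    answer: str


def build_direct_prompt(question: str) -> str:
    """Standard prompting: ask for the answer with no intermediate steps."""
    return f"Q: {question}\nA:"


def build_cot_prompt(exemplars: list[Exemplar], question: str) -> str:
    """Few-shot chain-of-thought prompting: each exemplar spells out its
    reasoning before the answer, so the model tends to imitate that pattern."""
    blocks = []
    for ex in exemplars:
        reasoning = " ".join(ex.steps)
        blocks.append(f"Q: {ex.question}\nA: {reasoning} The answer is {ex.answer}.")
    # The new question is left open so the model writes its own steps.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Hypothetical exemplar, written for illustration only.
example = Exemplar(
    question="A shelf holds 4 boxes with 6 books each. 5 books are removed. How many remain?",
    steps=["4 boxes of 6 books is 4 * 6 = 24 books.", "24 - 5 = 19."],
    answer="19",
)

print(build_cot_prompt([example], "A bag has 3 rows of 7 marbles. 2 are lost. How many are left?"))
```

Sending the direct prompt and the CoT prompt to the same model is the simplest way to see the difference. Reasoning models are trained to produce these intermediate steps on their own, so the exemplars become unnecessary.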

Scaling Performance

CoT has also been described as part of a broader category called “test-time compute” (or, interchangeably, “inference-time compute”). Previously, a model’s costs were dictated mostly by its size, which meant upfront training. Performance, then, was dictated by how many GPUs you could throw at training a bigger model with more parameters on more data.

We still, of course, had inference costs. Each answer, or more precisely each token within an answer, costs compute time (and money), but that could be thought of as a roughly fixed “per answer” cost.

Now, it doesn’t have to be fixed. We can scale inference (“test time” meaning when the model is being run or evaluated, rather than trained). Why? Because reasoning models generate a huge number of tokens that are never shown to the user. You can generate a lot of these tokens, and you can keep generating more to improve performance.

This adds an entirely new dimension to scaling. Instead of adding compute only upfront, you can now scale compute while the model is trying to solve a problem.

The way this has been popularly described is that the models can “think” for longer before responding.

Of course, compute is cost.
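One simple, well-known way to turn extra inference compute into accuracy is self-consistency: sample several independent reasoning chains and take a majority vote on their final answers. OpenAI hasn’t published exactly how o1 or o3 spend their test-time compute, so treat this as an illustrative stand-in, not their method. The Python sketch below assumes each chain is independently correct with probability `p`, pessimistically treats every wrong chain as voting for the same wrong answer, and uses a hypothetical `TOKENS_PER_CHAIN` to show the token bill alongside the accuracy.

```python
from collections import Counter
from math import comb


def majority_vote(answers: list[str]) -> str:
    """Return the most common final answer among sampled chains
    (ties go to the answer seen first)."""
    return Counter(answers).most_common(1)[0][0]


def majority_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent chains is correct,
    when each chain is correct with probability p and all wrong chains
    are assumed to vote for the same wrong answer."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


TOKENS_PER_CHAIN = 2_000  # hypothetical length of one hidden reasoning chain

for n in (1, 3, 5, 11, 21):
    print(f"{n:>2} chains: accuracy {majority_accuracy(0.6, n):.3f}, "
          f"hidden tokens {n * TOKENS_PER_CHAIN:,}")
```

Under these assumptions, accuracy climbs from 0.6 with one chain to 0.648 with three and about 0.683 with five, while hidden tokens (and therefore cost) grow linearly with the number of chains. If `p` is below 0.5, the same vote makes things worse, so extra compute only pays off when the model is already right more often than not.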

Scaling Cost

As mentioned, o1 and CoT reasoning were a fundamental shift because they added a whole new dimension of scaling. o3 builds on that.

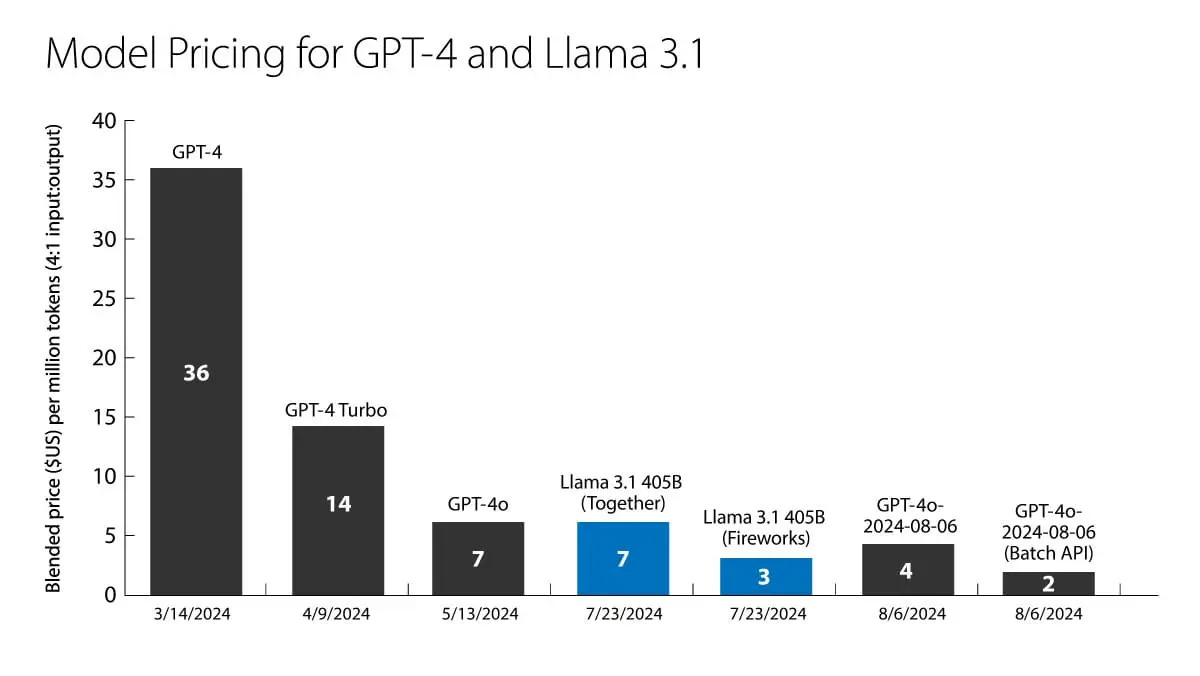

Token cost and speed have already become a battleground among model makers and the cloud providers that serve their models. Competition usually drives efficiency, and this is no different: thanks to better-trained and quantized models and new techniques, answers were getting cheaper over time, even as AI overall was getting more expensive due to scale.

This new dimension upends that, at least when it comes to each answer getting cheaper. Now inference (the answers) can itself be scaled up with tokens that are invisible to the user and don’t appear in the answer, but are used to derive a better one.

These chains can get long, and thus expensive. How expensive? OpenAI shared o3’s run data on the ARC-AGI benchmark, with a mind-boggling cost of more than $1,000 per query.

Of course, it’s an incredible result… at an incredible cost. I’d say it’s an open question which specific use cases would warrant this kind of massive price tag, especially while OpenAI is still wrestling with how to extract value (i.e., subscriptions) from users of its existing models. After all, we saw the debut of the $200/month ChatGPT Pro plan in December (which Sam Altman claims OpenAI is losing money on) and fairly open speculation about rolling out $2,000/month enterprise plans.
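The arithmetic behind per-query figures like these is simple: cost scales with samples × tokens per sample × price per token, and hidden reasoning tokens are billed even though the user never sees them. The Python sketch below uses made-up inputs (the sample counts, token counts, and the $60-per-million-token price are hypothetical, not OpenAI’s published figures for o3) purely to show how quickly the multiplication adds up.

```python
def query_cost_usd(samples: int, reasoning_tokens: int, answer_tokens: int,
                   usd_per_million_tokens: float) -> float:
    """Estimated cost of one query: every sample pays for its hidden
    reasoning tokens plus its visible answer tokens."""
    total_tokens = samples * (reasoning_tokens + answer_tokens)
    return total_tokens * usd_per_million_tokens / 1_000_000


# Hypothetical inputs for illustration only.
single = query_cost_usd(samples=1, reasoning_tokens=20_000, answer_tokens=500,
                        usd_per_million_tokens=60.0)
many = query_cost_usd(samples=1_000, reasoning_tokens=20_000, answer_tokens=500,
                      usd_per_million_tokens=60.0)
print(f"1 sample: ${single:,.2f}; 1,000 samples: ${many:,.2f}")
```

At these hypothetical rates, one chain costs about a dollar, but a thousand of them cross the $1,000 line. That is the kind of multiplication that can produce per-query costs in that range.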

We also likely won’t see power demands die down anytime soon, because, ultimately, most of compute’s variable cost (after you buy the hardware) is power. Even before CoT reasoning became a major topic of conversation, Microsoft announced a deal to reopen Three Mile Island, and Meta put out a call for proposals for up to 4 GW of new nuclear generation capacity.

It’s clear that we are seeing a scale-out of “reasoning models,” and demand for high-performance computing hardware, led by Nvidia’s, won’t die down anytime soon either. Anyone who wants to stay on the frontier will need to buy more of it and will probably have an even higher appetite for powerful chips than the already sky-high one.

Jordan Schneider at ChinaTalk even suggests that the new Biden export controls are crafted as if administration officials have been paying attention to reasoning-model news. If so, and if these models are the key to some kind of massive geopolitical strategic advantage, it’s not a bad calculus. Compute requirements for AI are only going up.

More Types of Models for Different Purposes

Everyone already knows about the flagship LLMs, such as OpenAI’s ChatGPT and Anthropic’s Claude.

However, not everyone knows that there are models for specific purposes—hundreds or even thousands of them. On Hugging Face, there are coding-specialized models, Instruct (instruction-following) models, and even roleplaying models.

Many of these are fine-tuned, distilled, or in some other way derived from flagship models. But a lot of them are also smaller, because they just don’t need as much of the vector space for areas outside their specialty. This also allows them to run on far less powerful hardware, especially at the edge (e.g., a model that runs locally on your phone).
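A rough rule of thumb shows why smaller and quantized models fit on weaker hardware: the memory needed just to hold the weights is about the parameter count times the bytes stored per parameter. The Python sketch below ignores activations, the KV cache, and runtime overhead, so real requirements are higher; the model sizes and bit widths are illustrative examples.

```python
def weight_memory_gb(params_billions: float, bits_per_param: int) -> float:
    """Approximate memory to store model weights, in gigabytes (10**9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    Ignores activations, KV cache, and runtime overhead.
    """
    bytes_per_param = bits_per_param / 8
    return params_billions * bytes_per_param


# Illustrative model sizes and precisions (16-bit vs 4-bit quantized).
for params, bits in [(70, 16), (32, 16), (32, 4), (3, 4)]:
    print(f"{params}B params at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

By this estimate, a 3B-parameter model quantized to 4 bits needs roughly 1.5 GB for its weights, within reach of a recent phone, while a 70B model at 16 bits needs around 140 GB, which means multiple high-memory GPUs.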

Reasoning opens up a whole new category.

While OpenAI has been leading the way with its o1 and o3 models, we are seeing models from other labs with this kind of test-time compute. Google’s Gemini 2.0 Flash Thinking Mode, Alibaba’s QwQ, and DeepSeek’s R1 are just a few. I’d expect to see more of these going forward into 2025 as all the major labs explore possibilities in this area.

Just last week, we saw a UC Berkeley lab release Sky-T1-32B-Preview, which researchers claim is competitive with OpenAI’s o1-preview… and was trained for just $450.[1]

Limitations Still Exist—It Isn’t AGI

This form of reasoning is quite interesting. One of the fundamental problems I’ve described with LLMs in the past is that they give you answers from the “center of mass of the statistical distribution.”

If you think of a bell curve (… or if you prefer to be more accurate, a bell curve with billions of dimensions…), you can think of LLMs as generally picking a data point from it to replicate… but most points will come from the center (by definition).
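The bell-curve intuition can be checked numerically. This Python sketch uses the standard library’s `statistics.NormalDist` to compute how much probability sits near the center of a one-dimensional normal distribution, then samples from it to show the same thing empirically. It illustrates the analogy only; it is not a model of how an LLM actually samples tokens.

```python
from statistics import NormalDist

bell = NormalDist(mu=0.0, sigma=1.0)

# Exact probability mass within k standard deviations of the center.
for k in (1, 2, 3):
    mass = bell.cdf(k) - bell.cdf(-k)
    print(f"within {k} sigma: {mass:.3f}")

# Empirical check: draw many points and count how many land near the center.
draws = bell.samples(100_000, seed=0)
near_center = sum(1 for x in draws if abs(x) <= 1.0) / len(draws)
print(f"sampled fraction within 1 sigma: {near_center:.3f}")
```

Roughly 68% of draws land within one standard deviation of the center and about 95% within two, which is the sense in which most sampled points come from the center.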

Chain of Thought does something that good prompting already does with LLMs. As Marc Andreessen and Ben Horowitz describe, you can actually get better code from an LLM if you ask it to write “secure code.”

Why? Well, because you are no longer asking the LLM for code from the center of the statistical distribution. You’re asking it to “go somewhere else” in its vector space. To be clear, it’ll go to that other spot and then give you the center of that distribution, but where you’ve ended up is a place with above-average code (say, Stack Overflow answers from more experienced programmers who care more about security).

In a way, reasoning asks the LLM to “wander” through its vector space to find a better place to draw its answer from. To be clear, though, it can’t wander out of its vector space entirely and come up with something new; it can only find a better spot within it.

As such, it’s still limited to being a statistical distribution, albeit a very powerful one that has seen a ton of things. Don’t get me wrong: that is quite valuable, but it isn’t creativity (which it still doesn’t have) or human reason (which can follow chains of logic into entirely novel territory).

Can any AI learn?

This is ironic because all frontier models now use reinforcement learning extensively (and expensively; just look at Scale AI’s valuation) in training.

Why ironic? Because reinforcement learning in its purer form (as opposed to most of what is currently called “agents” or “agentic AI” in the LLM world) is the study of AI agents that can learn novel things from nothing.[2] A fun example is someone teaching an RL agent to play Pokémon Red from scratch.

Those agents explore, then exploit (literally a technical term, chosen for alliteration, for choosing the actions they have learned are optimal), and from this process they can learn to do something entirely new. It isn’t “creative” per se, but it can certainly use far more trial and error than any human to learn from experience. Agentic AI (with LLMs) is trying to add some of this learning loop, but it is still in its early stages.
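The explore/exploit loop can be shown in its simplest setting, a multi-armed bandit: a stateless special case of reinforcement learning with actions and rewards but no states. This Python sketch uses epsilon-greedy action selection, where with probability `epsilon` the agent tries a random action (explore) and otherwise picks the action with the best estimated reward so far (exploit). The reward probabilities are made up for illustration, and this is far simpler than an agent learning Pokémon Red.

```python
import random


def epsilon_greedy_bandit(true_means: list[float], steps: int = 5_000,
                          epsilon: float = 0.1, seed: int = 0):
    """Learn which action pays best purely by trial and error.

    true_means[a] is the hidden probability that action a yields reward 1;
    the agent never sees it and must estimate it from experience.
    """
    rng = random.Random(seed)
    n_actions = len(true_means)
    counts = [0] * n_actions        # how often each action was tried
    estimates = [0.0] * n_actions   # running average reward per action

    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)                           # explore
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < true_means[action] else 0.0
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts


# Hypothetical reward probabilities for three actions.
estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print("estimated rewards:", [round(e, 2) for e in estimates])
print("times each action was chosen:", counts)
```

Setting `epsilon=0` removes exploration entirely; in this sketch the agent then never tries anything beyond the first action it settles on, which is why the exploration half of the trade-off matters.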

(Just to flag, though it’s fairly technical: on Dec 31, 2024, Google Research released a paper, “Titans: Learning to Memorize at Test Time,” describing LLM-style models that literally learn as they go, incorporating parts of the prompts/queries into a neural memory module.)

Regardless, we’ll have to see where this all goes, but the future holds much more in AI development (though many of the limitations already present in LLMs remain in force).

Special thanks to Nathan Lambert for answering some of my direct questions on this.

Thanks for reading!

I hope you enjoyed this article. If you’d like to learn more about AI’s past, present, and future in an easy to understand way, I’m working on a book titled What You Need to Know About AI that will be published in 2025.


[1] For those of you who would say I’m biased toward UC Berkeley because of my background: Go Bears, and bite me.

[2] OK, not totally true. To get pedantic, reinforcement learning has states, actions, and rewards. States and actions are often more straightforward (though not always), but rewards are often quite hard to craft, and they must be crafted well to produce an effective agent.

