AI Winters and The Third Wave of Today

A sneak preview from my upcoming book "What You Need To Know About AI"

The pre-sale campaign for my book What You Need To Know About AI wraps up in a few days (Sunday February 9th). Before I dive into the phase where I make hundreds of edits (or more) and incorporate everything that has happened in AI in… *checks calendar* the last 2 months since I finished the first draft (which is a lot!), I’ll be sharing two more previews before the last day of the campaign.

The first preview is an excerpt from the beginning of the chapter AI Winters and The Third Wave of Today. I chose it as a preview because it blends more recent AI history (2010s) during one of the “low” periods of AI with my personal experience on-the-ground as a graduate student and entrepreneur working with and around different industry practitioners.

Also, in case you missed it, you can check out some of the previous interviews I’ve published that are used as part of background research for the book including:

Dr. Joshua Reicher and Dr. Michael Muelly, Co-Founders of IMVARIA, about the use of AI in clinical practice

Tomide Adesanmi, Co-Founder and CEO of CircuitMind, about the use of AI in electronic hardware design.

Alex Campbell, Founder and CEO of Rose.ai, about the use of AI in high finance.

If all this intrigues you and you’d like to pre-order a copy or share with someone who’d find this interesting, I’d really appreciate your support!

There are also exclusive benefits to pre-ordering now such as 1-on-1 ask anything about AI sessions, different book bundles (to share with friends or colleagues), and more.

You can sign up below to get updates on the book’s development and release here:

Now, on to the preview!

AI Winters and The Third Wave of Today

“So, are you learning all of this because you enjoy spending time on obsolete systems?”

- asked a programmer friend who will remain unnamed when he found out I was taking a class on knowledge-based AI for my graduate degree.

It’s hard for those who were not in and around AI pre-2010 (and even for a long time afterwards) to understand how deep the sense of disillusionment and failure was around the field, especially in professional circles. Not only, as we will discuss, did expert systems and LISP machines—the implementation of knowledge-based AI—fail, but by the late 1990s neural networks were still largely unworkable and dismissed as infeasible.

This left both branches of AI as failures.

I still remember how, during that time, everyone, including me, took pains to avoid describing anything as AI (or even machine learning).

What a contrast to today, when absolutely anything and everything by big or small companies is dressed up as “AI.”

That being said, by 2006, and accelerating as we moved into 2009 and 2012, excitement around neural networks had started to reignite. This didn’t really help sentiment in the broader tech and computer science community about knowledge-based AI, however.

It was 2015 when my friend made the comment, while I was taking the course during my master’s degree in computer science from Georgia Tech (part-time and remote). It was a somewhat unique position, as I had already come back to Silicon Valley from the east coast and the hedge fund industry, and was drinking from the firehose of hanging out around startups in San Francisco and within both the UC Berkeley and Stanford ecosystems.

As such, I was “in industry,” while I was a student, and had a peanut gallery of experienced tech veterans and founders.

It’s noteworthy that even my most “academic” courses of heavy math theory—which I decided to take after my experience during my MBA of taking PhD seminars in machine learning in the UC Berkeley CS and Statistics departments as my electives (... none of the rules forbid it, technically, and no one else seemed insane enough to do it in my class years)—elicited this type of comment. Most were impressed that I was burning away my nights and weekends on bringing myself up to speed. There were glimmers of our Third Wave boom in deep learning percolating in the background. Knowledge-based AI was something else, though.

It’s an indication of how deep the disillusionment and, in a way, embarrassment went in the aftermath of the Second Wave of AI.

When Were the AI Winters?

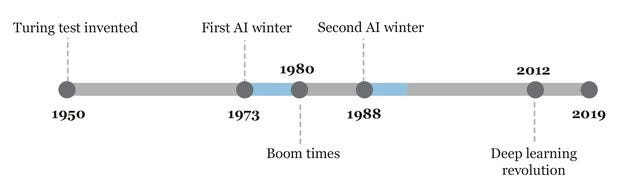

There is no objective definition of AI winter. However, most agree there were two AI Winters in rough windows following the two Waves.

The first was the fall of “connectionism,” which happened in the years following the publication of Minsky and Papert’s Perceptrons (1969). Some instead trace it to the Lighthill report in 1973, which frankly discussed how the promises of AI were overstated, especially in machine translation.

This was soon followed in the 1980s by the boom in knowledge-based AI, and then the subsequent bust. Attendance at the National Conference on Artificial Intelligence (AAAI) dropped from over 5,000 in 1986 to fewer than 2,000 in 1991, and finally to only around 1,000 or fewer from 1995 onward.

During these winters, government funding, private investment, and researcher interest significantly dropped off. Additionally, as said, they generated hangovers of embarrassment over the field. This, of course, is now what some experts like Gary Marcus are worried about following our current AI boom.

However, we should give knowledge-based AI its due in how it worked and why it failed, which sets up an excellent contrast for the Third Wave: the return of connectionism… but different.

Expert Systems

So, what is an expert system, and why is knowledge (and symbolic logic) so integral to them?

As one industry presentation in 1985 described it, “The key factors that underly [sic] knowledge-based systems are knowledge acquisition, knowledge representation, and the application of large bodies of knowledge to the particular problem domain in which the knowledge-based system operates.” Furthermore, the presentation continues: “don’t tell the program what to do, tell it what to know… The task is elucidating and debugging knowledge, not writing and debugging a program.”

This is key. Knowledge is what fundamentally defines a knowledge-based system, which is also what makes it so different from systems today.

Consider the worry that often comes up with LLMs: “hallucinations,” false knowledge that is confidently served up by LLMs in exactly the same way as true knowledge. Infamously, some lawyers who attempted to use ChatGPT to write their legal briefs found themselves sanctioned because many of the court cases they cited were not real (but sounded real, until the court looked into them).

As we will discuss shortly, this is because neural networks are, in a way, the opposite: they don’t actually have embedded knowledge.

This kind of hallucination can never happen with a knowledge-based AI. They are transparent (due to their use of logic) and always use knowledge built into them (not made up) to come to their conclusions. Obviously, this sounds great. What went wrong?

Let’s take an example of one of these systems.

MYCIN

Although there are quite a few different expert systems that emerged in various fields, the most famous was probably MYCIN. MYCIN was used for the diagnosis of infectious disease and had a particular speciality in bacterial infections of the blood. Since it really demonstrated what was possible, it was widely studied and imitated.

A chat with MYCIN would be far more rigid than what one would expect of a modern LLM like ChatGPT. It would ask for details about the patient, when samples were taken, and ask clarifying questions. However, in terms of responses, it wouldn’t seem that far off from what could be a ChatGPT conversation.

But underneath, it’s completely different.

Under the hood, MYCIN translates every word of English into a specific data format of its own, which it then matches against its pre-programmed and populated knowledge.

How specific expert systems did this differed, though MYCIN used rules and a context tree. It specifically needed to know what was an object, attribute, value, and level of certainty—basically, building its own grammar and syntactic understanding of what was being provided to it.
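To make the object, attribute, value, and certainty idea concrete, here is a minimal Python sketch. It is only loosely modeled on the description above and is not MYCIN’s actual implementation: the names `Fact`, `Rule`, and `apply_rule`, the way certainties are combined, and the sample facts are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    obj: str          # the thing being described, e.g. "organism-1"
    attribute: str    # which property of the object, e.g. "gram_stain"
    value: str        # the property's value, e.g. "negative"
    certainty: float  # confidence in the fact, from 0.0 to 1.0


@dataclass(frozen=True)
class Rule:
    premises: tuple    # (attribute, value) pairs that must all be known
    conclusion: tuple  # (attribute, value) asserted when the premises hold
    strength: float    # how strongly the rule supports its conclusion


def apply_rule(rule, facts, obj):
    """Return the concluded Fact if every premise matches, otherwise None."""
    known = {(f.attribute, f.value): f.certainty for f in facts if f.obj == obj}
    if any(premise not in known for premise in rule.premises):
        return None  # a premise is missing, so the rule stays silent
    # Assumed combination: weakest premise scaled by the rule's strength.
    weakest = min(known[premise] for premise in rule.premises)
    attribute, value = rule.conclusion
    return Fact(obj, attribute, value, weakest * rule.strength)


# Placeholder facts and rule for illustration only (not medical knowledge).
facts = [
    Fact("organism-1", "gram_stain", "negative", 1.0),
    Fact("organism-1", "morphology", "rod", 0.8),
]
rule = Rule(
    premises=(("gram_stain", "negative"), ("morphology", "rod")),
    conclusion=("category", "example-category"),
    strength=0.6,
)
derived = apply_rule(rule, facts, "organism-1")
```

Every conclusion in a sketch like this can be traced back to the exact facts and the exact rule that produced it, because nothing exists outside that explicit structure.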

This also means it’s completely auditable by any human who wants to peek under the curtains and make sure it’s working correctly. As said, transparent and always uses the knowledge built into it—thus, reliable.

What is the weakness of being defined—or confined—to knowledge? Well, what happens when MYCIN or a similar system comes across something that isn’t explicitly programmed in?

Now, let’s be clear. MYCIN and other expert systems are not totally rigid (there are systems of logic and inference to help them draw conclusions, almost like a math proof), but they are quite rigid when faced with novel situations that rhyme with, but do not closely match, what they know. This was a widely acknowledged weakness of expert systems even at the time: they cannot deeply reason or learn from mistakes, and both limitations make their use quite narrow in scope.

This means that if MYCIN comes across a case that it cannot definitively work with using its logic (even fuzzy logic) and knowledge, it cannot proceed. Contradictory symptoms? This can bring the system to a halt. In a way, this is great. The system “knows” (by not having enough information) when it should not try to guess, which is what you would want in critical scenarios like medical diagnosis.
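The “refuse rather than guess” behavior can be sketched in a few lines of Python. This is a toy under stated assumptions, not how MYCIN was built: `KNOWLEDGE_BASE`, `conclude`, and the findings and conclusions inside them are hypothetical placeholders, not medical knowledge.

```python
# Hypothetical knowledge base: each entry pairs the findings a rule
# requires with the conclusion it supports. Placeholder content only.
KNOWLEDGE_BASE = [
    ({"finding_a", "finding_b"}, "conclusion_x"),
    ({"finding_a", "finding_c"}, "conclusion_y"),
]


def conclude(findings):
    """Return the one supported conclusion, or None if the system cannot proceed."""
    matches = {
        conclusion
        for required, conclusion in KNOWLEDGE_BASE
        if required <= findings  # every required finding is present
    }
    if len(matches) != 1:
        return None  # nothing matched, or the matches conflict
    return matches.pop()


covered = conclude({"finding_a", "finding_b"})
unknown = conclude({"finding_z"})
conflicting = conclude({"finding_a", "finding_b", "finding_c"})
```

Only the first call returns a conclusion. The other two return `None`: one because nothing in the knowledge base covers the case, the other because two rules point in different directions.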

In practice, this rigidity also means that in any real-world environment you will constantly run into new situations that can only be handled by expensive additions of knowledge (by human experts) to the system’s meticulously maintained database. This works if the number of variations is finite, as in many of the toy problems used to prove out the concept of symbolic or knowledge-based AI. It is horrible when things are much more complicated, as is the case in most places where we rely deeply on experts.

This makes MYCIN, and similar systems, fairly confined and of limited use. While they are more sophisticated, one can analogize them to lookup tables. Or, in less technical parlance, knowledge-based AI looks like a student who memorizes facts, as opposed to a neural network, which looks like a student who forgets specific facts but remembers high-level concepts (... and sometimes makes up facts).

Ultimately, this is what made expert systems (and other similar systems) impractical and killed off industry interest in them—and AI—for decades. Effectively, until now, in our Third Wave of AI.

Thanks for reading!

If you enjoyed this passage, check out the book and consider pre-ordering or spreading the word. Either way, I’d really appreciate your support!

Sign up below to get updates on the book’s development and release here!