Let’s Talk About AI Compute

What really matters and why AI computing is exactly the same as all other large-scale computing

There’s a lot of talk these days about AI compute. In fact, I talked about it myself, in mentioning that people are overestimating the importance of AI compute as a bottleneck. Now, despite my assertion that it isn’t as critical as some people say, it doesn’t mean compute doesn’t matter. After all, it is what AI runs on. Additionally, since there isn’t actually much defensibility in AI algorithms themselves, compute is a “lever” for certain companies to maintain an advantage. It’s just not as absolute a “lever” or barrier-to-entry as certain breathless commentators assert.

Part of this conversation often veers into designing AI ASICs (application-specific integrated circuits). Basically, single-purpose chips that run specific AI-related computations faster and/or more efficiently than more general purpose CPUs and GPUs. Google/Alphabet, Facebook/Meta, and Apple have all created their own version of these. But most commentators are missing the point.

You can have a specific application in AI be common enough to embed it in hardware (think about voice assistants that might have an AI chip to just do voice interpretation, or an AI chip to specifically run, say, FaceID). This is why these big companies know what kind of ASICs they want to make.

However, when we talk about the vast potential of AI, we generally are not talking about constant, “mundane” uses of AI that become so routine that we forget they even have amazing technology underneath them (say, FaceID). They almost become generic utilities.

When we talk about AI’s vast potential, we’re generally talking about stuff like ChatGPT, which comes along and works really well, but may be used in different applications and have different structures in the future. And it works fine on GPUs. This is because the way most of these models like LLMs (what sits underneath ChatGPT, Bard, LLaMa) evolved was directly influenced by the hardware constraints we had... and still have.

To understand what real AI compute bottlenecks are, we have to actually go all the way back to fundamentally how computers work.

Note: this is a much more “technical” post than some of my past ones. However, I think it’s important to build intuition around how AI actually uses computation. I’m not going to go super deep into details, or AI algorithms, but I will be covering how computers work, why AI has to be distributed, and the challenges that creates.

You can’t just use a bigger computer

In the early days of the internet, if your website got too much traffic (meaning, too many visitors to serve... which means “too little computer to serve”) you had a choice. Scale vertically. Or scale horizontally.

“Vertically” meant just getting a bigger computer. This is almost always the easier path and is also what a lot of software developers got used to with the inevitable march of Moore’s law. Computers were just getting “bigger” in computing power (even if their physical size shrank) every single year. Memory and storage also kept growing.

Moore’s Law was that the number of transistors on a chip would double every year (later amended to every two years; the often-quoted 18 months is a related estimate for performance), which meant that computing power would follow. Although people will argue with you (meaning, they argue with me) that Moore’s law still applies, the strictest version has been dead for a while. It was really getting stretched to the limit in the mid-2000s already. As transistors approached atomic scale and quantum effects started messing things up, we couldn’t really strictly double the number of transistors in the same area. The computing industry is finding ways around it, but since individual processors couldn’t keep getting faster at the old pace, more parallel processors (and other “parallel” uses of transistors) started being used to do “more work.”

But a single faster worker is not the same as two workers. Even in a human context, people can’t necessarily all be doing the same task at once, and you’re going to hit bottlenecks, say if you’re trying to assemble a lot of burgers. Coordination slows things down. The fact that multiple workers aren’t a hive mind sharing a single brain is an issue (there’s even an entire book written by an IBM computer scientist—about people—on this very point).

This finally brings us to scaling horizontally and rewinding back to the mid-1990s.

The other way you could scale is by getting more computers. But similar to the workers making burgers... and similar to our poor computers that themselves have generally stopped getting that much faster and have mostly gotten more parallel power... it’s not the same as just a bigger, better computer.

What if someone logged onto your website on one of your servers (computers), and then suddenly got served by another one? How would you keep track? How do you split up the work? It’s just like being handed from one doctor/customer service representative/wait staff to another: you lose something in the handoff and need some way to “get everyone in sync” that is itself costly in time. Computers are the same way.
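To make the "how would you keep track?" question concrete, here is a minimal Python sketch. The `Server` class, the round-robin routing, and the user name are all hypothetical and exist only for illustration; real load balancers and session stores are considerably more involved.

```python
import itertools


class Server:
    """Hypothetical web server that remembers logins in a session table."""

    def __init__(self, name, sessions=None):
        self.name = name
        # By default each server gets its own private session table.
        self.sessions = {} if sessions is None else sessions

    def login(self, user):
        self.sessions[user] = True

    def is_logged_in(self, user):
        return user in self.sessions


def simulate(servers):
    """Send a login to one server and the follow-up request to the next one."""
    round_robin = itertools.cycle(servers)
    next(round_robin).login("alice")
    return next(round_robin).is_logged_in("alice")


# Each server keeps its own sessions: the second server has never heard of alice.
isolated = simulate([Server("a"), Server("b")])

# Both servers consult one shared table. In reality this would be a separate
# service that every request must reach over the network, which is exactly
# the coordination cost described above.
shared_table = {}
synced = simulate([Server("a", shared_table), Server("b", shared_table)])

print(isolated, synced)  # False True
```

The fix works, but only by adding something every server now has to talk to, which is the overhead in question.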

Compared with “one worker does everything,” having many workers creates a huge amount of overhead.

Now you understand why training AI is a pain. You’re not going to be training ChatGPT (which reportedly cost millions of dollars and, supposedly, 10,000 GPUs to train) on “one big computer.” You have to use a lot of them, and take the attendant losses from coordination and communication.

This is annoying not only because it’s more costly in certain ways, but you need to create entirely new processes and ways of working. Just like how if you have a company vs. just doing stuff yourself, you suddenly need team meetings, managers, etc.

AI’s true challenge has always been every scaling tech company’s challenge—distributed computing

Notice that in my 1990s server example, I said that you “used to” have the choice between vertical and horizontal. If you’re a tiny website, you still do. If you’re a big website, you absolutely do not. It’s all horizontal.

Aside from cost, computers just hit a physical limit and don’t “get bigger” (in whatever way you want to use the term). Big computers (supercomputers) get exponentially more expensive the bigger they get, and even if you wanted to spare no expense, you will still hit a hard wall on how powerful one can get.

Eventually, at scale, you’ll always need more parallelism and will have to eat the cost of distributed computing.

This isn’t new. It’s really the exact same challenge that Google, Meta (Facebook), Amazon, Microsoft, and everyone else faced when they were scaling. It’s not really a coincidence that the best companies in AI are the exact same list.

What is the problem with distributed computing?

I’ve already repeated this a few times. If you don’t have one single Superman (or maybe The Flash) making burgers and instead have to rely on multiple mere mortal workers, you suddenly need to spend time, money, and energy creating processes to manage the distribution of work.

It’s the same with computers and any big enough problem: it’s annoying to do parallel computing (even if it’s on just one computer) and made worse in the specific case of distributed computing (which is across more distances and usually implies a lot of computers).

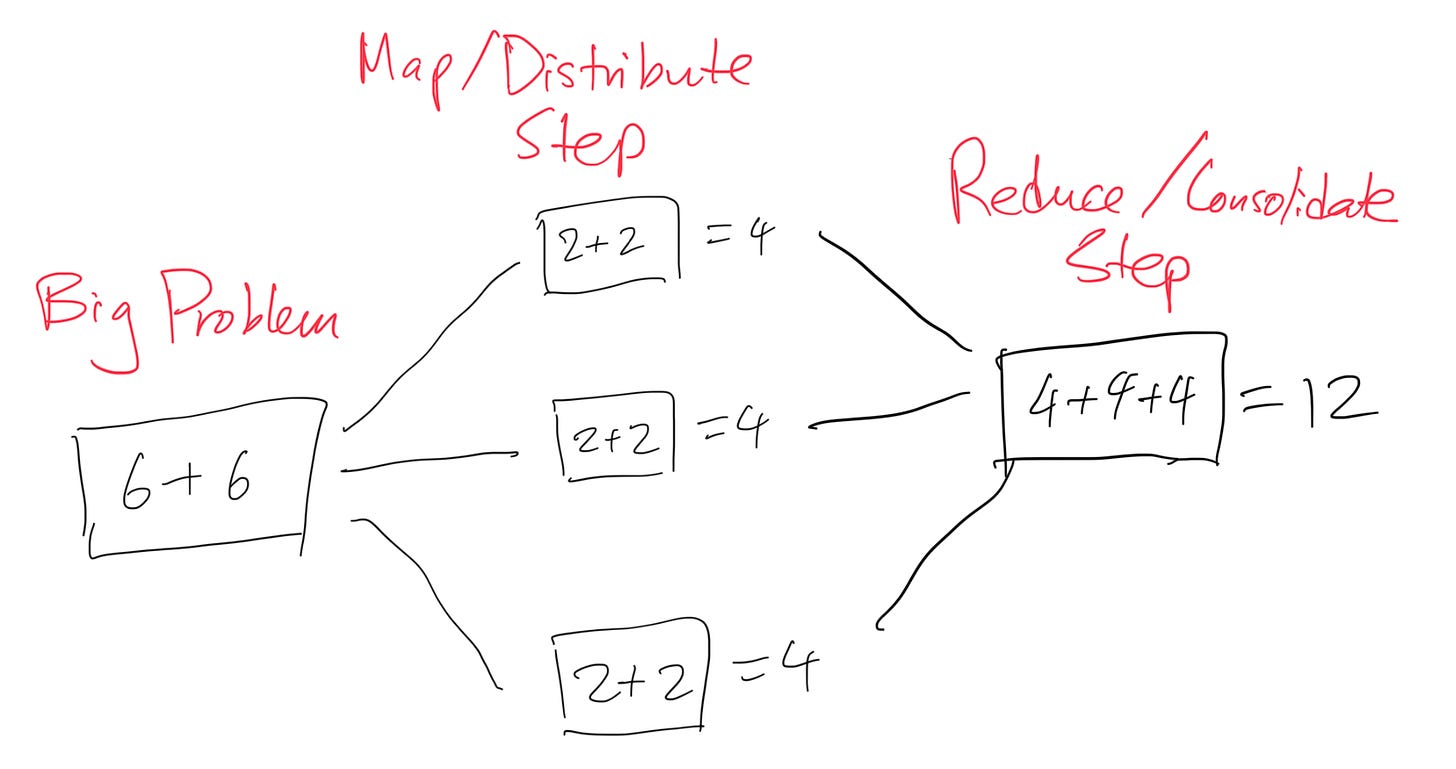

If you need to start splitting things up, whether it’s server requests or AI training data, you need to start figuring out how to do the splitting and ideally in a way that minimizes the amount of downtime for any single worker. This is where Hadoop/Google/actually-a-lot-of-people’s famous concept of MapReduce comes from. It isn’t the only framework, but it’s a useful way to conceptualize a lot of these problems.

Map by distributing a single problem into a bunch of smaller problems, and then Reduce to collect the smaller problems back together into the solution of the big problem.

In a super trivial example, because addition is associative and commutative (you can regroup and reorder the terms without changing the sum), you can turn 6 + 6 into (2 + 2 + 2) + (2 + 2 + 2) or (2 + 2) + (2 + 2) + (2 + 2).

Let’s just say that you don’t have a machine that can handle bigger than 2 + 2 (and only two numbers). You can instead, again, factor 6 + 6 into the three smaller (2 + 2) problems and give it to three machines to calculate (2 + 2) = 4.

Then, those three machines come together and give you 4 + 4 + 4. Now, hopefully, you have something at the next step that can add these three 4s together to Reduce the problem to 12.

It’s kind of a weird example, but an easy one to see how you can take one big problem, break it into small problems, and then gather the small problems to solve the big one.
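The toy problem above can be sketched in a few lines of Python. This is only an illustration of the Map and Reduce idea, not how Hadoop or any real framework is implemented; the function names are made up, and threads stand in for the three separate machines.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_pairs(values):
    """Break the big problem into chunks of at most two numbers."""
    return [values[i:i + 2] for i in range(0, len(values), 2)]


def small_machine(pair):
    """Stand-in for one limited machine: it can only add one small chunk."""
    return sum(pair)


def map_reduce_sum(values, workers=3):
    chunks = split_into_pairs(values)
    # Map: hand each small problem to its own worker.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(small_machine, chunks))
    # Reduce: combine the partial answers into the final one.
    total = reduce(lambda a, b: a + b, partials, 0)
    return partials, total


partials, total = map_reduce_sum([2, 2, 2, 2, 2, 2])
print(partials, total)  # [4, 4, 4] 12
```

Note that the Reduce step cannot start until every worker has reported back.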

However, you can also start to see where the trouble comes in. What if one of the machines takes longer? Well, the problem will slow down by as much as the slowdown of the single slow machine—because everyone else has to wait for the final (2 + 2) problem to finish before moving to the next step. If the machine errors out or dies (as they often do) you actually have to redo that entire part of the problem on a new machine.
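A tiny sketch of why one slow or failed machine holds everyone up. The timings are invented, and the retry model is a deliberate simplification: it assumes a failure is only noticed at the end of the run and that the replacement machine is exactly as fast as the original.

```python
def step_time(worker_times):
    """A synchronized step finishes only when the slowest worker finishes."""
    return max(worker_times)


def step_time_with_retry(worker_times, failed_index):
    """One worker dies at the end of its run, so its work is redone from scratch."""
    times = list(worker_times)
    times[failed_index] *= 2  # the lost attempt plus a full redo
    return max(times)


healthy = [1.0, 1.0, 1.0]    # three machines each doing (2 + 2), in seconds
straggler = [1.0, 1.0, 3.0]  # one machine is three times slower

print(step_time(healthy))                # 1.0
print(step_time(straggler))              # 3.0
print(step_time_with_retry(healthy, 2))  # 2.0
```

Two of the three machines did their job on time in every case, and it made no difference to when the step finished.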

We also factored this problem well. What if you didn’t? Suddenly, you’ll have some machines working way more than others, and again have to coordinate.

In this example, I also waved away gathering all of the sub-problems. What if we didn’t just magically get 4 + 4 + 4? What if we actually had to really transmit the data over physical wires (you know, like in the real world)? Suddenly, the distance of those machines from one another, the speed of the wires, and everything else makes a huge difference in how long this will all take.

A side note on parallelism

The examples I give here are deliberately simple ones. The individual problems don’t have dependencies on each other, and the problem can be neatly split up. In industry and academia, this is known as “embarrassingly parallel.”

One of the biggest pains of modern programming and computing is that Moore’s Law is ending and CPUs are no longer just getting faster generation-by-generation (in a way, automatically granting you a “bigger” computer by Intel and AMD’s grace). Instead, CPUs have become more parallel (anyone remember the Core Duo, then quad-core chips, and then Apple Silicon’s M1 with 8 cores?). Most programming tasks are not nicely embarrassingly parallel, which means that your existing code doesn’t magically run faster on the new CPU.
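One standard way to put a number on this is Amdahl's law, which the post does not name but which captures the same point: the part of a program that cannot be parallelized caps the speedup, no matter how many cores you add. The sketch below is a back-of-the-envelope illustration, and the 50% figure is an arbitrary assumption.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Ideal speedup when only `parallel_fraction` of the work can be split up."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


for cores in (1, 2, 4, 8):
    half = amdahl_speedup(0.5, cores)         # half the program is inherently serial
    embarrassing = amdahl_speedup(1.0, cores)  # embarrassingly parallel
    print(f"{cores} cores: {half:.2f}x vs {embarrassing:.2f}x")
```

With half the work stuck in serial, eight cores get you less than a 2x speedup, while the embarrassingly parallel version gets the full 8x.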

We can put this aside in this case, because necessity forced us to make as many AI tasks embarrassingly parallel as possible. Some of these were intellectual triumphs, like parallelizing backpropagation (which was necessary for enabling neural network training), that it is a bit of a shame to just “wave away,” but that’s too technical for this particular overview. The point is statistical learning/ML/AI (as a field) expended huge efforts to ensure that the way we run AIs looks this way.

In fact, ChatGPT was enabled by LLMs, which were enabled by transformers... which, among their important characteristics, finally allowed for much more parallel scaling of language models. That, more than anything else, enabled ChatGPT, just as convolutional neural networks (a related architecture, which also parallelized and made image representations more efficient) finally made image recognition tasks viable by allowing easy parallelization.

Parallelism is embedded in our AI algorithms and that’s why we use GPUs

So far, I’ve just assumed a machine is a machine. But, of course, it isn’t. It’s CPUs, GPUs, memory... and architectures and a ton of other things.

But in terms of things that matter, one thing to point out is CPUs are single fast workers. GPUs are actually legions of slower (but many, many more) workers.

This is really the reason why modern GPUs (and specifically NVIDIA GPUs, which use a programming framework called CUDA) are so critical in AI.

(As for the importance of being able to actually efficiently program with parallel code on GPUs, there is a reason why Chinese companies are hoarding NVIDIA GPUs—and not AMD GPUs. I explain why and the importance of CUDA a bit on ChinaTalk with Jordan Schneider. As said, parallel computing has been a huge pain for the software industry. It isn’t a small thing to have the only well-optimized and accepted parallel coding framework/API for your GPUs.)

Anyway, you can’t make the computer bigger, so the inherent model and problem need to be splittable. If it’s already splittable, it can be split into even smaller pieces. And if that’s true, then a GPU will be able to tackle the problem more efficiently than a CPU. So, you may be computing in a distributed fashion across multiple computers, but each computer will also benefit from using a GPU to split the problem instead of a CPU which doesn’t.

It’s parallelism all the way down... and up, in terms of multiple computers, but also multiple data centers.

Messy reality matters

In our idealized examples, we don’t need to worry about fiber optic cables being cut, certain wires being frayed and slow, certain data centers being inaccessible, faults in individual computers, etc.

In fact, most tech startups, and even AI companies generally don’t need to worry about this. This is the province of the modern cloud provider like AWS or Azure. But the limits of the cloud providers are also the limits of the AI companies.

Ultimately, if you think about it, the hardware will matter and will bottleneck certain operations (again, I never denied this in my piece about how compute isn’t the most relevant bottleneck). But certain hardware is much more important than others. Without getting into confusing, specific numbers, just think: what do you think takes longer—calculating a value on a single computer, or transmitting the value?

Well, that depends a lot on how complicated the problem is. At some point, though, if you break down the problem enough (like we need to in “scaling horizontally”), the limit of what we can do is suddenly dictated by how fast the value is transmitted to either the next part of the process or to the “Reduce” step to bring the problem back together.

Horizontal speed, based on how you decompose a problem, is going to end up being limited to a significant degree (if you can break down the problem) by the speed of communication. This is basically why data centers have spent so much money on GPU, computer, and data center interconnections. Communication ends up being a huge bottleneck. A faster computer (or, in reality, “bigger” GPU) is nice, but if you have access to enough computers, the thing that really hurts you in scaling horizontally is how fast the communication can be, and how many coordination problems you end up with.
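A toy cost model shows the shape of this. Everything here is an assumption made for illustration: the work splits perfectly, every worker is equally fast, and each step pays one fixed communication cost as soon as more than one worker is involved. Real systems are far messier, but the trend is the point.

```python
def breakdown(total_work_seconds, workers, comm_seconds):
    """Return (step time, share of that time spent communicating)."""
    compute = total_work_seconds / workers            # shrinks as workers are added
    comm = comm_seconds if workers > 1 else 0.0       # does not shrink at all
    total = compute + comm
    return total, comm / total


for workers in (1, 10, 100, 1000, 10000):
    total, comm_share = breakdown(1000.0, workers, 1.0)
    print(f"{workers:>5} workers: {total:8.2f}s per step, {comm_share:.0%} communicating")
```

Under these made-up numbers, communication is a rounding error at 10 workers and the majority of each step at 10,000, at which point adding computers barely helps and only a faster interconnect does.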

Parallelism all the way down

I talk about this problem mainly on the scale of computers since it’s easier to grasp. However, I’ll now point out that this picture doesn’t just apply to computers and data centers being connected together.

Some of the massive leaps in computing speed in both CPUs and GPUs have come from locality of memory. Literally, one of the reasons why some later CPUs and GPUs are so much faster than prior ones is that engineers have been able to architect larger caches of memory closer to the computing units. This means that, instead of needing to send certain things to RAM (random access memory, the other memory that your computer has, and has much more of than cache memory), the CPU or GPU can just very quickly send to the local memory and get it back. We’re at the point where the speed of light is a huge issue and being close to the computing units matters.

This is, by the way, why Apple Silicon seems so incredible next to everyone else’s computing chips. They stuffed everything together, and because Apple is Apple’s only customer here, they could easily just architect everything to be the shortest distance with absolutely no regard to needing to work with or be compatible with anything else. It’s a matter of locality.

Now, think about it—if locality for computing literally matters so much that something next to the computing unit vs being on the same motherboard (for those who don’t know what that means, basically the same board in the same computer) makes an order of magnitude difference of speed... what do you think a slower connection between computers will cause? God forbid, what do you think slower connections between data centers will cause if your problem is big enough to require utilizing multiple of them?

The problems are the same as they’ve always been in scaled, distributed computing

I emphasize interconnects here a lot, but ultimately, especially if you note that the problem goes all the way down to each individual integrated circuit, what we’re dealing with is not just “interconnects” but fundamentally just the same problems that “big computing” has always had.

It’s useful to remember this and not get caught up in the hype. There’s a reason why the companies that are so good at AI are who they are—it’s the same problems when we’re dealing with the hardware.

That should give one a clue as to where the opportunities are or are not. If nothing else, one should ask, if the problems truly are the same, do you really think that the true breakout startup opportunities are going to be in places where there are already players who are masters at solving those problems (and need scale to do it)? Or is it going to be somewhere else entirely: somewhere, perhaps, where the application of AI can be protected while the hardware, models, and even “generic” web data are commoditized?