Models Are Not Exponentially Growing in Size

Stating the Obvious (for AI Experts) That’s Non-Obvious to Everyone Else

Straddling the worlds of AI researchers/analysts and “normies” is sometimes odd. That perspective, and bridging the two worlds, is the premise of my recent book. Even so, I’m still surprised when something is so obvious to people following AI that it doesn’t even need to be said, yet is either totally unknown or completely misunderstood by the broader population.

This, of course, is the curse of knowledge: important things go unsaid... because everyone already knows, right? That leaves much of the public with a lot of misconceptions.

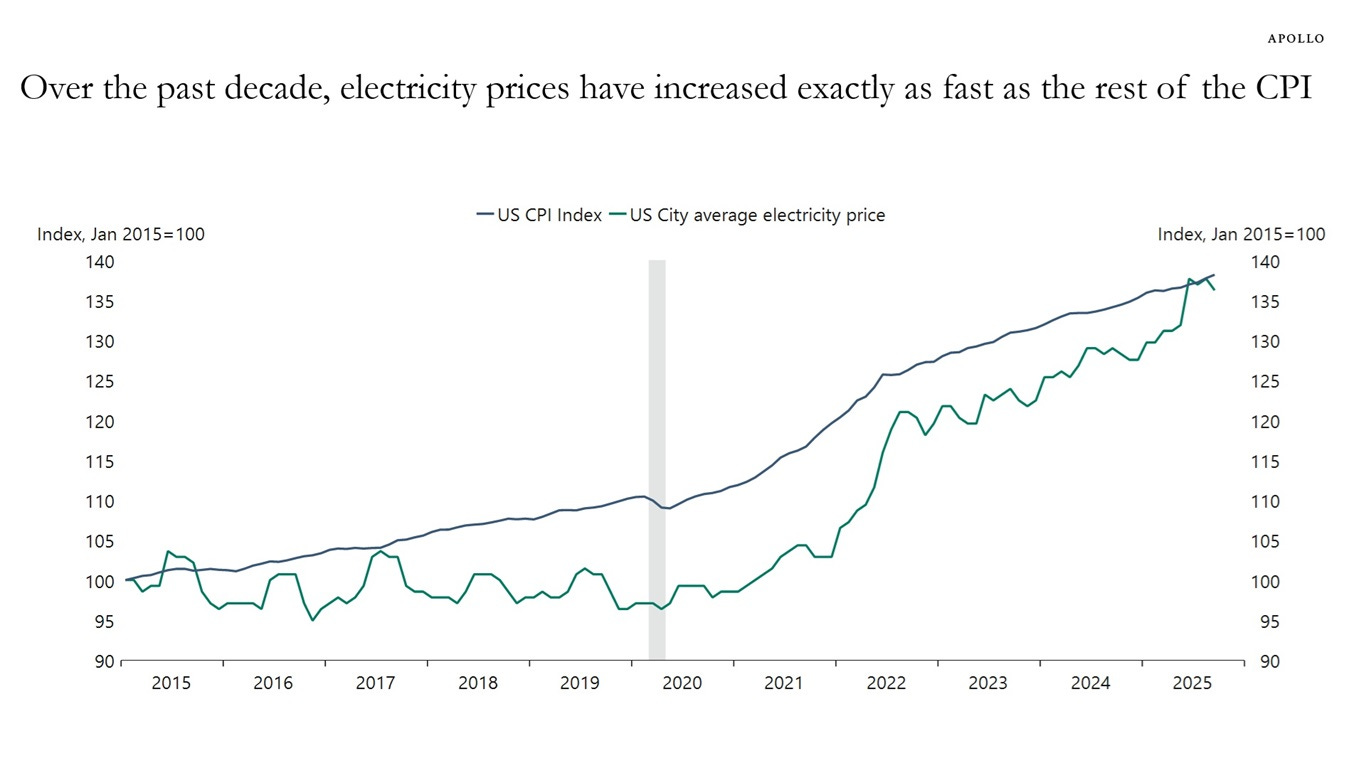

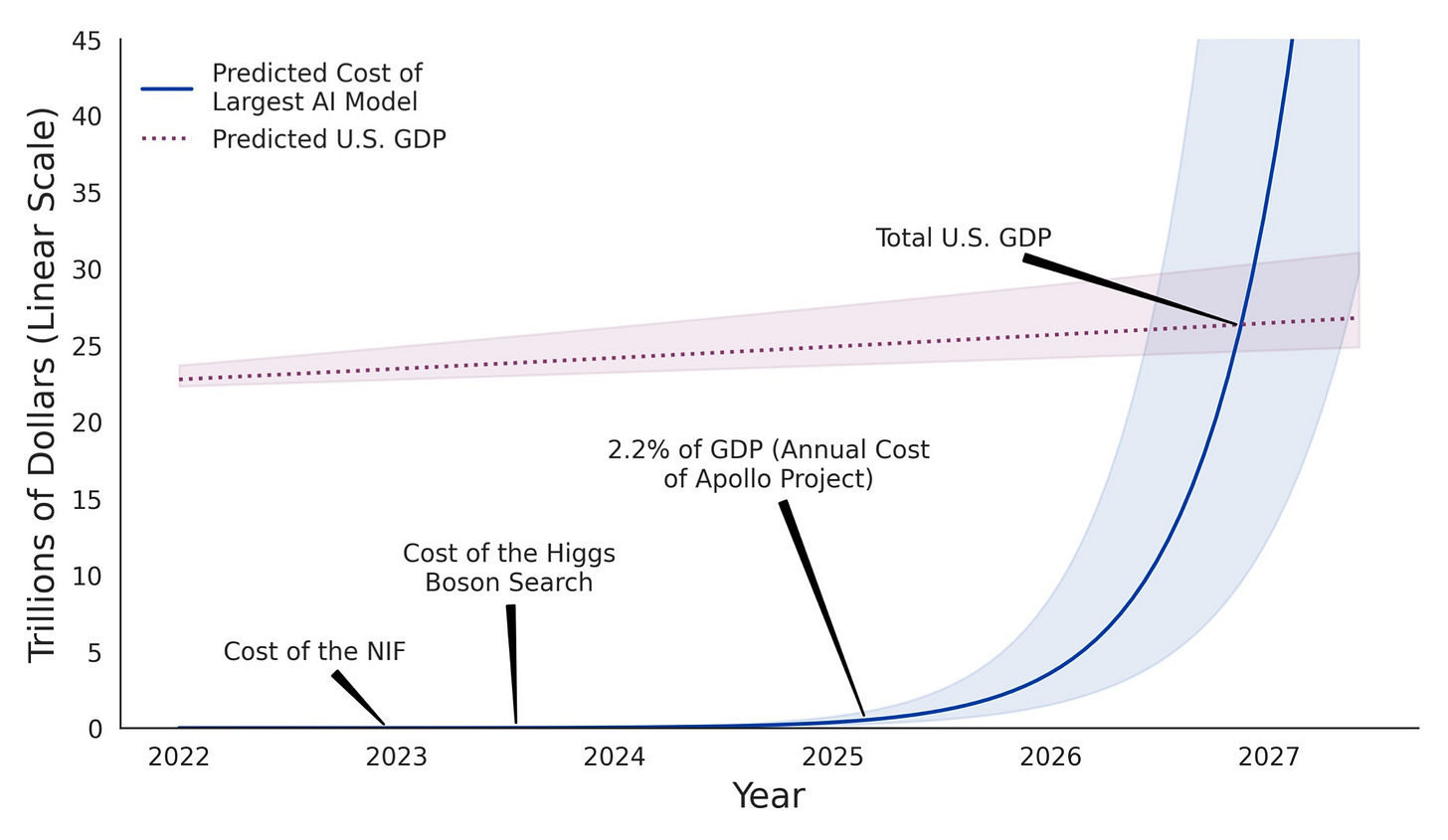

In 2022, the Center for Security and Emerging Technology (CSET) projected that by 2030-2040, AI model costs would exceed total US GDP. A few months ago, people were freaking out about AI consuming all of the world’s water. A few days ago, Apollo provocatively stated that the AI energy gap “will not be closed in our lifetime.”

This, of course, makes total sense, because AI is just consuming everything and becoming infinitely bigger… right?

If you project growth from the beginning of any exponential curve, it inevitably looks bonkers in a relatively short period of time.

At the beginning of the deep learning and LLM boom, especially from 2020 to 2023, it sure looked like we were headed for the wild ride CSET projected.

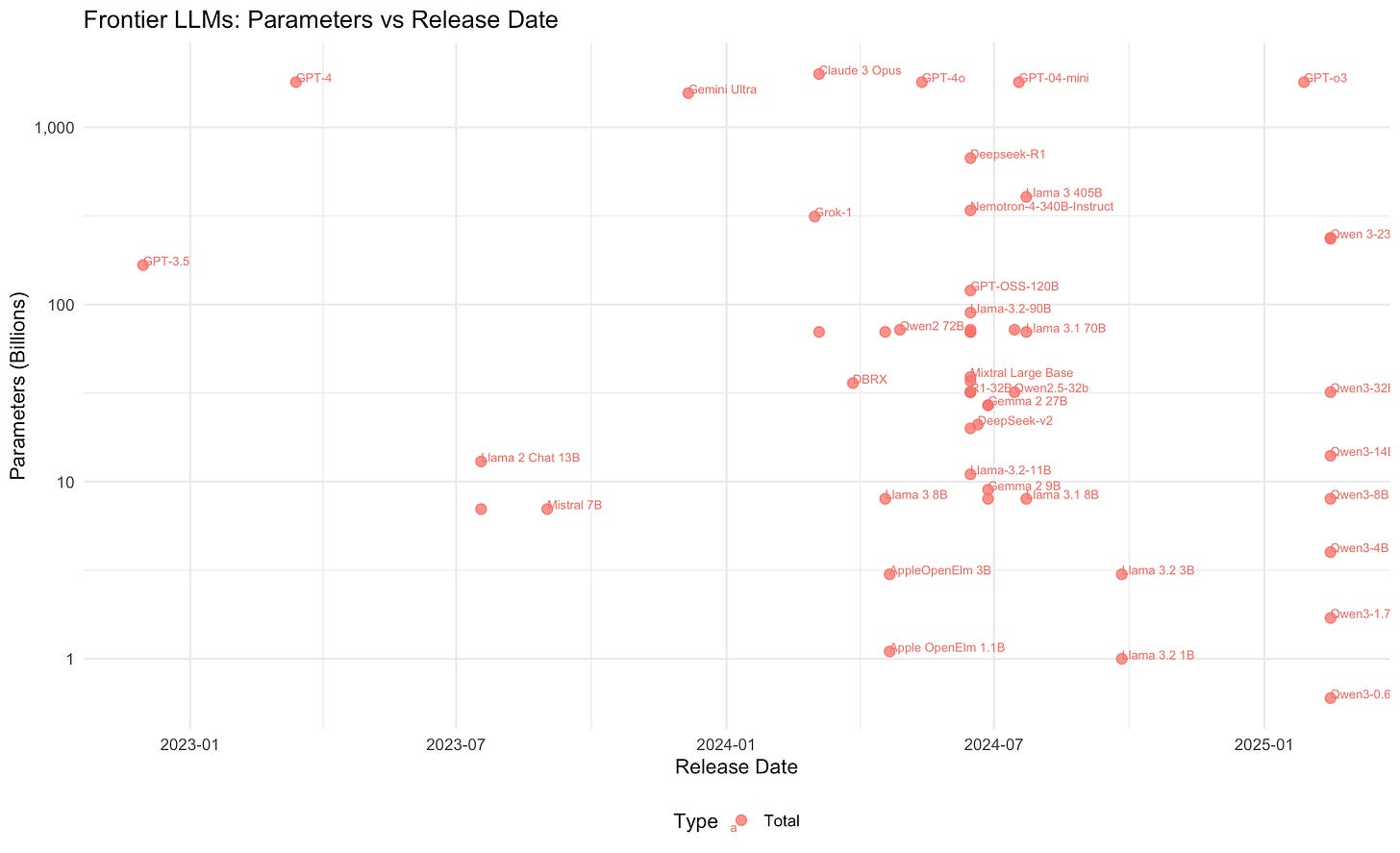

That turned out not to be the case, so much so that it’s actually extremely hard to find a good chart of model parameter sizes. Part of the reason is that, since around 2023, many major models have adopted Mixture of Experts (MoE) architectures, which make apples-to-apples comparisons hard: a traditional “dense” model uses all of its parameters for every token, while an MoE model activates only a subset of its parameters (a few “experts”) for each token. Additionally, many model providers, like OpenAI, have explicitly stopped publishing the sizes of their models.
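For readers who want the “total vs. active parameters” distinction made concrete, here is a minimal sketch of the bookkeeping for a hypothetical MoE configuration. The `MoEConfig` class and every number in it are made up for illustration and don’t describe any real model; it also simplifies by treating each expert’s size as summed across all MoE layers and by assuming the router picks a fixed top-k experts per token.

```python
"""Hypothetical MoE parameter accounting: total vs. active parameters.

All numbers are illustrative only; they do not describe any real model.
"""
from dataclasses import dataclass


@dataclass
class MoEConfig:
    shared_params: int      # parameters every token uses (attention, embeddings, etc.)
    num_experts: int        # experts available in the MoE layers
    params_per_expert: int  # parameters in one expert (simplified: summed over all MoE layers)
    experts_per_token: int  # top-k experts the router activates for each token

    def total_params(self) -> int:
        # Everything that must be stored: shared weights plus every expert.
        return self.shared_params + self.num_experts * self.params_per_expert

    def active_params(self) -> int:
        # What a single token actually passes through: shared weights plus k experts.
        return self.shared_params + self.experts_per_token * self.params_per_expert


def dense_equivalent_label(cfg: MoEConfig) -> str:
    # Human-readable summary in billions of parameters.
    return f"{cfg.total_params() / 1e9:.0f}B total, {cfg.active_params() / 1e9:.0f}B active"
```

With these made-up numbers, `dense_equivalent_label(MoEConfig(10_000_000_000, 64, 5_000_000_000, 2))` gives "330B total, 20B active": the model stores 330B parameters, but each token only passes through 20B, which is why a single “parameter count” can’t be compared directly with a dense model’s.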

Even with all of these caveats, it’s fairly clear that parameter counts have leveled off in the past two years based on publicly disclosed (and estimated) data. But why?

Why They Stopped Growing (As Fast, For Now)

Simply said: performance and cost.

Simply scaling up parameters no longer buys much additional performance on its own. As folks like Nathan Lambert have pointed out, recent performance gains have been driven largely by post-training. Post-training is broader than “fine-tuning” as the term is usually used, but it’s similar in spirit: it takes an already-trained model and applies various techniques to improve it, instead of just making it bigger or feeding it more data.

Post-training has helped keep the cost of models high, because it can be expensive and is often human-labor intensive. RLHF (reinforcement learning from human feedback), for example, literally feeds human judgments back into the model. Post-training can also involve a lot of compute, so a “compute curve” or cost curve might not level off the way parameter counts have.

Nevertheless, high cost or not, that’s still a form of improvement that doesn’t come from just making the model bigger. We have clearly seen models plateau quite a bit in performance… relative to model size. Again, this doesn’t mean we’ve “hit a wall” in performance. It just means that improving it isn’t as easy as naively scaling everything up. In some cases, it might even go the opposite direction, as Devansh covered with a 7M-parameter model (absolutely petite next to models with billions or even trillions of parameters) beating some leading models. Clever architectures, better post-training, and more experimentation give a bigger bang for the buck than a literal “bigger is better.”

(A separate piece I’ll likely write later is about why “all AI will be agentic.” Part of “scaling” performance will simply be doing the work to make AI more practically useful for productivity: making AI do stuff directly, instead of just chatting. That’s already happening with the “agentic AI” boom, a label that will likely be shortened to just “AI” soon. In any case, there are many axes for improving performance that aren’t pure size and thus don’t have the same implications for resource consumption.)

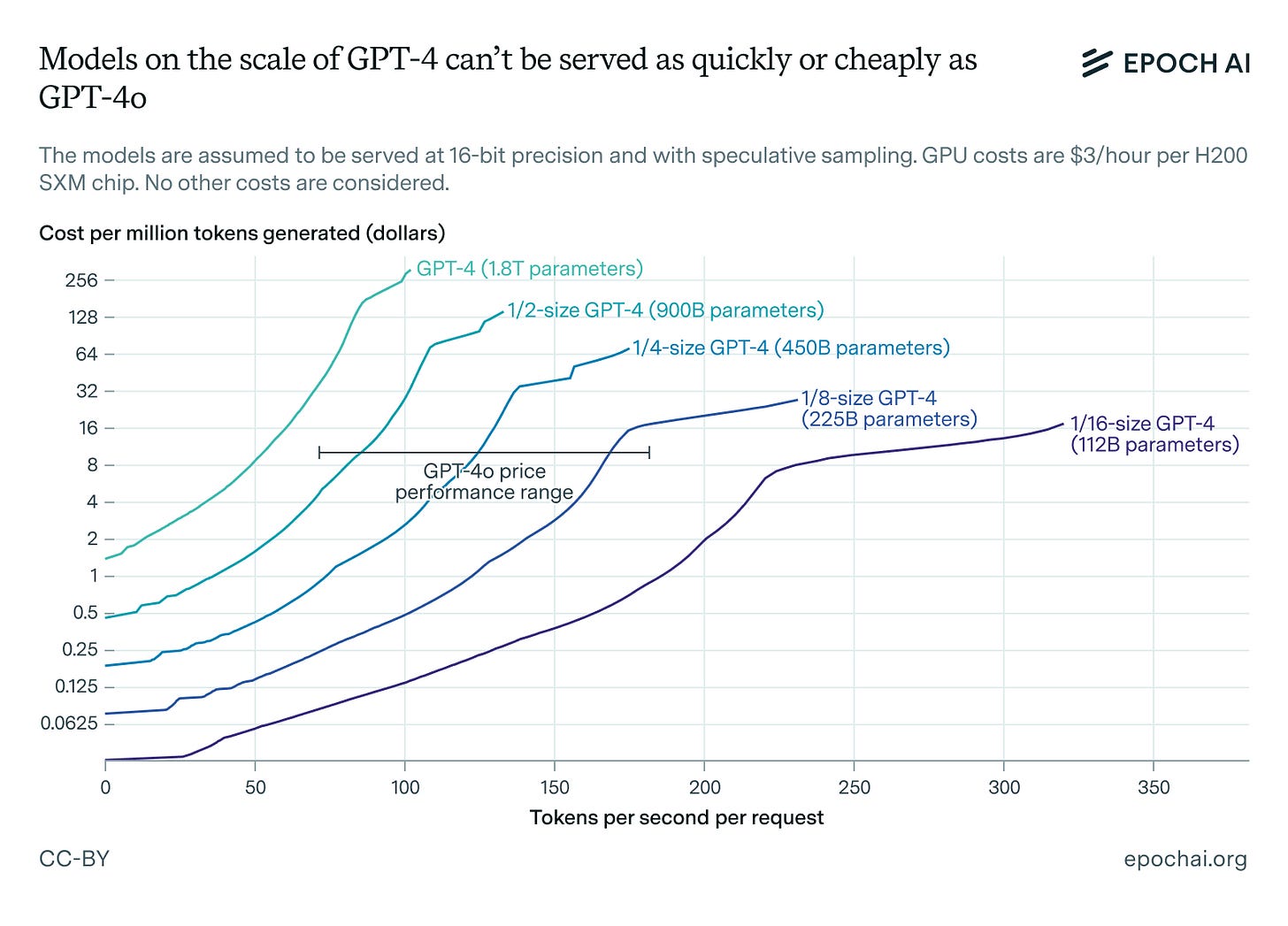

The other, related reason is that as “pure scaling” has gotten less effective, it has become less and less economically viable to approach things that way. Models get more expensive to serve (inference) as they get bigger. In other words, a bigger model generally means a higher cost of inference.

(Obviously, training costs also increase, and by a lot. But training is largely a one-time cost, while inference costs are paid on every chat/query, so they grow with usage.)
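A common back-of-the-envelope rule from the scaling-law literature makes the training-vs-inference point concrete: training costs roughly 6 FLOPs per parameter per training token, while inference (a forward pass only) costs roughly 2 FLOPs per parameter per token. The sketch below assumes those approximations and ignores attention over long contexts, hardware efficiency, and memory bandwidth; for an MoE model, `params` would be the active parameter count.

```python
# Rough compute accounting under the common approximations:
#   training  ~ 6 * N * D FLOPs  (N = parameters, D = training tokens)
#   inference ~ 2 * N FLOPs per token served (forward pass only)
# These are rules of thumb, not exact costs.


def training_flops(params: float, training_tokens: float) -> float:
    # One-time cost: ~6 FLOPs per parameter per training token (forward + backward).
    return 6 * params * training_tokens


def inference_flops(params: float, tokens_served: float) -> float:
    # Recurring cost: ~2 FLOPs per parameter for every token processed or generated.
    return 2 * params * tokens_served


def breakeven_tokens_served(training_tokens: float) -> float:
    # Tokens served at which cumulative inference compute equals training compute:
    # 6*N*D == 2*N*T  =>  T == 3*D, independent of model size N.
    return 3 * training_tokens
```

Two things fall out of this under these approximations: per-token inference cost grows in direct proportion to model size, and once a model has served more than about three times its training tokens, it has spent more compute on inference than on training, regardless of how big it is.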

This is partly why OpenAI built a router into GPT-5 that decides which model each query goes to. Even after paying for that extra layer, OpenAI can save on costs by sending easy queries to a smaller model.
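The economics of a router can be sketched with a simple expected-value calculation. This is not how OpenAI’s GPT-5 router actually works (those details aren’t public); the function names and per-query costs below are hypothetical, in arbitrary units.

```python
def expected_cost_per_query(
    share_easy: float,   # fraction of queries the router sends to the small model
    cost_small: float,   # cost of answering one query with the small model
    cost_large: float,   # cost of answering one query with the large model
    cost_router: float,  # overhead of the routing decision, paid on every query
) -> float:
    if not 0.0 <= share_easy <= 1.0:
        raise ValueError("share_easy must be between 0 and 1")
    # Every query pays the router; then it goes to either the small or the large model.
    return cost_router + share_easy * cost_small + (1 - share_easy) * cost_large


def routing_pays_off(
    share_easy: float, cost_small: float, cost_large: float, cost_router: float
) -> bool:
    # Routing beats "send everything to the large model" only if the savings on
    # diverted queries outweigh the per-query routing overhead.
    return expected_cost_per_query(share_easy, cost_small, cost_large, cost_router) < cost_large
```

For example, if 75% of queries can go to a small model costing 1 unit, the rest go to a large model costing 10, and routing costs 0.25 per query, the average drops from 10 to 3.5. If no queries qualify as easy, the router is pure overhead and `routing_pays_off` returns `False`.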

So why bother scaling up a model when training costs balloon exponentially, inference costs are higher (even if they’re dropping rapidly with improving hardware), and you don’t really get many benefits from it?

You wouldn’t. And, mostly, model companies haven’t.

This won’t necessarily hold forever. As interconnects, memory, and other technologies improve and make training cheaper, the economics could become reasonable again.

Basically, even if bigger models only deliver fairly marginal performance gains, the cost curve could bend down enough later that it’s worth making models bigger again. Years ago, after all, 1 MB of system memory was huge (and extremely expensive). Nowadays, the browser tab you’re reading this in probably uses more than that.

Mistaken Assumptions

Those of you closely following AI have likely been fairly bored by this piece so far. After all, all of this is obvious… and has been for years, which is an eternity in AI development.

AI isn’t scaling the way normies think it is. At the same time, the power grid has a lot more ability to scale than most AI experts think.

After all, just as most people aren’t AI experts and so don’t understand these “obvious truths” about AI scaling, AI experts aren’t experts on the power grid… despite everyone moonlighting as one now.

The Real World is S-Curves

A good baseline assumption about the real world is that exponential curves never continue forever.

World population growth has slowed dramatically. Carbon emissions did not grow the way we feared they would in the early aughts. Even “peak oil,” the idea that oil prices would skyrocket without limit (which all the headlines blared in my youth), was totally wrong.

This is related to my piece on shortages. Ecosystems in the real world adapt. All exponential curves are actually S-curves; you just can’t necessarily see the bend while you’re still on the exponential portion.
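To see why the bend is invisible early on, compare an exponential curve with a logistic (S-shaped) curve that starts at the same value and grows at the same initial rate but is capped at some capacity `K`. The parameters below are hypothetical and not fitted to any real data; the point is only the shape.

```python
import math


def exponential(t: float, x0: float, r: float) -> float:
    # Unbounded growth: x(t) = x0 * e^(r t)
    return x0 * math.exp(r * t)


def logistic(t: float, x0: float, r: float, capacity: float) -> float:
    # S-curve with the same starting value and early growth rate, capped at `capacity`:
    # x(t) = K / (1 + ((K - x0) / x0) * e^(-r t))
    return capacity / (1 + ((capacity - x0) / x0) * math.exp(-r * t))


if __name__ == "__main__":
    x0, r, capacity = 1.0, 0.5, 1000.0  # hypothetical parameters, not fitted to any real data
    for t in range(0, 31, 5):
        exp_val = exponential(t, x0, r)
        log_val = logistic(t, x0, r, capacity)
        print(f"t={t:>2}  exponential={exp_val:>14,.1f}  logistic={log_val:>8,.1f}")
```

For the first few steps the two columns are nearly indistinguishable; by t=30 the exponential has passed three million while the logistic curve sits just under its capacity of 1,000. Anyone extrapolating from the early data alone would have no way to tell which curve they were on.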

And long before we hit literal limits, systems adapt. In ecology, resources get scarcer; in markets, price mechanisms send signals to adapt.

In any case, because these are S-curves, people projecting infinite growth (or infinite earnings in the market) are probably wrong. This also applies to power: are people really thinking about it correctly? Are we really not going to be able to meet AI power demand in our lifetime, as Apollo says?

History alone suggests we should assume we will.

In fact, the apocalyptic articles claiming AI is already sending power prices skyrocketing… don’t actually reflect the data. Inflation has made everything more expensive, faster, but we haven’t seen power prices, in particular, skyrocket.

More measured, data-driven takes generally say prices will skyrocket (even if they haven’t yet)… but will they, actually?

Not to leave this on a cliffhanger, but there’s pretty good evidence, beyond just S-curves, that it won’t be that bad. We’ll have a special guest post on this topic from an energy expert a bit later. For now, this piece sets up why the general population has misconceptions about AI. The next post will cover why the general population (and AI experts) have misconceptions about power.

Thanks for reading!

I hope you enjoyed this article. If you’d like to learn more about AI’s past, present, and future in an easy-to-understand way, I’ve published a book titled What You Need to Know About AI.

You can learn more and order it on Amazon, Barnes and Noble, or Bookshop (indie booksellers).