The Boring Phase of AI

Advancements Will Become Less Visible—But More Impactful

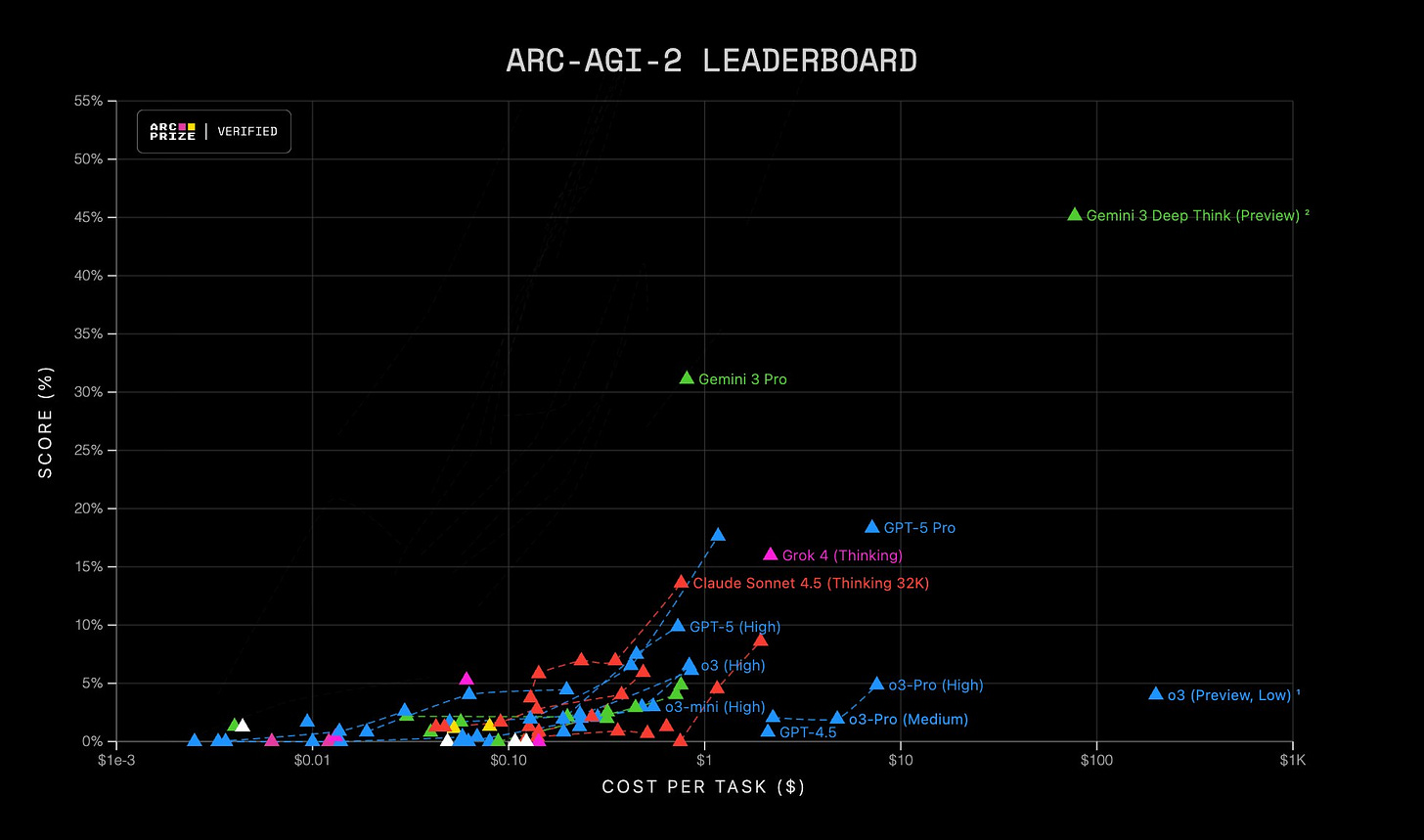

Gemini 3 caused Sam Altman at OpenAI to declare a “Code Red.” This is likely the most significant model release ever, with off-the-charts benchmark performance—until at least the next model release.

While commentators liberally spill ink about how important this is and cover the juicy drama (Sam Altman is world-class at generating PR), Gemini 3’s real-world impact is fairly muted. It doesn’t suddenly one-shot complex coding problems. Its ability to do “Deep Research” is not night-and-day different from GPT-5.1’s (it actually hallucinates much more than OpenAI’s offerings). It’s better at benchmarks, for whatever that’s worth, and it maybe feels somewhat better, but it certainly doesn’t feel like a revolution in day-to-day use.

It’s likely most releases from this point onwards will feel this way, punctuated by the occasional breakthrough that will quickly diffuse to the other model companies/labs. This was an article I was already planning to write even before Gemini 3’s release. And its release doesn’t change my opinion at all.

When We Hit Diminishing Returns

I will say one thing about Gemini 3 that’s significant: while we don’t know how big it actually is, reporting and extrapolation suggest that it may be the largest pre-trained model ever. Just last month I wrote that models aren’t exponentially growing in size (and, if nothing else, size isn’t leading to massive performance improvements). It’s hard to do any concrete analysis when we don’t actually know how big it is, but I suspect that the “diminishing returns” aspect of size-scaling holds.

That said, OpenAI’s “Code Red” may still push it to throw bigger pre-training runs and models at the wall, even if doing so isn’t efficient, cost-effective, or financially prudent.

Nonetheless, this kind of scaling isn’t going to make a night-and-day difference. The bigger question is what these model companies will do to make themselves more relevant.

Business Models and Real-World Impact

One of my predictions is that the word “agentic” to describe AI will go away. Eventually, all AI will be agentic. Why wouldn’t it be? The real promise of AI isn’t that it will get better and better at chatting with us and summarizing articles—it’s that it’ll go and do stuff for us.

In a way, China is far ahead in this aspect:

The real split is over where each country’s tech companies believe the profit will land. China bets on applications; America bets on the model itself.

- Grace Shao in “China and the US are Running a Different AI Race”

For example, MiniMax recently also seized headlines due to its M2 model release and various rumors about a Hong Kong IPO ($4B in public reporting, though I’ve heard higher figures privately as well). It has Talkie, an AI companion app with subscriptions, microtransactions, etc. It threw Hailuo AI at the wall for image generation and has tried to push it into various applications.

There’s also been far more AI rollout in online shopping (see Yaling Jiang’s article here), wide (and widely touted) use in the Chinese insurance industry, and even AI-native dramas in entertainment. Aside from these “documented” examples, I’ve heard of all sorts of insane AI things getting rolled out in WeChat, individual cafes, schools, and pretty much every level of society.

US adoption has been concentrated much more in coding and in building on API calls to companies like OpenAI and Anthropic. Ironically, even in the US, open-weight, permissively licensed Chinese models are becoming more popular among those fine-tuning models or creating “GPT-wrappers.”

“Agentic AI” Will Just Become “AI”

Every major model maker has various agentic products (Claude Code, Codex by OpenAI, and Antigravity by Google, just to name the coding agents). Although I literally just named coding as one of the main things happening in US adoption, coding agents are still a good example of things to come.

Specifically, AI that does things for us, whether it’s researching a vacation and then booking tickets, or managing our calendars autonomously, or monitoring our home temperature and managing it based on things it asks us…

Or on the enterprise side, monitoring and helping decide how to scale out (or back) cloud infrastructure, triaging and managing customer service requests, or automatically surfacing important business metrics…

All of these kinds of applications are within reach of our current AI, but they need to be “productized” to get there. As gains from pure model performance start running out, this is likely going to be the more interesting competitive landscape (along with differentiated models, like Google’s Nano Banana, which is honestly much better than its competitors in image generation).

If anything, this is the “risk” to “GPT-wrapper” companies. As the model companies pivot to getting things done, rather than having better benchmarks, there’s a danger that many of the companies trying to do these things will get Sherlock’ed.

All this will be far less visible and flashy than what we’ve seen so far, which has been the heady, exponential growth of an early technology with a lot of low-hanging fruit. However, I’d argue that branching out from just better models (on paper) into doing things will ultimately be much more impactful than any single big, flashy model release.

In a way, you can see it as similar to how the Internet evolved. Everything getting a website wasn’t the real revolution. The real revolution was the far more interesting business models that were later built on top of it.

A Side Note on US and China

Ironically, in the past few days, I’ve said to a few different people who’ve asked about the Chinese model companies that their valuations are still based mainly on potential, while OpenAI and Anthropic have “real businesses.”

I stand by that statement, even though it probably sounds weird juxtaposed with what I literally just wrote about applications a little earlier in this very article.

MiniMax, Moonshot, Zhipu, and more are all throwing stuff at the wall. My understanding, both from public info and from private conversations, is that nothing has really taken off in a big enough way to brag about or to back their valuations (if you have different info, please let me know!).

I expect something will catch fire eventually (experimentation is how you find out), but they’re still searching for “real businesses.” On the other hand, Anthropic is pursuing an enterprise business/API strategy that by all accounts is growing, and OpenAI obviously has its subscription business, which is reportedly set to hit $20B of annualized revenue this year.

These are “real businesses,” with multi-billions in revenue that aren’t going to just go away. Still, relative to the amount of investment going into them, one needs to ask what the “rest of it” is. These companies have gotten impressively far, but to grow into their valuations, they’ll need to show more measurable impact.

OpenAI kind of half-heartedly put out a shopping research tool, though the idea is good. In the midst of their “Code Red,” OpenAI apparently is also deprioritizing their efforts to create an ad-supported model—which ultimately would be the real replacement threat to Google.

Even if they do it in fits and starts, these companies will get there. Google, after all, has embedded Gemini everywhere, even if you really don’t want it to do stuff for you in Google Slides or Docs.

Thanks for reading!

I hope you enjoyed this article. If you’d like to learn more about AI’s past, present, and future in an easy-to-understand way, I’ve published a book titled What You Need to Know About AI.
