This is the fifth interview I’m publishing in my series of expert conversations for my upcoming book, What You Need to Know About AI. You can now get a sneak peek of the book’s introduction and the first chapter here!

Today, I talk with Brandon Basso about the challenges and breakthroughs in autonomous vehicles—particularly the critical but often overlooked role of simulation.

Brandon’s background spans drones, robotics, and self-driving cars. He worked previously at 3D Robotics and Uber ATG and currently leads simulation and evaluation at Cruise (recently acquired by General Motors). We cover everything from safety standards and regulatory partnerships to why variation and coverage in simulation are some of the toughest problems in AI today.

You can read the full transcript below or listen to the conversation on your preferred podcast platform.

You can also check out some of the previous interviews I’ve published:

Dr. Joshua Reicher and Dr. Michael Muelly, Co-Founders of IMVARIA, about the use of AI in clinical practice

Tomide Adesanmi, Co-Founder and CEO of CircuitMind, about the use of AI in electronic hardware design.

Alex Campbell, Founder and CEO of Rose.ai, about the use of AI in high finance.

Devansh, Co-Founder and Head of AI at Iqidis and author of the Substack Artificial Intelligence Made Simple on how AI could impact law firms.

If you’d like to get notified when the book is published later this year, you can sign up below and even get a sneak preview of the first chapter!

Brandon Basso is the Senior Director of Simulation at Cruise. He formerly led AI and Robotics teams in behavior planning and control at both Cruise and Uber ATG self-driving car programs. His work at Cruise enabled one of the world's first commercial deployments of self-driving cars in a major US city. Prior to working on self-driving cars, Brandon was the VP of Engineering at 3D Robotics, leading the hardware and software development of the first AI-powered commercial drone.

Brandon’s PhD research focused on developing a novel reinforcement learning framework using Semi-Markov Decision Processes for solving complex, multi-agent routing and scheduling problems. This work enabled scalable, "variably autonomous" systems that learn from both simulation and human feedback (reinforcement) to navigate stochastic environments and adapt to dynamic tasks.

Topics Discussed

First Projects and Early Inspiration

Current State of AV Deployment

Can We Trust Deep Learning Models for AVs?

Moral Dilemmas and Regulatory Frameworks

Hard Technical Problems in AV Deployment

Variation and Corner Cases

The Simulation Frontier

Edge vs Centralized Compute

Misconceptions About AVs

AVs and the Future of Work

🎧 Prefer to listen or watch?

You can listen to this conversation as a podcast or watch the full interview on YouTube here.

First Projects and Early Inspiration

James: Great. It is awesome to have you, Brandon. It's been a while. I think it'd be great to just start out with some of your background and history, especially since you have such a fascinating one with the drones and self-driving cars.

Brandon: Yeah, absolutely. Thanks so much for having me, James. It's a thrill to be here.

When you and I met in grad school, I was working as part of this group called the Vehicle Dynamics Lab and doing a bunch of different projects having to do with unmanned aerial vehicles and controlling groups of them. Interestingly, at that time autonomous cars weren’t the hot topic; they were the ones off to the side.

Later, at 3D Robotics, I got to build both enterprise and consumer drones, leveraging my background in control theory and estimation. As autonomous vehicles became more prominent, I moved to Uber Advanced Technologies Group and then to Cruise, where I now lead simulation and evaluation.

I think this is one of the hardest last frontier challenges for autonomous driving and for AI in general.

How do you evaluate if it's good or correct or safe or what those things even mean in the first place? How do you do it at scale, and how do you do it when you're shipping a production software system on the road?

Those challenges are ones that are also reflected within the generative AI space. The difference is that what a car does has a tangible, real-world impact. Much more so than a string of text.

I remember taking a class with the classic mechatronics thing where you floated a ball bearing and wrote the microcontroller for it.

The connection of math to the real world was just super fascinating for me. From there it was on to whatever vehicle or robot I could get my hands on to go work on and control.

I also got to do a really interesting senior design project. It was something that was part of a lot of mechanical engineering curricula at the time: building a small robotic water strider. It’s one of those little bugs that sit on water. I got to both machine it by hand and then work on the little control system that moved it around. It was a super lightweight robot too, which was cool.

It’s really interesting, again, to use math to handle problems in the real world. I think we’re at a very interesting inflection point for that as well.

Current State of AV Deployment

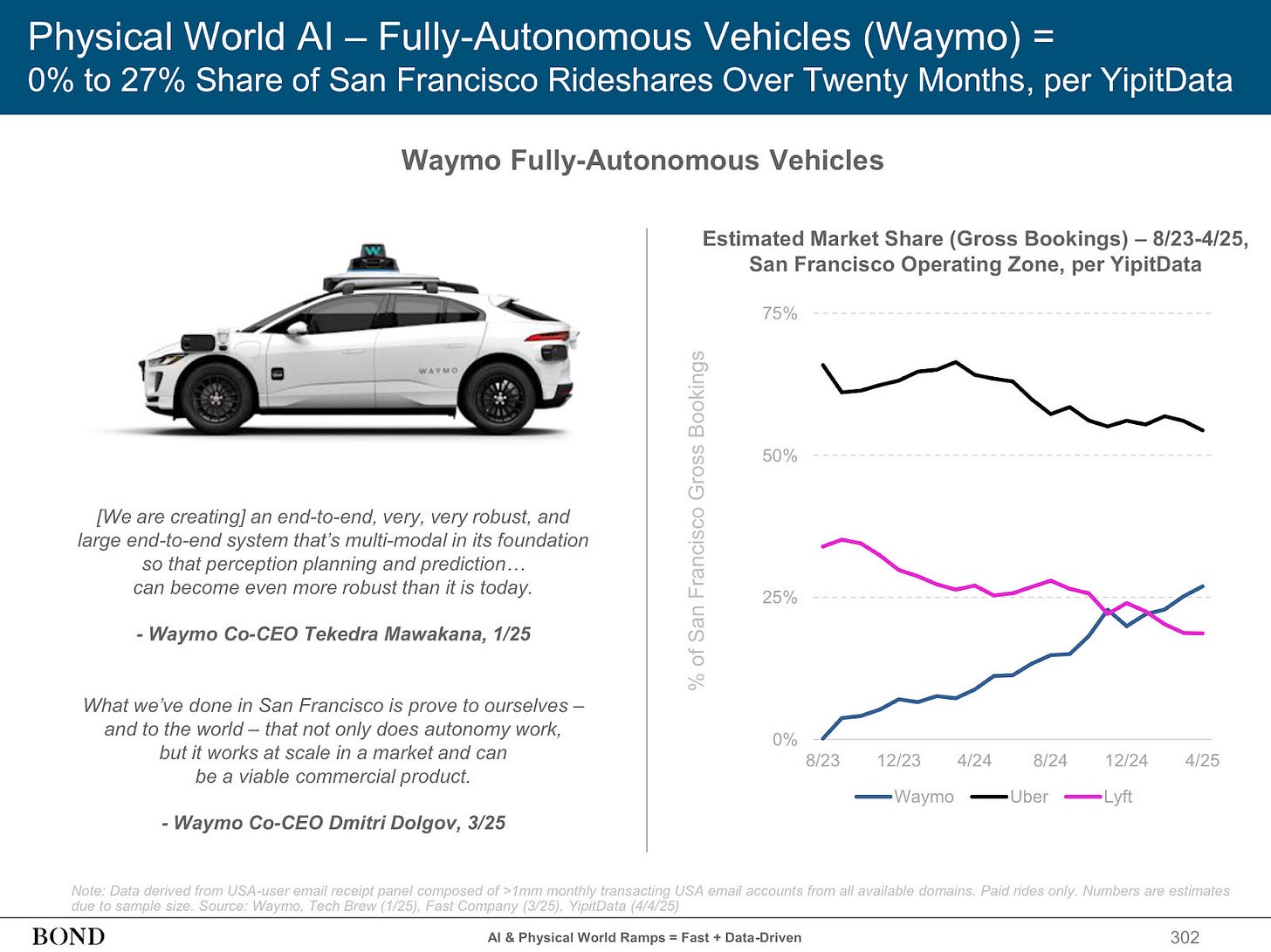

Brandon: For anyone who lives in San Francisco, it's impossible to not see autonomous cars being driven around. There’s been amazing progress that companies like Waymo have made recently, but there’s also the incredible scale of Tesla with full self-driving.

Yes, it's taken a long time to get here—and yes, there's still a long way to go. I can get into what I think the challenges are there in a second. But it's important to note that today, I can walk outside my office and get in a self-driving car and take it to basically wherever, and it will work exactly like an Uber in many ways.

That's a huge testament to everyone who worked in the industry going on back to the original DARPA Grand Challenge people.

I think there were several unlocks in the industry that got us to where we're at today. Early on, a big unlock was hardware. Sensing hardware, in particular, became more commoditized, and we were able to buy it from Tier-1 suppliers.

If you were to start a self-driving car company today—an autonomous vehicle company—you'd probably buy the vast majority of compute hardware and sensing hardware off-the-shelf.

That is very different from how it was when I started in the industry, and especially different from the DARPA Grand Challenge days—where almost everything was completely bespoke. So hardware was a big unlock.

Obviously another big unlock was just AI. Computer vision, of course, early on, but deep learning techniques and moving away from rules-based systems were significant. I think what a lot of companies found was that their rules-based systems worked well up to a point.

There is an interesting thing that happens when you drive more and more, and you push those systems to their limit.

Because they're performing better and better and better, you see fewer examples. And because you see fewer examples, you have less feedback to drive improvements directly back into the system. Getting there, though, was another big unlock.

This is really glossing over tons of other achievements that happened in really boring stuff—like dev tools and build systems and all these things.

The amazing thing about self-driving car companies is they are research labs where you're really doing cutting-edge research—but you're gluing that together with an actual production software system that's shipping code. That just requires a massive amount of infrastructure to work.

And some of the most impressive parts of the industry and at Cruise are really just dev tools, build systems, and software frameworks. Maybe it isn’t as much in the forefront as the stuff driving the actual car, but it’s still hugely important.

Can We Trust Deep Learning Models for AVs?

James: So I've been seeing both kinds of arguments out there. It's like it's deep learning. It's a black box. We can never know how truly safe it is. And this is a more dangerous area than, say, a Netflix recommendation or a chat thing. But at the same time, it's so much safer than humans. Maybe regulation is just getting in the way. I guess just throwing those two contrasting points out, like, what are your opinions and thoughts on that?

Brandon: Yeah, so those are two related points that are often wrongly conflated by the media. Sure, they’re related, but not the same. One concept is how can you verify an AI system—a system of systems—or a real-time system? How do you prove any properties of it? Secondly, what is the safety of the end product from a metrics or evaluation point of view? I think about both of these a lot because at least half of my job is evaluation.

On the first question, I don’t agree that you can’t evaluate models. Anyone who works on ML models knows that you can use all sorts of rich tools to see exactly what is happening with each weight in a network. Even for an end-to-end model, you can still design it in such a way that you can introspect and measure certain things.

Now those signals might not have as tangible an interpretation as something like an analytical control system—where you can directly measure Kalman gain or whatever.

I do think there are legitimate concerns about a complex system if it’s deployed and just sent out to people without a thorough evaluation. There definitely could be problems there. But that’s not due to a lack of evaluation techniques. That’s just a lack of rigor. Evaluation is a hard problem, but it’s not unsolvable.

Ok, so that’s on the evaluation of models.

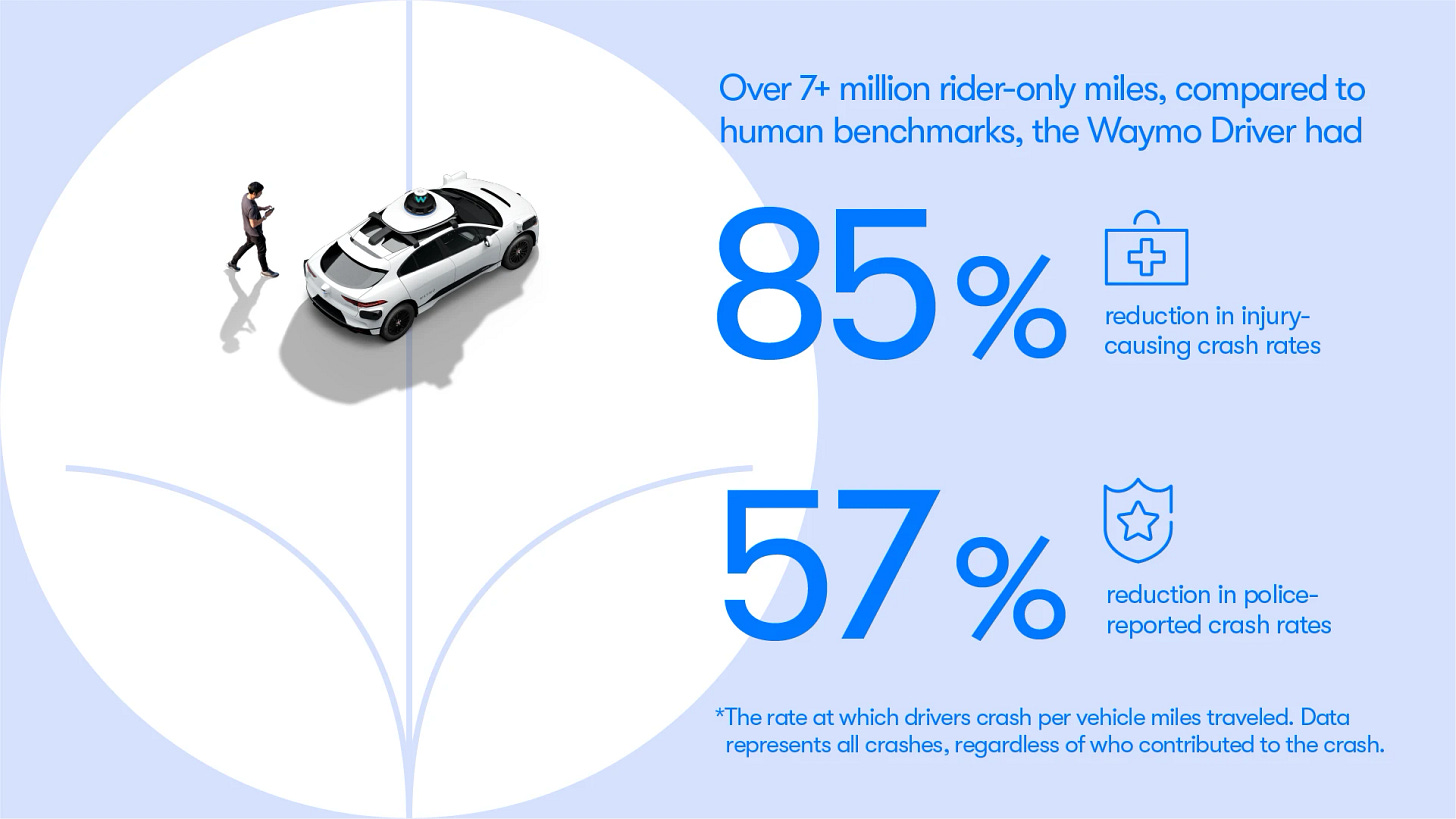

On the safety of these systems, Waymo and many other groups have published statistics on this. I think it’s hard to deny that lots of these deployed systems, especially the current robotaxi fleets, are safer than humans on average. I think that’s very clear.

But what if you’re visually impaired and need to pull over? What if you need to get out, but there’s a big curb or it’s unsafe to do so? Those are very hard problems to solve. There are other cases like that where it isn’t strictly about collisions. There are things beyond mere safety—like comfort—that are safety adjacent and that are much harder to measure and assess.

Now, I think that it’s my view as a user of the technology and my understanding of the statistics that, in many cases, they are better than average humans. The question is, what’s the bar? Is it to produce an average human driver? My interpretation is no, absolutely not. It must be clearly much better—call it 10X better. And it needs very strong data to back it up.

In other sets of circumstances, you can have a looser bar. Maybe the robot car does things that are unintuitive—but not necessarily unsafe. And there is a class of things that you absolutely do not want the robot car to do. There is a set of things that most people would look at and call inexcusable, even if a human did it, or maybe things that a human would never do. Bucketizing the behavior into categories like this is hard.

That’s what companies are trying to do now. What are things we need to do 10X better than humans? What are things that it’s ok to be just as good as a human? And what are things that can just never happen at all?

That’s where I think we are at in evaluation. Long story short, I think it’s hard to deny the performance of many of these systems, and they’re only going to get better as you add more data.

Moral Dilemmas and Regulatory Frameworks

James: That gets into a kind of question about what happens in an accident—if there’s a “trolley problem” question the autonomous vehicle is confronted with. Who chooses? Who takes the liability? Who has the sort of moral obligation around it? And when it is not a human driver, how do you suddenly parse these things?

What are your current thoughts on the regulatory environment? What are your thoughts on the current state of self-driving car technology and the legal or even just some of these moralistic issues surrounding it?

Brandon: Well, I have to say, this is starting to get way, way outside of my domain. I think it’s really important, so I’ll just state some basic, functional things.

It’s really important to work closely with regulators in any city or town that these things are deployed in. We need to view them as partners and customers. Because they have their own constituents. We need to present data to regulators or stakeholders like mayors or governors. And they’re a customer, essentially. I think it’s super important that they get treated as such as part of the overall landscape. It’s not just about the person in the car taking the ride. It’s about all of the people outside too.

All of these people need to make the decision about whether or not it’s a good idea to deploy. That’s just my baseline answer. Honestly, I haven’t heard the trolley problem question come up in a while, which I think is a good sign. I think it’s a sign we’ve moved past these sorts of semi-false binary choices. The reality is that the car is engaging in a decision-making process just like a human, in some sense.

They’re weighing a bunch of probabilities of outcomes, weighing their own response time and understanding, and then making a choice. There’s nothing moralistic about that.

And a lot of this is supported by data, at the end of the day. What do human drivers do, and how do they act in certain scenarios? The real difference with a robot car is two-fold.

The first is that it has a perfect understanding of its capabilities versus you and I as drivers. We have an imperfect understanding of our capabilities.

Secondly, it has a far greater understanding of the variables at play than a human driver. What is the maximum braking force or acceleration that it can apply? Whereas you and I don’t necessarily immediately jab on the brakes with a 10G deceleration or whatever.

There’s a much better understanding of the system from the robot car’s side—but does it make the right decision at the end of the day? I think that’s a question for the insurance adjusters.

Like I mentioned back when we were talking about evaluation, one of the really big problems for evaluation is compartmentalizing what can never happen from a regulator’s point of view. That’s why we need those close partnerships with them.

What is acceptable at a certain error rate that we can define, and then what needs to be superhuman—something that is ten, twenty, or a hundred times better than a human?

That's the contract that needs to exist. And from what I read in the news and what I've seen, regulators have been getting a lot more sophisticated about this line of thinking. And it's not no collisions anywhere ever. It's thinking about it in categories and in a more nuanced way.

James: Great. And that makes a lot of sense in terms of hopefully we keep getting a better societal understanding of what our relationship is with a lot of this AI stuff. But I guess in terms of thinking about this, you were talking about there being a lot of hard problems within the self-driving car space. I think a lot of people underestimate that.

Hard Technical Problems in AV Deployment

James: Not to caricature certain people, but some grad students come out, and it's like, “I did SLAM in my grad work! It seems like I should be able to just get a car going around San Francisco and have it be able to figure it out. What's so difficult about that, right?”

So what are the really big challenges in terms of deploying these systems to the real world that you encounter in terms of self-driving cars in this area?

Brandon: Yes, one challenge I mentioned before and think about all the time is evaluation. So, is working on an autonomous system very hard from an architectural, modeling, and data perspective? Absolutely. Super hard. I worked in that area for a while. Huge advancements in the space, though a super hard problem.

That said, we’re starting to see these systems really hockey-stick in performance in all sorts of road conditions. One problem that is still very challenging is what is “good” in the context of these systems? How do we evaluate performance at incredibly high fidelity offline—because having incidents in the real world is expensive and problematic?

Getting it right comes down to a couple of key things. One is, do you have the right data in your test set? Do you have the right coverage? That’s one way to put it.

Measuring coverage is a scientific question, but you need to understand what data you have in your test set and your evaluation set and what you don't. Where you don't have the right data, you need to go generate that data from scratch. And that's one of the big missions of simulation teams: generating those examples to evaluate against.
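As a rough illustration of the coverage question Brandon describes, here is a minimal sketch that buckets a test set by a few scenario attributes and lists the combinations with no examples. The attribute names, values, and the `coverage_gaps` helper are hypothetical and chosen only for illustration; real coverage analysis would involve far richer scenario descriptions.

```python
from itertools import product

# Hypothetical scenario attributes; names and values are illustrative only.
DIMENSIONS = {
    "actor": ["pedestrian", "cyclist", "vehicle"],
    "lighting": ["day", "night"],
    "occluded": [True, False],
}

def coverage_gaps(test_set, dimensions=DIMENSIONS):
    """Return (gaps, coverage): attribute combinations with no example
    in test_set, and the fraction of combinations that are covered."""
    keys = list(dimensions)
    # Every combination that appears at least once in the test set.
    seen = {tuple(scenario[k] for k in keys) for scenario in test_set}
    # Every combination that could appear.
    all_cells = list(product(*(dimensions[k] for k in keys)))
    gaps = [dict(zip(keys, cell)) for cell in all_cells if cell not in seen]
    coverage = 1 - len(gaps) / len(all_cells)
    return gaps, coverage

test_set = [
    {"actor": "pedestrian", "lighting": "day", "occluded": False},
    {"actor": "vehicle", "lighting": "night", "occluded": False},
]

gaps, coverage = coverage_gaps(test_set)
print(f"{len(gaps)} uncovered combinations, coverage = {coverage:.0%}")
```

Each entry in `gaps` is a candidate for the "go generate that data from scratch" step, which is where simulation comes in.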

Once you start generating simulation examples, you bring in a whole host of questions about validity, fidelity, or the realism of those examples. And that's an entirely different science question to go answer.

Once you have the examples, you need to be able to score them. And it needs to be—I think this is actually subtly one of the hardest parts—a high enough signal-to-noise ratio. You need to be able to show it to a developer at the speed that their development cycle works on, which is hourly or maybe daily. It needs to be enough signal that they can then use it. They need to be able to look at it and know what’s going on, not be confronted by a million different potential failures without a clear reason why.

And that's hugely challenging. We've moved way past the point where you just have static test cases that are binary pass/fail. Oh, did you hit this object or not? Of course, that’s a part of it. But we're way beyond that now. Unless you can focus the developers, the actual people working on the autonomy stack, on the actual problems and contextualize those relative to other problems, system-to-road… it’s very hard to do development. If you could solve evaluation, you wouldn’t immediately solve autonomy, but you could make the process go 10, 100, or 1,000 times faster.

Variation and Corner Cases

James: Yeah, totally. And I think this also plays into sort of the other side of it, which is why you need so much evaluation, which is variation. So I've talked to a lot of different startups. I've looked at lots of different things. One of the challenges for many of these different systems is you have the real world.

For some of these, like, you know, kitchen robots or whatever, it's way easier to do back-of-house than front-of-house. Why? Back-of-house, you have some control of it. You can create corridors. You can have some sort of restriction in the environment. Control.

Front of house, who knows? Like, someone could wander in the way, some baby, like, gets loose and runs in front, a dog is there, or something like that. There’s a very similar kind of environment with self-driving cars where all sorts of things could happen on the road. I mean, can you talk a little bit about the thought in terms of variation? How do you actually take all those things into account? Because we're talking about coverage, which, again, is sort of the other side of it in evaluating this. But, yeah, how does variation play into this?

Brandon: Yeah, variation is incredibly important because you only need to see one event in the real world once for it to be a huge problem. And maybe you never saw it before.

And back to this improvement cycle, as cars get better and better, they're just going to see those events less and less. And the chance that you don't have that next problematic event in your data set just gets higher and higher. So it's this very interesting sort of effect. So how do you generate meaningful variation offline?

The hard part of this challenge is… Well, I'll tell you what's not hard. It's not hard to take a simulation system like the one that I work on and say: given a scenario of a car driving down a street and a pedestrian coming out of occlusion, generate a dense sampling with a billion different variations of this scenario along the parameter space of the velocity of the AV, the velocity of the pedestrian, the type of pedestrian, height, clothing, and the time of day...

You can very easily see how you can very densely sample this parameter space. That's not the challenge. The challenge is to give me the two variations in which the car will actually fail or not do well.
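To make the contrast concrete, here is a toy sketch of the "easy" half: sweeping a parameter grid and keeping only the cases that fail. The `stops_in_time` function is a hypothetical stand-in for an autonomy stack (a simple reaction-plus-braking distance model), and the parameter values are made up. In a real system each evaluation is an expensive simulation, which is why brute-force sweeps over a billion variations do not answer the question by themselves.

```python
import itertools

def stops_in_time(av_speed, gap, reaction_s=0.5, decel=6.0):
    """Toy stand-in for an autonomy stack (an assumption, not a real planner).

    Returns True if the car can stop before reaching a pedestrian who
    appears `gap` metres ahead. Stopping distance is the distance covered
    during the reaction time plus the braking distance v^2 / (2a).
    """
    stopping_distance = av_speed * reaction_s + av_speed ** 2 / (2 * decel)
    return stopping_distance <= gap

speeds = [5.0, 10.0, 15.0]  # AV speed in m/s
gaps = [10.0, 20.0, 40.0]   # distance at which the pedestrian appears, in m

# Dense sampling is the easy part: evaluate every combination...
grid = list(itertools.product(speeds, gaps))
# ...the interesting part is the small subset where the system fails.
failures = [(v, g) for v, g in grid if not stops_in_time(v, g)]

print(f"{len(failures)} of {len(grid)} variations fail: {failures}")
```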

So how do you actually discover those? One approach is to just do that evaluation in a very tight, closed-loop fashion with the autonomy system—like an RL-style simulator. That's one way of doing it, where you just generate these billion examples, and you simulate, you train, and you score all in the same loop. That's one.

There are more formal methods for exploring this parameter space and doing things like hazard analysis to discover where the challenges might be. And that might be an important thing to do from a regulatory perspective. Specifically, knowing where you can say definitively: “I've covered the following cases...”

One really interesting way of doing this is to start from an example that did happen in the real world. Maybe that example was not problematic or was merely problematic-adjacent. You can ask a counterfactual about it. Well, what if the person popped out a little bit later? Or can you perturb this scenario in a way that makes it harder for the AV to act correctly?

That sort of perturbation approach, I think, is a nice little cheat code where you start from a scenario that you already know is plausible because it happened in the real world, and you ask the system to densely sample around it.
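The perturbation idea can be sketched in a few lines: start from one logged scenario, jitter its parameters, and rank the variants by how little safety margin they leave. Everything here is an illustrative assumption, including the `safety_margin` model, the jitter ranges, and the logged values; it is not a description of any company's tooling.

```python
import random

def safety_margin(av_speed, gap, reaction_s=0.5, decel=6.0):
    """Toy margin in metres: distance left over after the car stops.
    Negative values mean the car could not stop in time."""
    return gap - (av_speed * reaction_s + av_speed ** 2 / (2 * decel))

def perturb(scenario, rng, n=200, speed_jitter=2.0, gap_jitter=5.0):
    """Yield n variants sampled uniformly around a logged scenario."""
    for _ in range(n):
        yield {
            "av_speed": max(0.0, scenario["av_speed"] + rng.uniform(-speed_jitter, speed_jitter)),
            "gap": max(0.0, scenario["gap"] + rng.uniform(-gap_jitter, gap_jitter)),
        }

# A hypothetical logged event that was fine in the real world.
logged = {"av_speed": 10.0, "gap": 16.0}

rng = random.Random(0)  # fixed seed so the run is repeatable
variants = sorted(perturb(logged, rng), key=lambda s: safety_margin(**s))
hardest = variants[:5]  # the counterfactuals with the least margin

for s in hardest:
    print(f"speed={s['av_speed']:.1f} m/s, gap={s['gap']:.1f} m, "
          f"margin={safety_margin(**s):.2f} m")
```

Because every variant stays close to something that actually happened, the hardest cases remain plausible, which is the appeal of starting from real data.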

So variations are incredibly important. It's not hard to generate a lot of them. It's just very hard to find the meaningful ones. And there are a couple of different techniques that you can use. One technique that I think is not used enough is just looking at examples that are happening out there.

There are examples generated every single day from human-driven cars or other autonomous vehicles from other countries. There's so much driving happening in the world right now. And the data capture from that is across every car that's on the road. So if there's some way of capturing that, I think that that's also a very useful signal.

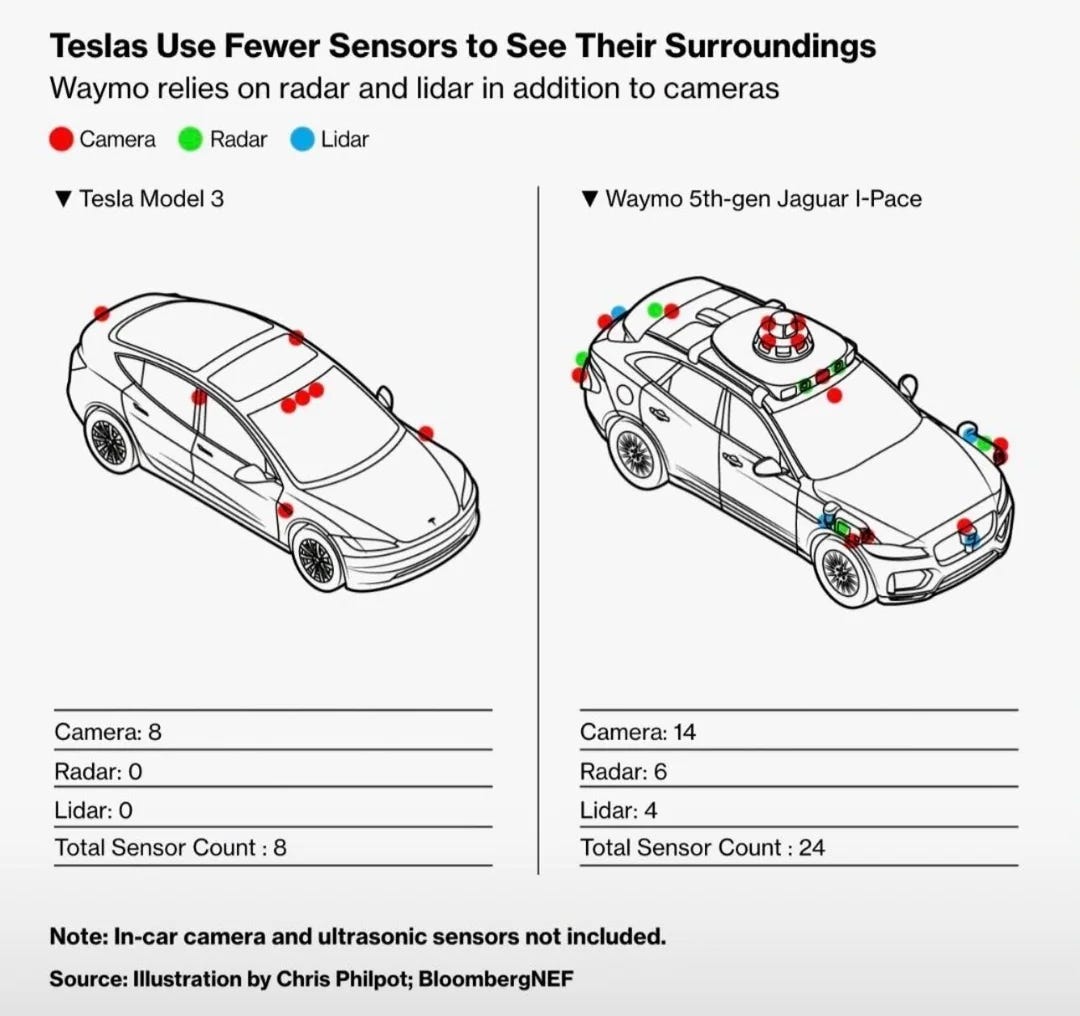

James: So, there are a lot of different ways of approaching autonomy. HD maps, LIDAR, pure RGB like Tesla… What do you think of a lot of those different methods? How do you think through the problem? What is the appropriate modality of sensing? How do you actually work through some of those things?

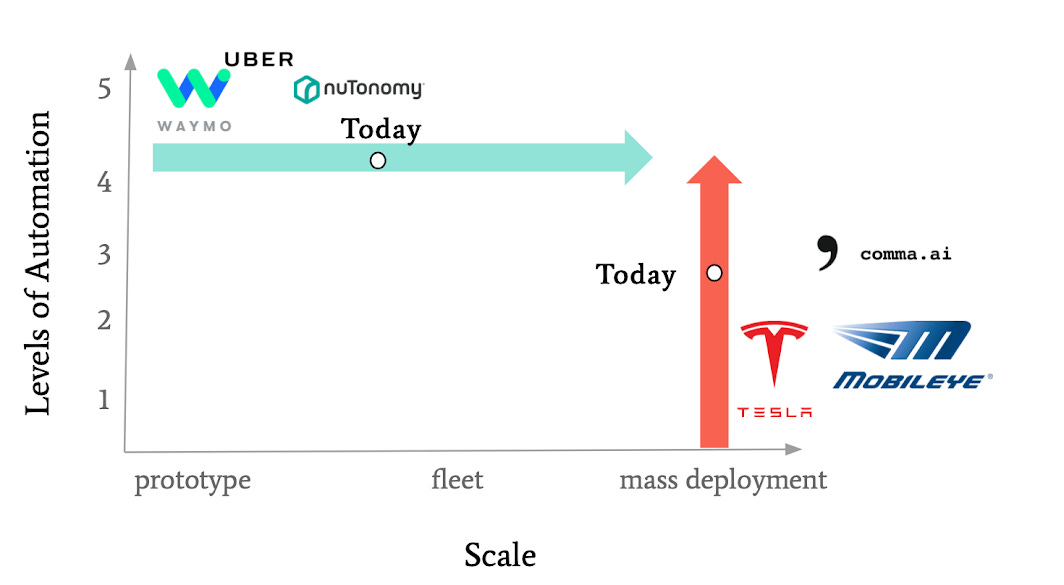

Brandon: Yeah, I think that all autonomous vehicle companies basically live in a 2D grid in terms of their approaches.

On one axis in the grid is capability, very broadly speaking, and the other axis is the cost of the system.

The bet of a company like Tesla is that they can start from a very, very inexpensive system and drive the capability up over time. One key element to that approach is massive data capture and data scale.

On the other end of the spectrum, you have companies making the opposite bet—which is a fleet-style bet where you can just deploy this to a large fleet. They start from an extremely expensive system and then cost it down over successive hardware iterations.

I know the media likes to contrast these two. At the end of the day, it’s just a cost-vs-capability tradeoff. Do you want a low BOM cost that you can afford to put on a ton of vehicles, but with limited capabilities… or do you start with a very expensive system, knowing that you can’t make a business out of it without reaching certain cost efficiencies? These are business decisions.

In the early days, we kind of just needed to make a bet. I think the jury is still out on this. You see Waymo and other companies investing huge amounts of money in their approach and doing incredibly well. You also see Tesla and all sorts of competitors from China now investing in that style of approach and doing incredibly well as well. The answer as to which “wins” is not clear to me.

I’m sure that’s a very unsatisfying answer, but I think that’s all wrapped up in this 2D capabilities-vs.-cost tradeoff.

The Simulation Frontier

James: Let’s talk simulation. Even a lot of the LLMs and frontier models are now using different RL-type loops, simulation, synthetic data, and other things to help drive performance higher. But one of the questions that people have is like, how far can you take this method? How good is the synthetic data? And also, just harkening back to the Nature paper that talked about this, will you just get model collapse and slop everywhere if you just keep going down this path?

Brandon: Yeah, I think you could take it really far. But also I have a vested interest in saying that. I was actually just reading a paper about some advances in RL simulation. I don't think that we've seen the benefits of this technology yet on the road, but from what I've seen, I think there are a couple of amazing things that can happen via using these techniques.

One is you really speed up the developer loop, which just means you have more shots on goal to get it right—that's a very practical thing. Two is you're able to generalize from one set of behaviors that the car has to another set of behaviors in a whole variety of different methods in a very robust way.

And then the other thing, not really specifically related to simulation but worth mentioning—it's really interesting seeing what's happening with research on distilling large models into smaller models that still have incredibly high performance. I think that's a really useful insight.

I imagine that type of insight is going to drive a lot of the work that's going to happen in the future, where maybe that large model never goes on the car, but future versions of it do. Or you have distilled versions of those that go on autonomous vehicles in the future.

All of this stuff is predicated on having a simulator that can scale at different levels. We can start from this very tight inner loop—it’ll be super fast, maybe lower fidelity, but with super fast rollout generation. This can push all the way to a more end-to-end style simulation where you're validating against a bunch of test cases or something like that. How you build those simulators… you can do it a million different ways.

The point is that it’s at the center of all of these different development loops—from the super-fast inner loop to the outer loop, end-to-end use case. It’s incredibly impactful for development—not just validation, which is what it was primarily used for years ago.

Edge vs Centralized Compute

James: And along that line too, just another one of those things I think kind of ended up in the middle… There used to be very strong opinions on whether or not centralized computing or edge computing would be more important and would take over most of the spaces—whether it's self-driving cars or a lot of other things. In reality, I feel like we've seen in a lot of industries much more of a hybrid.

Do you have any thoughts in terms of that and what the future is in terms of more centralized computing, more edge computing, and smaller distilled models locally? Like, how do you think through if someone were to ask you the question about what you see going forward?

Brandon: Ultimately, you can't get around the fact that this is a real-time system. This is one of the paradigms I think that has held true over the past few years that hasn't changed with all the recent technology developments. If you're driving a car down the street, it ultimately needs to respond on the order of maybe tens of milliseconds. What is the comms link that you’re willing to put that over? I can’t think of one—just from a physics point of view.

Now, wouldn't it be great if you had some cloud computer doing that, maybe in some distant universe… Maybe that’s possible due to some telecommunications technology I'm not aware of or that hasn't been invented yet. (James's comment: like an ansible!) But the other thing is it's not like the cost of the computing hardware in the car is necessarily prohibitive. So I don't see a huge imperative to move off-board other than the following: we already know that robotaxi companies, via lots of reporting, have heavily, heavily leaned into remote assistance systems.

So what do those systems look like? It's the vehicle making a call to a human—phone-a-friend—or having a human tell it what to do. Well, you know, why can't that “human” be a model instead? That's one area where I think there could be more centralized off-board compute used to help aid AVs.

And I'm sure that that sort of thing is happening out there already. You know, why not? Show the model a picture of the scene in front of the car and ask, “Where should I pull over?” That sort of thing has a lot of relevance going forward. It makes the user experience better—but it really drives down the operational costs of these companies that spend a huge amount on remote ops.

Misconceptions About AVs

James: Maybe let's talk then about where you see some of the biggest misconceptions about self-driving cars. This is a little bit open, but lots of people have lots of opinions now, especially since it's much more available and people can see it more.

Brandon: Yeah. I'll give you two. I don't know if they're misconceptions so much as they are a lack of understanding or awareness.

One is that self-driving cars are still a distant technology. It just doesn't feel that way to me. Now, I think there are deployment challenges, but every day I see a new launch or a partnership with a new ride-hail company or a new city. And I think we are really in the hockey stick growth moment. Is that a mirage? I don’t think so based on my personal experience getting in the vehicles and seeing them myself. But not many people, relatively speaking, have experienced it. It’s a bunch of people in San Francisco and a few other cities, but I think that’ll change very soon.

The second is… This was probably my biggest misconception, honestly, before I got in these cars. As you know, I worked at Uber for a while. Great company. I love the product and love what it did and how it works and all the ride-hail products. I think they're hugely transformative.

That said, there are downsides to getting into an Uber with a person. Uber and Lyft work very hard to limit those downsides. But the thing that struck me most about getting in my first autonomous vehicle ride in San Francisco was just… how relaxing it was and how much I truly enjoyed it.

And, you know, internally, we had all these concerns, and all sorts of metrics, around how much longer the rides were going to take, because you can't route in exactly the way a human would. Or rides would take longer because you're following the speed limit, and a variety of other things.

In more circumstances than I think people realize, you'll be perfectly okay with some of the cons of a self-driving car. It won't break the law. It will drive slower and more deliberately and maybe take a little bit more time. I think people are going to be completely okay with those because of how much peace of mind it gives you to be in the backseat of something that drives so confidently and so safely—and where you just don't have to interact with another person.

I don't know about you, James, but when I go home at the end of the day or go out with friends—maybe this is just more a product of having two kids now—I don't want to talk to anyone. I just don't. I want to sit in the back of the car and zone out. I want it to not be so jerky that I can look at my phone and not feel sick.

This is really not to take anything away from the amazing work of Uber and Lyft. I am a huge user and fan of the products. But there is something very special and nonintuitive about getting in a car without a driver. I think when people experience it they’re really, really going to like it.

James: Yeah, it makes sense. And I've actually heard a similar sort of thing over and over again from different friends who have come in from out of town, get in a Waymo, and it's like, wow, I don't need to worry about anything. I just chill out and don't need to say hi.

Brandon: Yeah.

AVs and the Future of Work

James: Yeah, which is fascinating in terms of that sort of human dynamic. But I guess more than in any other industry I've covered in these interviews, it seems like once these things get off the ground in a really big way, it would actually take away a lot of the Uber drivers', Lyft drivers', and taxi drivers' jobs. In most of the cases I've talked with people about, whether it's lawyers or doctors or others, that's not the case. It's a productivity tool.

Even in the case of one of my companies that I'm on the board of, which does pizza-making robots… it doesn't actually take anyone's jobs because there are too few people already, and there's only one person in a Domino's that's supposed to have three. But in this case, it does really seem like it's going to take away jobs. Like, what's your impression of it, and what's your thought process on it?

Brandon: I agree with the take from Dara (the CEO of Uber). Will it take away jobs over time? Yeah, probably. We have to be honest about that. It'll be slow, though. It'll happen in certain cities. I think it will also open up new markets that are hard to imagine. I think we’ve learned that the demand for transportation is nearly limitless.

It was impossible to imagine Uber existing prior to the iPhone existing. It will be just as impossible to imagine some of the industries that start up as a result of self-driving cars, industries you could not have had before them. Those are brand-new revenue streams that maybe don't affect the human-driven Ubers as much as we're imagining.

These are all just hypotheses, and who knows? Of course, I totally get and, of course, empathize with the fact that this has the potential to disrupt people's livelihoods. I think you have to be honest about the fact that it probably will in some cases… But what's missed in that sentiment is: what new markets get opened up?

James: Totally. And the funny thing here is I remember seeing a lot of case studies, especially since I had worked in Africa and other places… I'm really excited to see the technology leapfrog.

Those regions didn’t have any landlines but ended up with cell towers and sometimes faster infrastructure than we did because they just skipped the old technology…

Brandon: That's right, no bullet trains or high-capacity buses for us. We're just going straight to autonomous cars and maybe time machines after that. Maybe.

James: What are you excited about coming up in AI, not just in self-driving cars?

Brandon: I've talked enough about self-driving cars already, so I won't bore you with any more of that.

I think, like a lot of people, I'm really excited about the current AI technologies and their chance of real positive impact in our lives. The whole concept of self-driving cars initially was that it would not only be safer but also give us time and space back.

I think automation in general can do that, and I’m excited about it with new AI techniques. I think about myself personally as a manager—how my job might change… be better or different over time using these tools. I’m not too worried about it reducing jobs—though it is possible. Instead, I think about how it can transform jobs so we can focus on higher-order tasks.

I have to say, recently I've been really interested in using these models in spaces like drug discovery. I think that stuff is fascinating. I think it's really relevant within our lifetimes. It's not too different a problem from self-driving cars when you look at the shape of it. Obviously, you have to tread carefully in areas involving drugs, people, etc.

But transportation, energy, food, agriculture… the places where I want to spend my time are the ones that have a real, tangible benefit for humanity. I really like the promise of the current AI techniques in these areas, especially healthcare.

Thanks for reading!

I hope you enjoyed this article. If you’d like to learn more about AI’s past, present, and future in an easy-to-understand way, I’m working on a book titled What You Need to Know About AI that will be published on October 15th.

You can learn more about it and pre-order on Amazon, Barnes and Noble, or Bookshop (indie booksellers).