Who’s Winning the AI War: 2025 (DeepSeek?) Edition

Same fundamentals, new unhinged vibes

The last few weeks have been a roller coaster ride. The latest craze is DeepSeek’s R1 release, which Marc Andreessen called an “AI Sputnik moment” and which one editorial said “destroys the myth that China can only copy the West, it may even be poised to eclipse it…” (We’ll also see what happens now that DeepSeek’s image model, Janus Pro, just dropped…)

Meta, in particular, has been characterized as being in “general panic mode,” based on an anonymous post on Blind and reporting from The Information.

Ironically, I started this piece a week ago with only the Blind post in hand, which I was going to cite as one more example of a general pattern of news-cycle crazes.

Last December, Google was triumphant for all of a week as the latest versions of Gemini topped leaderboards and, notably, their video generation AI, Veo 2, crushed Sora. Then OpenAI announced o3 and seized the hearts and minds of the media (even though, in most ways, it was largely incremental to o1). Google was back, OpenAI was washed, and then it was the other way around, all in the span of less than two weeks.

Then, the $500B OpenAI/SoftBank/MGX Stargate announcement during the opening days of the Trump Administration caused the normally level-headed Ben Thompson to write: “Stargate, The End of Microsoft and OpenAI.”

Who’s on top? What even matters anymore?

Don’t get me wrong. DeepSeek is here to stay, and R1 is genuinely a great step forward, especially since it is open-weight and well-documented with a paper explaining the process of creating it. I’m personally looking forward to running it locally at some point in the future.

Stargate is also an important announcement, assuming it moves forward. At a minimum, in combination with the spate of Executive Orders at the end of the Biden Administration, it indicates that the US is trying to get serious about building stuff for AI.

Neither, however, is a reason to start running around declaring some kind of paradigm shift yet. R1 is great, especially in being open and more efficient, but I fully expect it to be eclipsed by some other AI announcement before long. It just happens to fit a narrative that the media has fallen in love with: a horse race between the US and China.

OpenAI has also kept o1 and o3 fairly restricted from use (with o3 only having benchmarks available), which left the public more awed by R1’s performance because most people have never played around with a reasoning model. That being said, the restriction was probably because of cost. After all, as noted before, o3 could scale to a remarkable $1k/query. Even with DeepSeek R1’s greater efficiency, the company has started throttling signups, which I expect is partly due to cost/compute reasons (even though the stated reason is “malicious attacks,” which I expect is only partly true).

So who’s actually winning?

I originally started this piece because I was getting messages from people asking me, “What is interesting to invest in?” before this week flooded me instead with, “What do you think of DeepSeek?”

For better or worse, my answer is still largely the same as it was at the beginning of 2024. It’s boring, it doesn’t play into the horse race, but—in reality—the fundamentals have not shifted.

My answer then was: society will win from better AI, but (from an investment perspective) it’s very unlikely that the upstarts, OpenAI included, will be able to win against the big, traditional web companies that start with an almost insurmountable (and growing) advantage.

OpenAI is trying to wow the world with $500B (or maybe just $100B) of spending with Stargate. But this kind of eye-popping number is far less impressive, especially as a theoretical, next to the spending that the big tech companies have been pouring into AI infrastructure.

Alphabet’s total capital expenditures (mostly for compute) were tracking to be $50B in 2024. During the same period, Meta was fairly casually talking about spending $40B in capital for data centers and infrastructure, with their CFO stating that capital spending will increase even more in 2025. On a lark, Google just put a fresh $1B into their competitor Anthropic, which has been more closely associated with Amazon. But why not? That’s chump change next to all of this other spending.

OpenAI reportedly spent $5B renting (vs. buying) servers from Microsoft (discounted!) and $3B on training costs (separately) in 2024. These numbers look enormous until you actually put them next to what the big tech companies already have in compute infrastructure and are continuing to spend.

If compute costs, especially with the rise of inference-time scaling, are an arms race, OpenAI is a small fry. Meta alone spent more in one quarter in 2024 than OpenAI was estimated to spend in all of 2024—and they give their model weights away for free (like DeepSeek, a fact that seems to be lost on the media this week)!
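To make the comparison concrete, here is a rough back-of-the-envelope sketch in Python that uses only the figures quoted above. The even four-quarter split of Meta’s spending is a simplifying assumption rather than a reported number, the variable names are purely illustrative, and capital expenditures are not the same thing as rental and training costs, so treat the ratios as order-of-magnitude only.

```python
# All figures in billions of USD for 2024, as quoted in the text above.
capex_2024 = {
    "Alphabet": 50.0,  # total capital expenditures, "tracking to be $50B"
    "Meta": 40.0,      # capital for data centers and infrastructure
}

# OpenAI's reported compute-related spend: server rental plus training.
openai_2024 = 5.0 + 3.0

# Assumption (not from the text): Meta's spend is spread evenly by quarter.
meta_per_quarter = capex_2024["Meta"] / 4

# How many multiples of OpenAI's spend does each company's capex represent?
multiples = {name: amount / openai_2024 for name, amount in capex_2024.items()}

print(f"OpenAI 2024 (rent + training): ${openai_2024:.0f}B")
print(f"Meta per quarter (even split): ${meta_per_quarter:.0f}B")
for name, multiple in multiples.items():
    print(f"{name} capex is {multiple:.2f}x OpenAI's spend")
```

Under that even-split assumption, a single Meta quarter already exceeds OpenAI's combined rental and training figure for the full year, which is the point of the paragraph above.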

These numbers aren’t perfectly apples-to-apples. Buy vs. rent obviously makes a difference, but it’s also a difference that gets worse for OpenAI over time (even with Microsoft’s discounted rates). While OpenAI may be able to get more compute for their dollar in the short term, higher upfront capital costs will mean lower ongoing costs for all of their competitors. It’s not like we’re “finished” with AI development.

As OpenAI’s early emails show us, the company was started to prevent Google from simply walking away with AI leadership (and AGI) and was a desperate fight against the titan of the industry. That hasn’t changed.

On May 25, 2015, at 9:10 PM, Sam Altman wrote (to Elon Musk):

Been thinking a lot about whether it's possible to stop humanity from developing AI.

I think the answer is almost definitely not.

If it's going to happen anyway, it seems like it would be good for someone other than Google to do it first.

Will the spending curve fundamentally change?

Perhaps DeepSeek is showing us that scrappy labs can win against the titans, despite all of their advantages. If anything, that’s good news for OpenAI.

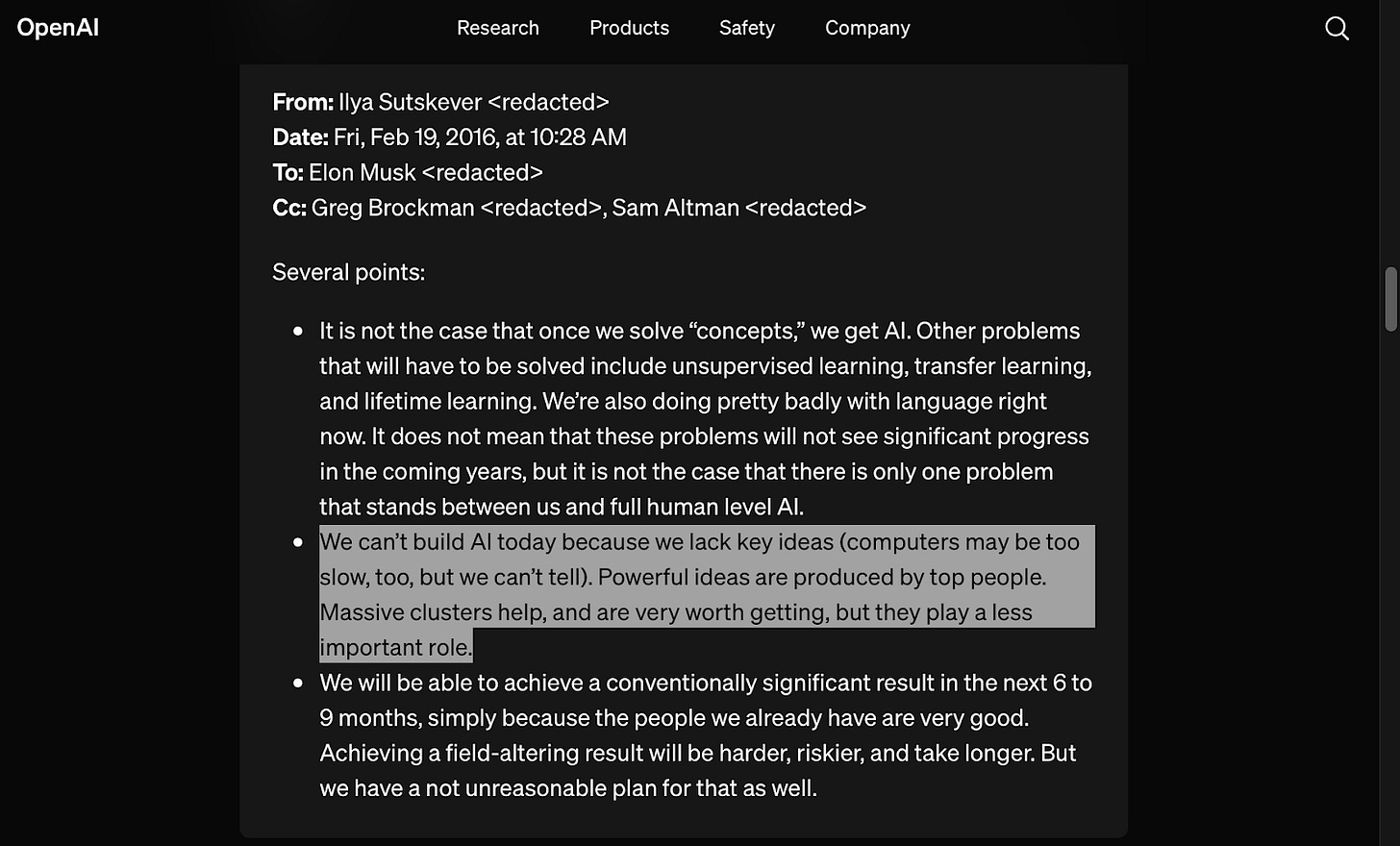

Again, make no mistake, DeepSeek has accomplished a lot—and I called out as much in my 2024 roundup. Additionally, I’ve personally been calling out for years that compute is not everything in AI development.

That being said, compute is still important, and DeepSeek’s numbers are not apples-to-apples with the US companies’ cited numbers. Nathan Lambert has a good piece on it here, but the gist is that the actual costs are probably in a similar ballpark, “all-in.” US companies have an incentive to inflate their numbers (and to stress how difficult and expensive it all is) by including many costs, and DeepSeek (which is trolling western media to some degree, especially with some of their recent public statements) has an incentive to show how scrappy they are by excluding everything.

Compute still matters, especially for experimentation, as per, again, OpenAI’s internal emails years ago.

OpenAI and DeepSeek are both underdogs in this fight.

There is no moat

As the infamous internal memo from Google in 2023 stated, there is no moat in AI. There are models, data, and compute, and everyone has access to roughly the same version of all of them. While this might be positive for startups, the issue is what I stated before: the big companies already have huge amounts of compute infrastructure available and are only adding more.

Compute matters. Obviously, OpenAI and everyone else believe this (whether because of the reasoning models, or just because of the ability to experiment and iterate faster). ChatGPT exploded onto public consciousness first and got an early “mindshare” lead, but that lead just doesn’t matter.

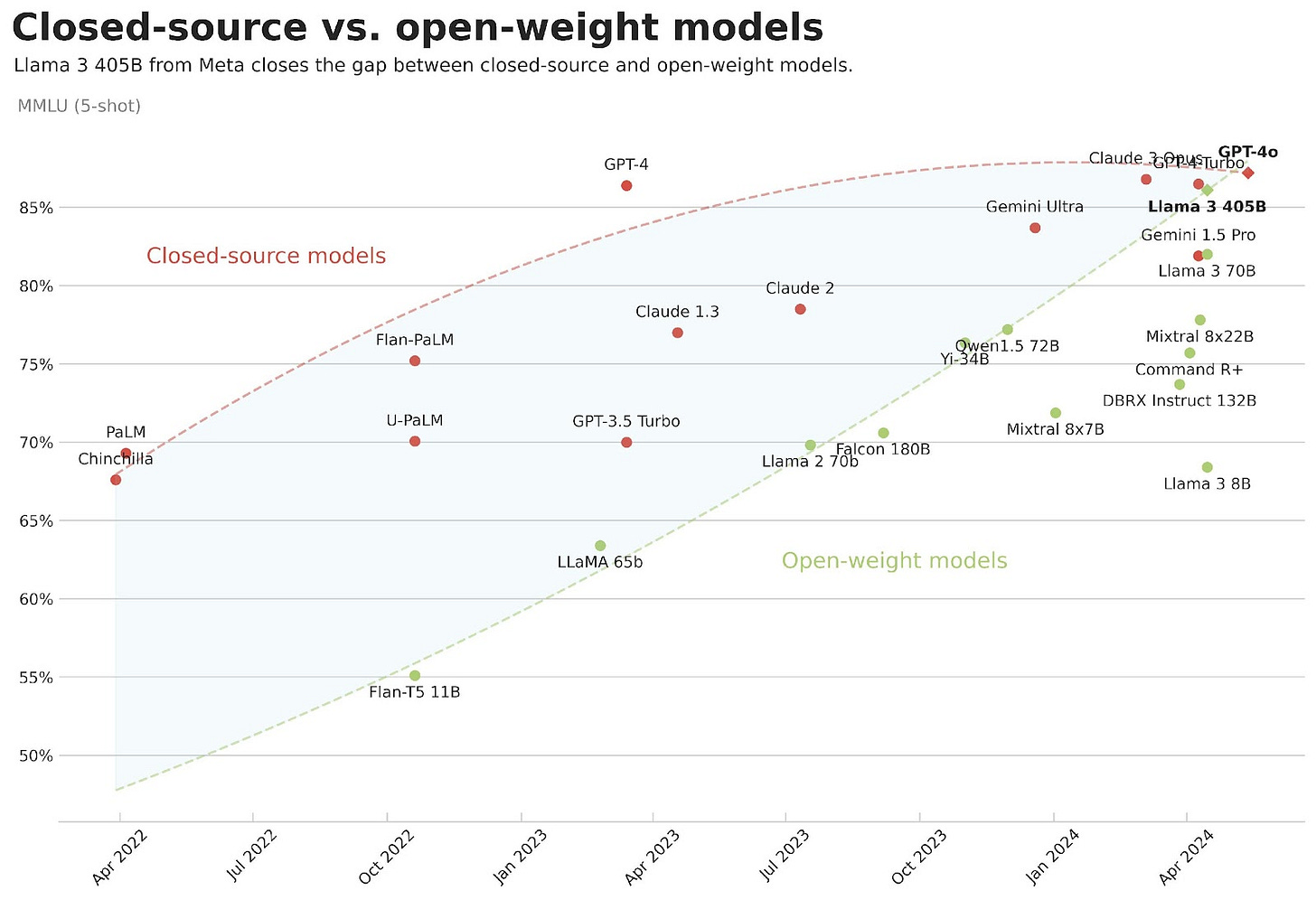

That early lead didn’t build a moat. And the sheer weight of spending still means OpenAI is fighting desperately, year after year, raising money to try to stay afloat just to stay on par with Google and Meta, in particular. Open-weight models have been catching up and staying on par for a while now, damaging OpenAI’s ability to monetize their models.

The world’s fundamental structure for who has the lead in AI did not change. And it’s not going to change, no matter how much the media freaks out at every twist and turn.

They’re all still underdogs

Anthropic is an underdog. Mistral is an underdog. OpenAI is an underdog. DeepSeek is an underdog. They are all swimming upstream with every factor weighted against them, except that they are smaller, more nimble, and carry less institutional baggage, which has been the only way they’ve kept up at all.

The crown is Google’s to lose [1], with the other big tech companies waiting for the stumble, and Nvidia happily still selling GPUs to power it all.

New foundational models?

New foundational model companies, unless they’re going into distinct areas with proprietary data that they can protect, have no moat.

Drug discovery AI, material design AI, or robotic control AI… all of those could be interesting for “foundational models.” However, those areas are also much harder to come by—and arguably, could consume even more capital than the language models because real-world data is expensive to generate.

Thanks for reading!

I hope you enjoyed this article. If you’d like to learn more about AI’s past, present, and future in an easy to understand way, I’m working on a book titled What You Need to Know About AI that will be published in 2025.

[1] This all being said, Google is probably still going to find some way to shoot itself in the foot. I couldn’t help thinking this constantly while writing the piece, even while believing that these are the fundamentals. Other folks who were/are at Google also tend to agree…
